TL;DR:

- Stanford researchers present a model that explains how the retina encodes natural visual scenes.

- The study examines how interactions among retinal neurons shape the signals the retina sends to the brain.

- The model predicts retinal responses to real-world scenes with high accuracy.

- Trained only on natural scenes, the interpretable model reproduces known retinal phenomena in motion analysis, adaptation, and predictive coding.

- The researchers dissected the model's ganglion cell computations, revealing the roles interneurons play in retinal processing.

- The model's three-layer structure mirrors the retina's layered architecture, and its internal units behave like recorded retinal cells.

- The work offers insight into how the visual system processes natural scenes.

Main AI News:

A central goal of sensory neuroscience is to understand the neural code the brain uses to represent natural visual scenes. A key open question is how neural responses to natural stimuli emerge from the interactions of many different cell types. The retina is a natural place to study this: it transmits all visual information to the brain, and it relies on a large network of interneurons to do so.

The retina has mostly been studied with artificial stimuli such as flickering light and white noise, and such experiments may not reflect how it processes real visual input. With more than fifty distinct interneuron types, how each one contributes to retinal processing is still far from fully understood.

Although a variety of approaches have identified specific retinal computations, how these computations combine during natural vision has remained unclear. In this study, the researchers show that a three-layer network model can predict retinal responses to natural scenes with high accuracy, nearly within the limits of experimental variability. To understand how the brain processes natural visual scenes, the team focused on the retina, the stage that sends visual signals to the brain.
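To make the idea of a three-layer network more concrete, here is a minimal toy sketch in Python. It assumes a convolutional design (two convolution-plus-rectification layers feeding a dense readout with a softplus output), a common choice for retina models that this article does not spell out. The layer sizes, random weights, helper names (`conv_layer`, `three_layer_model`, `random_params`), and the 1D stimulus are all hypothetical, and the time dimension is omitted for brevity. It only shows how a stimulus flows through the layers, not the trained Stanford model.

```python
import math
import random

# Toy sketch: hypothetical sizes and untrained random weights, not the published model.


def relu(x):
    return x if x > 0.0 else 0.0


def softplus(x):
    # Numerically stable log(1 + exp(x)); keeps output firing rates positive.
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def conv1d(signal, kernel):
    """'Valid' 1D convolution (cross-correlation, as in most CNN libraries)."""
    k = len(kernel)
    return [sum(signal[i + j] * kernel[j] for j in range(k))
            for i in range(len(signal) - k + 1)]


def conv_layer(channels_in, kernels, biases):
    """Each output channel sums convolutions over all input channels, then applies ReLU.

    kernels[o][c] is the kernel mapping input channel c to output channel o.
    """
    outputs = []
    for o, bias in enumerate(biases):
        summed = None
        for c, signal in enumerate(channels_in):
            response = conv1d(signal, kernels[o][c])
            summed = response if summed is None else [a + b for a, b in zip(summed, response)]
        outputs.append([relu(v + bias) for v in summed])
    return outputs


def three_layer_model(stimulus, params):
    """Stimulus -> layer 1 -> layer 2 -> ganglion-cell firing rates."""
    layer1 = conv_layer([stimulus], params["k1"], params["b1"])  # first interneuron layer
    layer2 = conv_layer(layer1, params["k2"], params["b2"])      # second interneuron layer
    flat = [v for channel in layer2 for v in channel]
    # Dense readout: each model ganglion cell pools over all layer-2 units.
    return [softplus(sum(wt * v for wt, v in zip(weights, flat)) + b)
            for weights, b in zip(params["w3"], params["b3"])]


def random_params(n_pixels, n1=4, k1=5, n2=4, k2=3, n_cells=2, seed=0):
    """Hypothetical untrained weights: n1/n2 channels with kernel widths k1/k2."""
    rng = random.Random(seed)

    def g():
        return rng.gauss(0.0, 0.5)

    flat_len = n2 * (n_pixels - k1 + 1 - k2 + 1)  # layer-2 units after two 'valid' convolutions
    return {
        "k1": [[[g() for _ in range(k1)]] for _ in range(n1)],
        "b1": [0.0] * n1,
        "k2": [[[g() for _ in range(k2)] for _ in range(n1)] for _ in range(n2)],
        "b2": [0.0] * n2,
        "w3": [[g() for _ in range(flat_len)] for _ in range(n_cells)],
        "b3": [0.0] * n_cells,
    }


stimulus = [math.sin(0.4 * x) for x in range(20)]  # hypothetical 1D luminance profile
rates = three_layer_model(stimulus, random_params(len(stimulus)))
print(rates)
```

Stacking convolutions means each second-layer unit sees a wider patch of the stimulus than a first-layer unit, loosely echoing how retinal circuits pool signals across successive layers.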

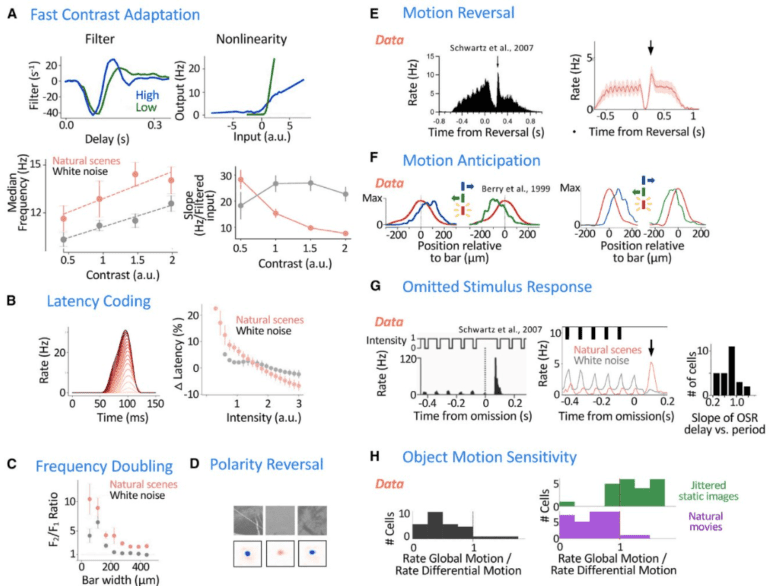

A key feature of the model is its interpretability: its internal structure can be examined directly. The responses of the model's internal interneuron units match independently recorded interneuron responses, suggesting that the model captures much of the activity of real retinal interneurons. When trained only on natural scenes, it reproduces a range of known phenomena in motion analysis, adaptation, and predictive coding. Models trained on white noise, by contrast, fail to reproduce these phenomena. Understanding natural visual processing therefore appears to require studying the retina with natural stimuli.

The team also developed a method to decompose each model ganglion cell's response into the contributions of individual model interneurons. This approach generates new hypotheses about how interneurons, with their distinct spatiotemporal responses, combine to produce retinal computations, including predictive ones.
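The article does not detail the decomposition method. One standard way to split a unit's output into contributions from its inputs is integrated gradients, sketched below for a hypothetical ganglion cell whose firing rate is a softplus of a weighted sum of model interneuron activations. The weights, activations, and blank-stimulus baseline are made up for illustration, and this should not be read as the researchers' exact procedure. A useful sanity check is the completeness property: the attributions add up to the change in firing rate between the baseline and the stimulus.

```python
import math


def softplus(x):
    # Numerically stable log(1 + exp(x)).
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def sigmoid(x):
    # Derivative of softplus; written to avoid overflow for large |x|.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def ganglion_rate(h, w, bias):
    """Hypothetical readout: rate = softplus(w . h + bias) over interneuron activations h."""
    return softplus(sum(wi * hi for wi, hi in zip(w, h)) + bias)


def integrated_gradients(h, baseline, w, bias, steps=200):
    """Attribute the rate change from baseline to h to each interneuron.

    Approximates the path integral with a midpoint Riemann sum along the
    straight line from baseline to h.
    """
    avg_grad = [0.0] * len(h)
    for s in range(steps):
        alpha = (s + 0.5) / steps
        point = [b0 + alpha * (hi - b0) for hi, b0 in zip(h, baseline)]
        z = sum(wi * pi for wi, pi in zip(w, point)) + bias
        slope = sigmoid(z)  # d softplus(z) / dz at this point on the path
        for i, wi in enumerate(w):
            avg_grad[i] += slope * wi / steps  # chain rule: d rate / d h_i
    return [(hi - b0) * gi for hi, b0, gi in zip(h, baseline, avg_grad)]


h = [0.8, 0.1, 1.5]         # hypothetical interneuron activations to a stimulus
baseline = [0.0, 0.0, 0.0]  # hypothetical activations to a blank stimulus
w = [1.2, -0.7, 0.4]        # hypothetical readout weights
bias = -0.5

attr = integrated_gradients(h, baseline, w, bias)
print("per-interneuron contributions:", attr)
print("sum:", sum(attr), "rate change:",
      ganglion_rate(h, w, bias) - ganglion_rate(baseline, w, bias))
```

In a deeper model the gradient would come from backpropagation through every layer rather than the closed-form slope used here; the attribution formula itself stays the same.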

The natural image sequences were presented at 30 frames per second. A new image appeared every second, and each image was jittered along a random-walk path to mimic the small eye movements made during fixation. The result is spatiotemporal input that closely resembles what the retina encounters in natural viewing.
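The stimulus protocol can be sketched as a frame generator: 30 frames per second, a new image every second (every 30 frames), and a crop window that takes one random-walk step per frame to mimic fixational eye movements. The crop size, step size, reset-to-centre rule, the name `make_stimulus`, and the synthetic images in the usage lines are assumptions for illustration, not parameters reported in the article.

```python
import random

FPS = 30  # frames per second, as described in the article


def make_stimulus(images, n_frames, crop=(8, 8), step=1, seed=0):
    """Jittered image sequence: a new image every FPS frames, crop window does a random walk.

    images: list of 2D lists (rows of pixel values), each at least as large as crop.
    crop, step, and the reset-to-centre rule are hypothetical choices.
    Returns a list of (image_index, (row, col), frame) tuples.
    """
    rng = random.Random(seed)
    ch, cw = crop
    frames = []
    row = col = 0
    for t in range(n_frames):
        idx = (t // FPS) % len(images)
        img = images[idx]
        max_r, max_c = len(img) - ch, len(img[0]) - cw
        if t % FPS == 0:
            # New image each second: start the walk at the centre of the allowed range.
            row, col = max_r // 2, max_c // 2
        else:
            # One random-walk step per frame, clamped so the window stays inside the image.
            row = min(max(row + rng.choice((-step, 0, step)), 0), max_r)
            col = min(max(col + rng.choice((-step, 0, step)), 0), max_c)
        frame = [r[col:col + cw] for r in img[row:row + ch]]
        frames.append((idx, (row, col), frame))
    return frames


# Usage with three hypothetical 16x16 "images" (synthetic gradients, not real photographs).
images = [[[(r * 16 + c + 40 * k) % 256 for c in range(16)] for r in range(16)]
          for k in range(3)]
stim = make_stimulus(images, n_frames=3 * FPS)  # three seconds of stimulus
print([idx for idx, _, _ in stim[::FPS]])  # → [0, 1, 2]
```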

Conclusion:

The model developed by the Stanford researchers is a significant step toward understanding the mechanisms that govern natural visual processing. Its accuracy in predicting retinal responses to real-world scenes points to potential applications ranging from advanced vision technologies to more effective AI models for image analysis and interpretation. As the model clarifies how visual perception works, businesses across industries may be able to use these insights to refine their products and services, improve user experiences, and open new avenues for innovation in the visual technology market.