TL;DR:

- Researchers at UC San Diego have developed a noninvasive method to distinguish hand gestures using brain imaging.

- The findings pave the way for a brain-computer interface that could help individuals with paralysis or physical challenges.

- The study used magnetoencephalography (MEG), a safe and accurate technique that detects brain activity without invasive procedures.

- MEG offers advantages over other brain-computer interface techniques: unlike electrocorticography (ECoG), it requires no surgery, and it localizes brain activity more precisely than scalp electroencephalography (EEG).

- A deep learning model called MEG-RPSnet successfully analyzed MEG data to identify hand gestures with high accuracy.

- Results showed over 85% accuracy in distinguishing gestures, comparable to invasive ECoG interfaces.

- MEG measurements from only half of the brain regions sampled still yielded accurate results, losing just 2-3% in accuracy, which suggests future helmets may need fewer sensors.

- The research sets a foundation for the development of MEG-based brain-computer interfaces.

- This breakthrough offers hope for the future of noninvasive control of assistive devices through the power of the mind.

Main AI News:

Researchers at the University of California San Diego have made a groundbreaking discovery in the field of brain-computer interfaces. By analyzing data solely from noninvasive brain imaging, they have successfully differentiated between hand gestures made by individuals without any input from the hands themselves. This development holds tremendous potential for the future of noninvasive brain-computer interfaces, offering hope to patients facing physical challenges such as paralysis or amputated limbs.

The study, recently published in the journal Cerebral Cortex, reports the most promising results to date in distinguishing single-hand gestures using a completely noninvasive technique called magnetoencephalography (MEG). Dr. Mingxiong Huang, co-director of the MEG Center at the Qualcomm Institute at UC San Diego, explained the primary objective of their research: “Our goal was to bypass invasive components and provide a safe and accurate option for developing a brain-computer interface that could ultimately help patients.”

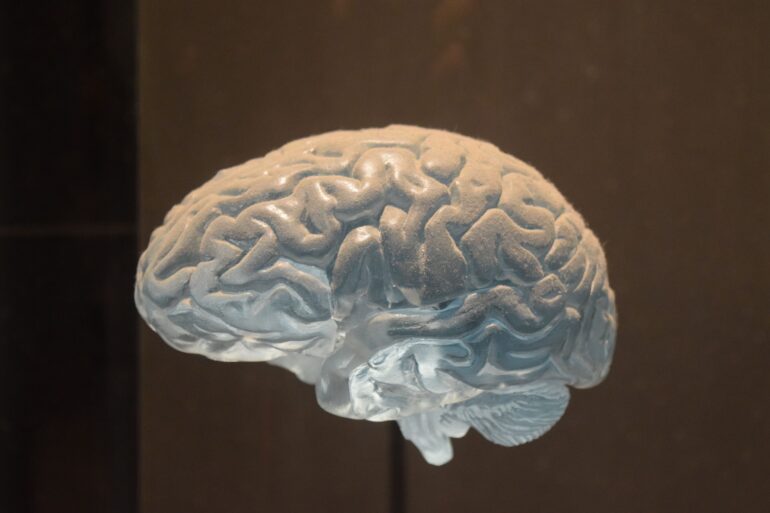

MEG uses a helmet equipped with a specialized array of 306 sensors that detect the magnetic fields produced by neuronal electric currents within the brain. Other brain-computer interface techniques have drawbacks: electrocorticography (ECoG) requires surgically implanting electrodes on the surface of the brain, and scalp electroencephalography (EEG) localizes brain activity less precisely. MEG, by contrast, is both safe and noninvasive.

Dr. Roland Lee, director of the MEG Center at the UC San Diego Qualcomm Institute, further emphasized the advantages of MEG: “With MEG, I can see the brain thinking without taking off the skull and putting electrodes on the brain itself.” Because the MEG helmet sits outside the head, it avoids expensive brain surgery and eliminates the risks of electrode breakage and brain infection. Lee compared MEG to taking a patient’s temperature: “MEG measures the magnetic energy your brain is putting out like a thermometer measures the heat your body puts out. That makes it completely noninvasive and safe.”

In the study, 12 volunteers wore the MEG helmet and were instructed to make the hand gestures used in the game Rock Paper Scissors. The researchers then analyzed the recordings with MEG-RPSnet, a high-performing deep learning model developed by Yifeng (“Troy”) Bu, a Ph.D. student in electrical and computer engineering at the UC San Diego Jacobs School of Engineering and the first author of the paper. Bu’s network combined spatial and temporal features of the MEG signals and outperformed previous models.

The research team achieved an accuracy of over 85% in distinguishing between hand gestures, a result comparable to earlier studies that used the invasive ECoG brain-computer interface with much smaller sample sizes. The team also found that MEG measurements from only half of the brain regions sampled still produced accurate results, losing just 2-3% in accuracy. This finding suggests that future MEG helmets may need fewer sensors, further streamlining the technology.

Looking ahead, Bu acknowledged the significance of their work: “This work builds a foundation for future MEG-based brain-computer interface development.” The researchers’ pioneering achievements open up new avenues for individuals with physical limitations, offering the possibility of controlling assistive devices with their minds. As research in this field progresses, the prospect of a more inclusive and accessible future becomes increasingly tangible.

Conclusion:

The development of a noninvasive brain-computer interface using magnetoencephalography (MEG) and deep learning represents a significant milestone in the field of assistive technologies. This breakthrough has the potential to transform the market by giving individuals with paralysis, amputated limbs, or other physical challenges a means to control devices using their thoughts. MEG matches the accuracy reported for invasive electrocorticography (ECoG) without requiring surgery, and it localizes brain activity more precisely than scalp electroencephalography (EEG). This combination of safety and accuracy could make it a game-changer in the market.

As further advancements are made in MEG-based brain-computer interface development, we can anticipate an increased demand for such technologies, creating new opportunities and transforming the landscape of assistive devices and healthcare. Businesses operating in this sector should closely monitor these developments and consider incorporating MEG technology into their product offerings to stay at the forefront of this emerging market.