TL;DR:

- Deep learning “large language models” have improved natural language processing in medical tasks.

- Prompt-based instructions enhance the performance of generative language models.

- Breaking down tasks into smaller segments improves task performance, especially in healthcare.

- Curai Health introduces Dialog-Enabled Resolving Agents (DERA) to improve performance on natural language tasks.

- DERA assigns specific roles to agents, improving focus and alignment with objectives.

- DERA excels in tasks like medical conversation summarization and care plan creation.

- Question-answering performance sees minimal improvement with DERA.

- The researchers release a new open-ended medical question-answering dataset based on MedQA to support future research.

- Chaining strategies show promise in enhancing performance.

- Applying prompting systems to real-world scenarios remains a challenge.

Main AI News:

Deep learning "large language models" (LLMs) have taken natural language processing to new heights. These models excel not only at predicting language but also at a wide range of natural language tasks. In the medical field, for instance, LLM-based approaches have proved valuable for information extraction, question answering, and summarization. At the heart of these techniques are prompts: instructions to the language model that can contain a task specification, rules the prediction must follow, and even sample inputs and outputs.
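The three prompt ingredients named above (task specification, rules, and sample input/output pairs) can be sketched as a small prompt builder. This is a hypothetical illustration: the `Prompt` class, its field names, and the "Task:/Rules:/Example" layout are assumptions for demonstration, not a format used by any particular model or by the researchers.

```python
from dataclasses import dataclass, field


@dataclass
class Prompt:
    """Hypothetical container for the three prompt parts described in the text."""
    task: str                                                      # task specification
    rules: list[str] = field(default_factory=list)                 # rules for the prediction
    examples: list[tuple[str, str]] = field(default_factory=list)  # sample (input, output) pairs

    def render(self, query: str) -> str:
        """Assemble the parts into one instruction string for a language model."""
        lines = [f"Task: {self.task}"]
        if self.rules:
            lines.append("Rules:")
            lines.extend(f"- {rule}" for rule in self.rules)
        for i, (example_in, example_out) in enumerate(self.examples, start=1):
            lines.append(f"Example {i} input: {example_in}")
            lines.append(f"Example {i} output: {example_out}")
        lines.append(f"Input: {query}")
        lines.append("Output:")  # the model continues generating from here
        return "\n".join(lines)


prompt = Prompt(
    task="List every medication mentioned in the patient message.",
    rules=["Return a comma-separated list.", "Return 'none' if no medication is mentioned."],
    examples=[("I took ibuprofen last night.", "ibuprofen")],
)
text = prompt.render("I'm on lisinopril and just started metformin.")
print(text)
```

Dropping the `examples` list turns this into a zero-shot prompt; keeping it gives the model a worked demonstration of the expected output format.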

One of the remarkable capabilities of generative language models is that they can produce results from natural language instructions alone, with no task-specific training. This puts the technology within reach of non-experts. Even so, recent research suggests that breaking a complex task into smaller steps can improve performance, particularly in healthcare. One such approach has two key components: an iterative process that refines an initial output, and a guiding agent that directs the model's focus during each iteration, which also makes the overall procedure easier to follow.
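Breaking a task into smaller steps is often implemented as a prompt chain, where each sub-task's output becomes the next sub-task's input. The sketch below is a generic illustration of that idea, not DERA itself. `run_chain`, the step instructions, and `fake_model` (an offline stand-in for a real LLM call) are all hypothetical.

```python
from typing import Callable

# Hypothetical: a "model" is any function that maps prompt text to generated text.
Model = Callable[[str], str]


def run_chain(model: Model, steps: list[str], source: str) -> list[str]:
    """Run a task as a sequence of smaller prompts; each step sees the previous output."""
    outputs = []
    context = source
    for instruction in steps:
        result = model(f"{instruction}\n\n{context}")
        outputs.append(result)
        context = result  # the next sub-task works on this step's output
    return outputs


def fake_model(prompt: str) -> str:
    # Offline stand-in for an LLM: tags its input with the instruction's first word.
    instruction, _, body = prompt.partition("\n\n")
    return f"[{instruction.split()[0]}] {body}"


steps = [
    "List the symptoms mentioned.",
    "Group the symptoms by body system.",
    "Write a one-paragraph summary.",
]
outputs = run_chain(fake_model, steps, "Patient reports cough and fever.")
print(outputs[-1])  # [Write] [Group] [List] Patient reports cough and fever.
```

Keeping every intermediate output, rather than only the last one, is what makes a chain easier to inspect than a single monolithic prompt.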

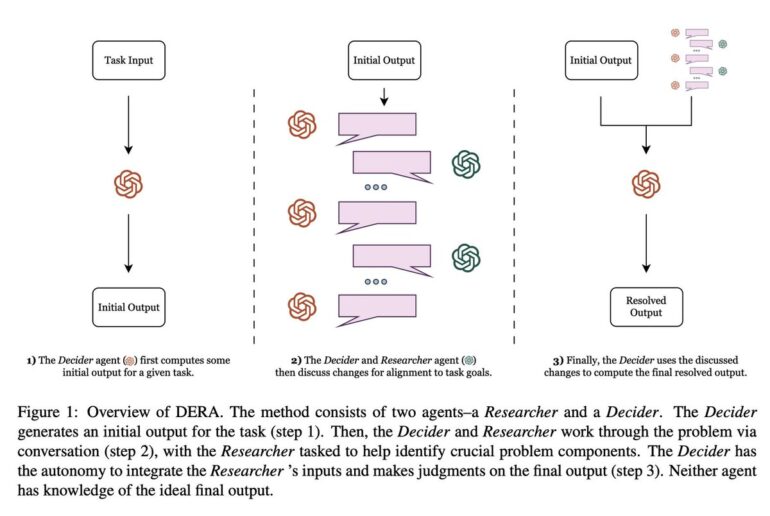

With the arrival of GPT-4, researchers have a powerful conversational model at their disposal. Building on this progress, a team from Curai Health introduces Dialog-Enabled Resolving Agents (DERA), a framework for exploring how dialogue between agents can improve performance on natural language tasks. By assigning each dialogue agent a specific role, the researchers aim to focus each agent on particular aspects of the task while keeping both aligned with the overall objective. DERA uses two agents. The Researcher gathers relevant information and suggests areas for the other agent to focus on, while the Decider analyzes that input and determines the optimal course of action.

The researchers evaluate DERA on three distinct clinical tasks, each requiring different textual inputs and levels of expertise. Medical conversation summarization, for example, aims to produce an accurate summary of a doctor-patient dialogue, free of factual errors and omissions. Care plan creation, by contrast, draws on extensive information and produces lengthy outputs that support clinical decision-making.
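The Researcher/Decider exchange can be sketched as a loop in which the Researcher flags an issue in the current draft and the Decider revises it, until the Researcher has nothing left to flag. This is a minimal sketch under stated assumptions: the function names, signatures, and `max_turns` cap are hypothetical, and the toy agents use string matching where the real agents would be GPT-4 prompts. The toy Decider always accepts a suggestion, whereas a real Decider could reject it.

```python
from typing import Callable, Optional

# Hypothetical signatures; in a real system each agent would wrap an LLM prompt.
ResearcherFn = Callable[[str, str], Optional[str]]  # (source, draft) -> suggestion, or None if done
DeciderFn = Callable[[str, str, str], str]          # (source, draft, suggestion) -> revised draft


def refine_with_dialogue(source: str, draft: str, researcher: ResearcherFn,
                         decider: DeciderFn, max_turns: int = 3):
    """Alternate Researcher suggestions and Decider revisions until no suggestions remain."""
    transcript = []
    for _ in range(max_turns):
        suggestion = researcher(source, draft)
        if suggestion is None:  # Researcher has nothing left to flag
            break
        draft = decider(source, draft, suggestion)
        transcript.append((suggestion, draft))
    return draft, transcript


def _sentences(text: str) -> list[str]:
    return [s.strip().rstrip(".") for s in text.split(". ") if s.strip()]


def toy_researcher(source: str, draft: str) -> Optional[str]:
    # Flags the first draft sentence that does not appear verbatim in the source.
    for sentence in _sentences(draft):
        if sentence not in source:
            return sentence
    return None


def toy_decider(source: str, draft: str, suggestion: str) -> str:
    # Always accepts the suggestion by dropping the flagged sentence.
    kept = [s for s in _sentences(draft) if s != suggestion]
    return ". ".join(kept) + "." if kept else ""


source = "Patient reports cough for 3 days. Denies fever. Takes lisinopril."
draft = "Patient reports cough for 3 days. Has a fever. Takes lisinopril."
final, transcript = refine_with_dialogue(source, draft, toy_researcher, toy_decider)
print(final)  # Patient reports cough for 3 days. Takes lisinopril.
```

Separating "what to look at" (Researcher) from "what to change" (Decider) mirrors the role split described above; the loop also records a transcript, which makes each correction traceable.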

The researchers aim to generate factually correct and relevant content across all three tasks. Medical question answering, for instance, is an open-ended task that requires knowledge-based responses. For this task, they use two question-answering datasets. In evaluations using human annotations, DERA outperforms base GPT-4 on both care plan creation and medical conversation summarization across several metrics. Quantitative analyses also show that DERA corrects inaccuracies present in medical conversation summaries.
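The article does not say how these corrections were measured. One simple, hypothetical metric, assuming errors were deliberately inserted into summaries and can be located by exact phrase matching, is the fraction of inserted error phrases that no longer appear after refinement. `correction_rate` is an illustrative name, and substring matching is a crude proxy that would miss paraphrased errors.

```python
def correction_rate(injected_errors: list[str], refined_summary: str) -> float:
    """Fraction of deliberately injected error phrases absent from the refined summary."""
    if not injected_errors:
        raise ValueError("need at least one injected error")
    refined = refined_summary.lower()
    # Case-insensitive check: an error counts as fixed if its phrase is gone.
    fixed = sum(1 for error in injected_errors if error.lower() not in refined)
    return fixed / len(injected_errors)


errors = ["fever for 2 weeks", "allergic to penicillin"]
refined = "Cough for 3 days; denies fever. Allergic to penicillin."
print(correction_rate(errors, refined))  # 0.5
```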

In question answering, however, the researchers observe little difference in performance between GPT-4 and DERA. They hypothesize that the approach works best on longer-form generation problems that involve many intricate details. To support further study, they release a new open-ended medical question-answering dataset based on MedQA, which paves the way for further exploration and evaluation of question-answering systems. Chaining strategies, such as chain-of-thought reasoning and task-specific methods, offer promising avenues for improvement.
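The article does not describe how the open-ended dataset was built. As a rough, hypothetical sketch, one way to derive an open-ended item from a multiple-choice one is to drop the answer options and keep the correct option's text as a reference answer. The record shape (`question`, `options`, `answer_idx`) is an assumption, and the example item is invented for illustration. Questions whose stem depends on the options ("Which of the following...") are only flagged here, since rewording them properly needs human or model rewriting.

```python
def to_open_ended(item: dict) -> dict:
    """Turn one multiple-choice record into an open-ended question with a reference answer."""
    # Assumed record shape: {"question": str, "options": {letter: text}, "answer_idx": letter}
    question = item["question"]
    return {
        "question": question,
        "reference_answer": item["options"][item["answer_idx"]],
        # Stems such as "Which of the following..." only make sense alongside the options,
        # so flag them for manual rewording instead of guessing a rewrite.
        "needs_rewording": "of the following" in question.lower(),
    }


item = {
    "question": "A 30-year-old woman has fever and a productive cough. "
                "Which of the following is the most likely diagnosis?",
    "options": {"A": "Asthma", "B": "Community-acquired pneumonia", "C": "Sarcoidosis"},
    "answer_idx": "B",
}
print(to_open_ended(item))
```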

Chain-of-thought techniques improve performance on some tasks by prompting the model to reason through a problem step by step, much as an expert would. These methods aim to coax the most appropriate generation out of the underlying language model. A fundamental limitation of such prompting systems, however, is that they rely on predetermined prompts designed for specific purposes, such as writing explanations or correcting abnormalities in the output. Although progress has been made in this direction, applying these systems to real-world scenarios remains a significant challenge.
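The difference between a direct prompt and a chain-of-thought prompt can be shown with two templates and a parser that pulls the final answer out of the reasoning. This sketch uses the zero-shot "Let's think step by step" phrasing; few-shot chain-of-thought, which supplies worked reasoning examples, is a common alternative. The template wording, the `Answer:` convention, and the sample completion are assumptions for illustration.

```python
from typing import Optional


def direct_prompt(question: str) -> str:
    # Asks for the answer immediately, with no intermediate reasoning.
    return f"Question: {question}\nAnswer:"


def cot_prompt(question: str) -> str:
    # Zero-shot chain-of-thought: ask for intermediate reasoning before the final answer.
    return (f"Question: {question}\n"
            "Let's think step by step, then give the final answer on a line starting with 'Answer:'.")


def extract_answer(completion: str) -> Optional[str]:
    # Take the last line that starts with "Answer:" so the reasoning steps are ignored.
    for line in reversed(completion.splitlines()):
        if line.strip().startswith("Answer:"):
            return line.strip()[len("Answer:"):].strip()
    return None


completion = ("Fever plus productive cough suggests infection.\n"
              "Focal crackles point to the lung parenchyma.\n"
              "Answer: community-acquired pneumonia")
print(extract_answer(completion))  # community-acquired pneumonia
```

The fixed template is itself an example of the limitation described above: it is a predetermined prompt written for one purpose, and a model that ignores the `Answer:` convention defeats the parser.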

Conclusion:

Dialog-Enabled Resolving Agents (DERA) mark a notable step forward for natural language processing. Using dialogue between agents to refine outputs iteratively has proven beneficial on long-form medical tasks such as medical conversation summarization and care plan creation. However, further research is needed to improve question-answering performance and to address the challenges of real-world applications. The market can expect an increased focus on using dialogue and iterative refinement to improve natural language tasks, particularly in healthcare settings.