TL;DR:

- Dify.AI is an open-source platform addressing data security challenges in AI development.

- It offers self-hosting deployment, ensuring data processing occurs on independent servers.

- Users can work with various commercial and open-source models, tailoring choices to their needs.

- The RAG engine integrates with a range of vector databases for storage and retrieval and supports various data formats.

- Dify.AI promotes flexibility and extensibility through easy integration with APIs and code enhancements.

- It simplifies complex technologies, enabling non-technical team members to contribute effectively.

Main AI News:

AI developers face a common challenge: keeping data secure and confidential, particularly when relying on external services. Businesses and individuals alike often have strict policies on how sensitive information is stored and processed. Conventional solutions typically send data to remote servers, which raises concerns about data protection compliance and control over the data.

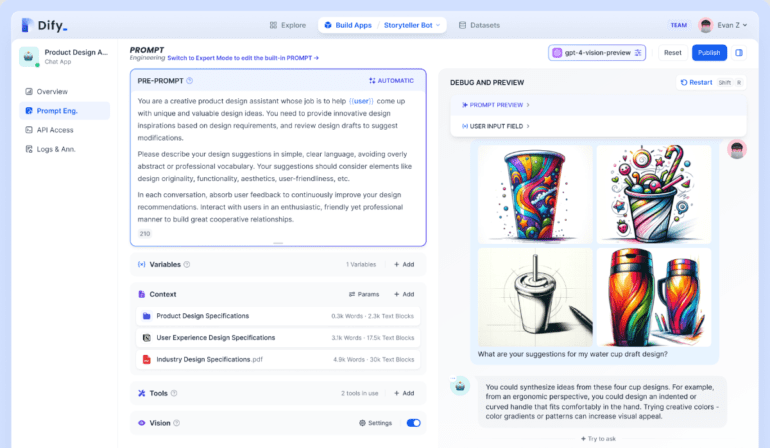

Enter Dify.AI, an open-source platform that aims to address the challenges raised by OpenAI’s latest Assistants API. Dify.AI takes a different route, offering self-hosted deployment so that data processing occurs on independently deployed servers. Confidential data therefore stays within internal server infrastructure, in line with the strict data governance policies of businesses and individuals.

Dify.AI also offers multi-model support, letting users work with a range of commercial and open-source models. Users can switch between models based on cost, use case, and language requirements. The platform is compatible with models from OpenAI and Anthropic as well as the open-source Llama 2, which can be deployed locally or accessed as a Model as a Service. Users can also adjust parameters and training methods to build custom language models suited to their business needs and data characteristics.
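To make the idea of switching models by cost, use case, and language more concrete, here is a minimal Python sketch. It is not Dify.AI's API: the `ModelOption` type, the `pick_model` function, the model names, and the per-token costs are all hypothetical placeholders chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelOption:
    """One entry in a hypothetical model catalog (not a Dify.AI type)."""
    name: str
    cost_per_1k_tokens: float  # placeholder value, not a real price
    languages: frozenset       # language codes the model is assumed to handle
    self_hosted: bool          # True if the model runs on our own servers


# Illustrative catalog; names and numbers are invented for this sketch.
CATALOG = [
    ModelOption("hosted-model-a", 0.03, frozenset({"en", "zh", "de"}), False),
    ModelOption("hosted-model-b", 0.01, frozenset({"en"}), False),
    ModelOption("local-open-model", 0.0, frozenset({"en", "zh"}), True),
]


def pick_model(language, max_cost_per_1k, require_self_hosted=False):
    """Return the cheapest catalog entry that satisfies all constraints."""
    candidates = [
        m for m in CATALOG
        if language in m.languages
        and m.cost_per_1k_tokens <= max_cost_per_1k
        and (m.self_hosted or not require_self_hosted)
    ]
    if not candidates:
        raise ValueError("no model satisfies the constraints")
    return min(candidates, key=lambda m: m.cost_per_1k_tokens)


print(pick_model("de", 0.05).name)  # hosted-model-a
print(pick_model("en", 0.02).name)  # local-open-model
```

Keeping the selection rule in one small function means a team could change its budget or data-residency constraint without touching the rest of an application.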

A standout feature of Dify.AI is its retrieval-augmented generation (RAG) engine, which the platform positions as going beyond the Assistants API by integrating with a range of vector databases. Users can choose the storage and retrieval solutions that best fit their data requirements. The RAG engine offers several indexing strategies to suit different business needs. It supports various text and structured data formats and synchronizes with external data sources through APIs, improving semantic relevance without requiring major infrastructure changes.
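The general pattern behind a RAG engine with a swappable vector database can be sketched in a few lines of Python. This is an illustrative toy, not Dify.AI's implementation: the `VectorStore` interface, `InMemoryStore`, `embed`, and `build_prompt` are hypothetical names, and the bag-of-words "embedding" stands in for a real embedding model.

```python
import math
from collections import Counter
from typing import List, Protocol


def embed(text: str) -> Counter:
    """Toy bag-of-words vector; a real system would call an embedding model."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


class VectorStore(Protocol):
    """Hypothetical interface any storage backend would implement."""
    def add(self, doc: str) -> None: ...
    def search(self, query: str, top_k: int) -> List[str]: ...


class InMemoryStore:
    """Simplest possible backend; a vector database could replace it."""
    def __init__(self) -> None:
        self._docs = []

    def add(self, doc: str) -> None:
        self._docs.append((doc, embed(doc)))

    def search(self, query: str, top_k: int = 1) -> List[str]:
        q = embed(query)
        ranked = sorted(self._docs, key=lambda p: cosine(q, p[1]), reverse=True)
        return [doc for doc, _ in ranked[:top_k]]


def build_prompt(store: VectorStore, question: str, top_k: int = 1) -> str:
    """Retrieve the most relevant documents and prepend them to the question."""
    context = "\n".join(store.search(question, top_k))
    return f"Context:\n{context}\n\nQuestion: {question}"


store = InMemoryStore()
store.add("refunds are processed within five days")
store.add("the office is closed on public holidays")
print(build_prompt(store, "how are refunds processed"))
```

Because `build_prompt` depends only on the `VectorStore` interface, the storage backend can be swapped without changing the retrieval logic, which is the kind of flexibility the paragraph above describes.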

Dify.AI is designed for flexibility and extensibility, allowing new functions or services to be integrated through APIs and code enhancements. It can be integrated with existing workflows and other open-source systems, enabling quick data sharing and workflow automation. Because the code is open, developers can modify it directly to improve service integration and customize the user experience.

To support teamwork, Dify.AI simplifies complex technologies such as RAG and fine-tuning, making them accessible to non-technical team members. This lets teams focus on business objectives rather than coding details. Ongoing data feedback loops, driven by logs and annotations, allow teams to keep refining their applications and models.

Conclusion:

Dify.AI’s approach to data security, model flexibility, and simplified technology access positions it as a strong contender in the market. By letting businesses keep control of their data and customize their setup, it fosters trust and efficiency in AI development and stands out in the evolving landscape of AI application platforms.