- Traditional heuristic task management struggles with the demands of quantum cloud computing.

- DRLQ introduces Deep Reinforcement Learning (DRL) for dynamic task placement.

- Uses a Deep Q Network (DQN) architecture with Rainbow DQN enhancements.

- Aims to minimize task completion time and reduce rescheduling needs.

- Integrates features like Double DQN, Prioritized Replay, and Distributional RL.

- In experiments, reduces total task completion time by 37.81% to 72.93% compared with heuristic methods.

- Effectively eliminates task rescheduling in evaluation scenarios.

Main AI News:

In quantum cloud computing, traditional heuristic approaches to task management often fall short. Quantum systems are intricate enough to demand adaptive strategies that allocate tasks efficiently while maximizing resource utilization. Current methods frequently produce mismatched task placements and excessive rescheduling, which undermines overall system efficiency.

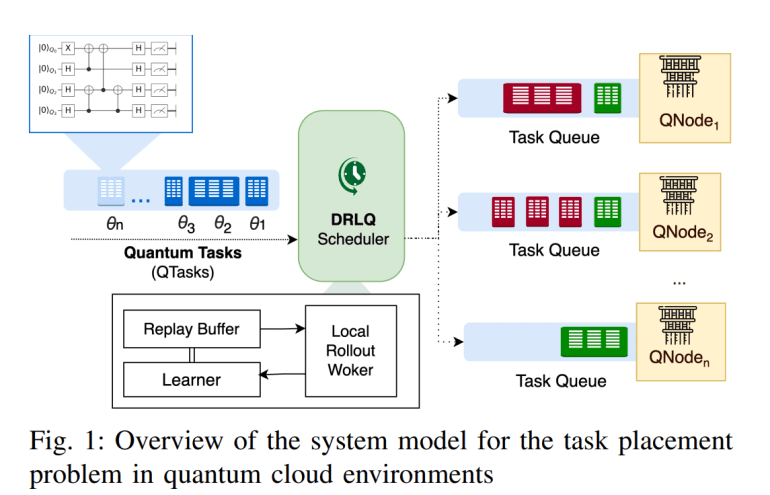

Enter DRLQ, an approach developed by researchers from the University of Melbourne and Data61, CSIRO. DRLQ applies Deep Reinforcement Learning (DRL), specifically a Deep Q Network (DQN) architecture enhanced with Rainbow DQN techniques, to the problem of quantum task placement. By learning continuously from its interactions with a quantum cloud computing environment, DRLQ aims to shorten task completion times and minimize the need for disruptive rescheduling.

The framework integrates advanced DQN features such as Double DQN, Prioritized Replay, Multi-step Learning, Distributional RL, and Noisy Nets. These enhancements streamline model training and improve its adaptability to diverse quantum computation scenarios. For each incoming quantum task (QTask), DRLQ selects the most suitable quantum computation node (QNode) based on criteria such as qubit number, circuit depth, and arrival time. The goal is to reduce overall task response time while limiting task rescheduling, a critical factor in maintaining operational continuity.
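To make the placement step more concrete, here is a minimal sketch of two ideas mentioned above: choosing a QNode greedily from per-node Q-value estimates, and computing a standard Double DQN learning target. This is not the DRLQ authors' code. The names `QTask`, `encode_task`, `greedy_qnode`, and `double_dqn_target` are hypothetical, the Q-values are placeholder numbers rather than network outputs, and the reward (a negative completion time) is an assumption made for illustration.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass(frozen=True)
class QTask:
    # Placement criteria named in the article; the field names are hypothetical.
    qubit_number: int
    circuit_depth: int
    arrival_time: float


def encode_task(task: QTask) -> List[float]:
    """Flatten a task into a numeric feature vector (assumed state encoding)."""
    return [float(task.qubit_number), float(task.circuit_depth), task.arrival_time]


def greedy_qnode(q_values: Sequence[float]) -> int:
    """Return the index of the QNode with the highest estimated Q-value."""
    if not q_values:
        raise ValueError("at least one QNode is required")
    return max(range(len(q_values)), key=lambda i: q_values[i])


def double_dqn_target(
    reward: float,
    gamma: float,
    next_q_online: Sequence[float],
    next_q_target: Sequence[float],
    done: bool,
) -> float:
    """Standard Double DQN target.

    The online network picks the next action; the target network evaluates it:
        y = r + gamma * Q_target(s', argmax_a Q_online(s', a))
    """
    if done:
        return reward
    best_action = greedy_qnode(next_q_online)
    return reward + gamma * next_q_target[best_action]


if __name__ == "__main__":
    task = QTask(qubit_number=5, circuit_depth=20, arrival_time=3.5)
    print("state features:", encode_task(task))

    # Placeholder Q-value estimates for three QNodes (not real model outputs).
    online_q = [0.1, 0.5, 0.3]
    target_q = [1.0, 2.0, 4.0]
    print("chosen QNode index:", greedy_qnode(online_q))

    # Assumed reward: negative completion time of the placed task.
    y = double_dqn_target(-2.0, 0.9, online_q, target_q, done=False)
    print("Double DQN target:", y)
```

Separating action selection (online estimates) from action evaluation (target estimates) is what distinguishes Double DQN from the plain DQN target, which takes the maximum over the target estimates directly.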

Experimental evaluations using the QSimPy simulation toolkit show substantial performance gains with DRLQ. Compared with traditional heuristic methods, DRLQ reduces total quantum task completion time by 37.81% to 72.93%. It also effectively eliminates task rescheduling attempts under the evaluation conditions, in sharp contrast with existing methods that frequently require them.
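For readers who want to interpret the reported percentages, a reduction in completion time is conventionally computed relative to the baseline. The sketch below assumes that convention; the function name `percent_reduction` and the example times are hypothetical and are not taken from the DRLQ experiments.

```python
def percent_reduction(baseline_time: float, new_time: float) -> float:
    """Percentage by which new_time is lower than baseline_time."""
    if baseline_time <= 0:
        raise ValueError("baseline_time must be positive")
    return (baseline_time - new_time) / baseline_time * 100.0


if __name__ == "__main__":
    # Hypothetical totals: a heuristic takes 200 s, a learned policy takes 150 s.
    print(f"{percent_reduction(200.0, 150.0):.2f}% reduction")
```

Under this definition, a 50% reduction means the total completion time is halved.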

DRLQ exemplifies the future of quantum task management, where adaptive learning and sophisticated algorithms converge to unlock unprecedented efficiency in quantum cloud computing environments.

Conclusion:

The introduction of DRLQ marks a significant step in quantum task management, promising greater efficiency and fewer operational disruptions in quantum cloud computing environments. By integrating advanced reinforcement learning techniques, DRLQ optimizes task placement and sets a precedent for applying AI to complex computational challenges. As quantum computing continues to evolve, solutions like DRLQ are poised to reshape the market by offering scalable, adaptive strategies that maximize resource utilization and minimize downtime, advancing the capabilities of quantum cloud services.