TL;DR:

- Large Language Models (LLMs) have driven much of the recent progress in AI.

- A key challenge is improving LLMs' ability to reflect on and correct their own outputs.

- Existing post-hoc prompting methods depend on the model's own feedback, which is often erratic or overconfident.

- Researchers from Zhejiang University and OPPO Research Institute introduce Self-Contrast, a new approach to LLM self-reflection.

- Self-Contrast first generates diverse perspectives on how to solve a problem, encouraging exploration.

- It then contrasts the resulting solutions to surface the discrepancies between them.

- A checklist synthesized from those discrepancies guides the model as it re-examines and corrects its response.

- According to the researchers, Self-Contrast improves the accuracy and stability of LLM self-reflection and reduces bias.

- The versatility and effectiveness of Self-Contrast are evident across various models and tasks.

Main AI News:

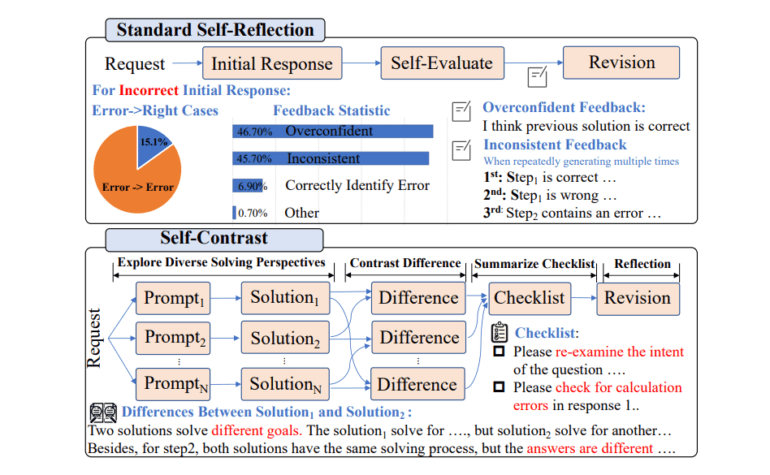

Large Language Models (LLMs) have been at the forefront of recent technological progress, performing exceptionally well across diverse domains. Strengthening their capacity for reflective reasoning and self-improvement, however, remains a formidable challenge in AI development. Conventional approaches, which rely heavily on external feedback, often do little to build a model's own ability to correct itself accurately.

To address this challenge, researchers at Zhejiang University and OPPO Research Institute propose Self-Contrast. The method departs from conventional post-hoc prompting strategies, which have proven limited in guiding a model toward accurate self-reflection and refinement. The core problem with these strategies is that they depend on the model's own assessment of its output, and that self-feedback is often capricious or overconfident. As a result, LLMs frequently give stubborn or inconsistent feedback on their own answers, which undermines their ability to correct themselves.

Self-Contrast is a multi-stage process. It begins by generating a diverse set of problem-solving perspectives, each tailored to the specific request. This diversity matters because it lets the model explore several different approaches to the same problem. The model then contrasts the resulting solutions, focusing on where and how they differ. These contrasts surface insights that a single-perspective approach would likely miss.
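
The perspective-and-contrast stage can be sketched in Python as follows. This is a simplified illustration, not the researchers' implementation: `LLM` stands in for any hypothetical function that maps a prompt to a text reply, the prompt wording is invented, and `toy_llm` is a deterministic stub so the example runs without a real model. The sketch asks for one solution per perspective, then requests a comparison only for pairs of solutions that disagree.

```python
from itertools import combinations
from typing import Callable

# Hypothetical model interface: any function that maps a prompt to a text reply.
LLM = Callable[[str], str]


def solve_from_perspectives(llm: LLM, task: str, perspectives: list[str]) -> dict[str, str]:
    """Solve the same task once per perspective, keyed by perspective."""
    return {p: llm(f"Adopt this perspective: {p}\nSolve: {task}").strip() for p in perspectives}


def contrast(llm: LLM, answers: dict[str, str]) -> list[str]:
    """Ask the model to explain the differences between every pair of disagreeing answers."""
    notes = []
    for (p1, a1), (p2, a2) in combinations(answers.items(), 2):
        if a1 == a2:
            continue  # identical answers offer nothing to contrast
        prompt = (
            "List the discrepancies between these two solutions.\n"
            f"[{p1}] {a1}\n[{p2}] {a2}"
        )
        notes.append(llm(prompt))
    return notes


def toy_llm(prompt: str) -> str:
    """Deterministic stand-in for a real model (hypothetical, for illustration only)."""
    if prompt.startswith("Adopt"):
        # Only the "careful" perspective reaches the right product.
        return "42" if "careful" in prompt else "41"
    return "The solutions disagree on the final product."


perspectives = ["careful step-by-step", "quick estimate", "work backwards"]
answers = solve_from_perspectives(toy_llm, "What is 6 * 7?", perspectives)
discrepancies = contrast(toy_llm, answers)
```

Skipping pairs with identical answers is a design choice of this sketch: when two perspectives agree, there is no discrepancy for the model to examine.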

Next, the model synthesizes these insights into a detailed checklist. The checklist directs the model to re-examine its responses carefully, with particular emphasis on resolving the discrepancies it identified. This step forces the model to scrutinize its initial answers, making it more aware of its errors and, crucially, prompting it to fix them. Beyond helping to identify errors, the checklist keeps the model's introspection focused and effective.
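
A minimal Python sketch of the checklist stage follows, with the same caveats: it is not the authors' code, `LLM` is a hypothetical prompt-to-text callable, the prompts and the "- " bullet format for checklist items are assumptions, and `toy_llm` is a stub that makes the example runnable offline. The model first condenses the contrast notes into checklist items and then re-examines its draft answer against them.

```python
from typing import Callable

# Hypothetical model interface: any function that maps a prompt to a text reply.
LLM = Callable[[str], str]


def build_checklist(llm: LLM, discrepancies: list[str]) -> list[str]:
    """Condense contrast notes into checklist items; expects one '- ' bullet per line."""
    reply = llm(
        "Summarize these discrepancies as a checklist of points to verify:\n"
        + "\n".join(discrepancies)
    )
    return [line[2:].strip() for line in reply.splitlines() if line.startswith("- ")]


def reexamine(llm: LLM, task: str, draft: str, checklist: list[str]) -> str:
    """Re-check the draft against each checklist item and return the revised answer."""
    if not checklist:
        return draft  # no discrepancies to resolve, so keep the draft
    items = "\n".join(f"{i}. {item}" for i, item in enumerate(checklist, start=1))
    prompt = (
        f"Task: {task}\nDraft answer: {draft}\n"
        f"Verify the draft against this checklist:\n{items}\n"
        "Return only the corrected answer."
    )
    return llm(prompt).strip()


def toy_llm(prompt: str) -> str:
    """Deterministic stand-in for a real model (hypothetical, for illustration only)."""
    if prompt.startswith("Summarize"):
        return "Checklist:\n- Recompute 6 * 7 directly\n- Confirm no digit was dropped"
    if "Recompute 6 * 7 directly" in prompt:
        return "42"
    return "41"


checklist = build_checklist(toy_llm, ["One solution says 41, another says 42."])
revised = reexamine(toy_llm, "What is 6 * 7?", "41", checklist)
```

Returning the draft unchanged when the checklist is empty is an assumption of this sketch; it reflects the idea that revision is driven by the discrepancies found in the contrast step.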

Across a range of reasoning and translation tasks, Self-Contrast noticeably improved the reflective ability of LLMs. Compared with conventional methods, it reduced bias and made the models' self-reflection more accurate and more stable. These gains held consistently across different models and tasks, which points to the method's versatility and effectiveness.

Conclusion:

Self-Contrast is a notable advance for the AI market. By improving the precision and reliability of Large Language Models, it could make them more dependable across a wide range of applications and domains. As businesses rely more heavily on AI-powered solutions, adopting Self-Contrast could yield more accurate and trustworthy AI-driven outcomes, supporting growth and innovation in the market.