TL;DR:

- Researchers address the challenge of consistent character generation from text prompts.

- Traditional methods yield inconsistent results, hindering creative projects.

- The study introduces a fully automated, systematic approach for reliable character creation.

- Clustering images with common traits forms the basis of their method.

- Iterative refinement ensures higher consistency in output graphics.

- Rigorous user research and objective evaluations validate the effectiveness of their technique.

- The research offers a unique solution for brand identity, communication, and emotional connection.

- It empowers creators to generate consistent characters effortlessly.

Main AI News:

In today’s fast-paced world, maintaining consistency in visual content across various contexts is paramount for brand identity, effective communication, and emotional connection. Whether it’s book illustrations, brand logos, comics, or presentations, the ability to generate consistent characters from a mere text prompt has been a persistent challenge for text-to-image generative models.

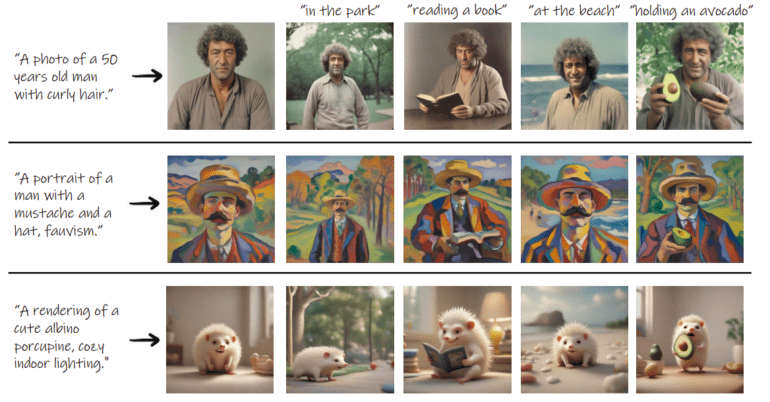

This research tackles consistent character generation directly. The core difficulty is producing the same recognizable character across different situations and input prompts.

Traditional methods often fall short, resulting in a myriad of inconsistent outcomes, leaving creators frustrated and limited in their creative endeavors. Imagine an illustrator attempting to craft a Plasticine cat figure based on a textual description. The conventional text-to-image models struggle to deliver consistent results, as shown in Figure 2.

This study instead demonstrates a systematic, fully automated strategy for creating consistent characters. It replaces labor-intensive, ad hoc workarounds, such as prompting with visual variants or celebrity names, with a procedure built on the text-to-image model itself.

Researchers from Google Research, The Hebrew University of Jerusalem, Tel Aviv University, and Reichman University join forces to tackle the challenge head-on. They assert that the ability to create a consistent character is often more critical than a faithful visual replication. Their approach centers on extracting a coherent persona from a natural language description, rather than relying on photos of the target character as input.

The key to their approach is clustering generated images that share common traits and building a representation that captures the “common ground” among them. The procedure embeds images in a Euclidean space using pre-trained feature extractors and is repeated, with each pass yielding more consistent output graphics while remaining faithful to the original input prompt.

The iterative refinement process continues until convergence, resulting in a gallery of images that not only mirrors the input prompt but also exhibits remarkable consistency. Rigorous user research and objective evaluations against various baselines validate the effectiveness of this innovative technique.
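The cluster-then-refine loop described above can be illustrated with a toy sketch. This is not the researchers' implementation: the article does not say how a cluster is selected or how the model is updated, so the sketch assumes plain k-means, picks the tightest cluster, and "refines" by re-centering a simulated generator on it. The names `generate_embeddings`, `cohesion`, and `refine_identity`, and every parameter value, are hypothetical. Random vectors stand in for the embeddings a pre-trained feature extractor would produce from generated images.

```python
import math
import random


def generate_embeddings(center, spread, n, rng):
    """Hypothetical stand-in for 'generate n images, then embed each one'."""
    return [[c + rng.gauss(0.0, spread) for c in center] for _ in range(n)]


def mean_vector(points):
    dim = len(points[0])
    return [sum(p[d] for p in points) / len(points) for d in range(dim)]


def kmeans(points, k, rng, iters=20):
    """Plain k-means with Euclidean distance; returns the non-empty clusters."""
    centroids = rng.sample(points, k)
    clusters = []
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Keep the previous centroid if a cluster ended up empty.
        centroids = [mean_vector(c) if c else centroids[i]
                     for i, c in enumerate(clusters)]
    return [c for c in clusters if c]


def cohesion(cluster):
    """Mean distance of members to their centroid (lower = more consistent)."""
    centre = mean_vector(cluster)
    return sum(math.dist(p, centre) for p in cluster) / len(cluster)


def refine_identity(dim=8, n_images=60, k=4, min_size=5,
                    tol=0.1, max_rounds=40, seed=0):
    rng = random.Random(seed)
    center, spread = [0.0] * dim, 1.0  # initial, unrefined "generator"
    history = []
    for _ in range(max_rounds):
        embeddings = generate_embeddings(center, spread, n_images, rng)
        # Assumption: ignore tiny clusters, then keep the tightest one.
        clusters = [c for c in kmeans(embeddings, k, rng) if len(c) >= min_size]
        best = min(clusters, key=cohesion)
        tightness = cohesion(best)
        history.append(tightness)
        # Assumed "refinement": re-center the simulated generator on the
        # chosen cluster and narrow its spread to match that cluster.
        center, spread = mean_vector(best), tightness / math.sqrt(dim)
        if tightness < tol:  # treat a tight enough cluster as convergence
            break
    return center, history


if __name__ == "__main__":
    _, history = refine_identity()
    print(f"rounds: {len(history)}")
    print(f"first-round spread: {history[0]:.3f}, last-round spread: {history[-1]:.3f}")
```

In this simulation, the spread of the chosen cluster shrinks from round to round, which mirrors the article's claim that repetition increases consistency. In a real system, the re-centering step would be replaced by whatever update the authors apply to the text-to-image model.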

Consistency of identity: given the prompt “a Plasticine of a cute baby cat with big eyes,” a traditional text-to-image diffusion model produces several different cats, each matching the input text, while the technique yields the same cat. Source: Marktechpost Media Inc.

Conclusion:

This groundbreaking research on automated character generation holds immense potential for the market. It paves the way for creators in various industries to establish and maintain visual content consistency effortlessly. The systematic approach eliminates the need for labor-intensive methods, offering a competitive advantage for businesses seeking to enhance brand identity, communication, and user engagement.