TL;DR:

- Large language models (LLMs) such as GPT-3, Codex, PaLM, LLaMA, ChatGPT, and GPT-4 have made exceptional advances in in-context learning and code generation.

- Despite these achievements, LLMs still struggle to access up-to-date information, produce precise mathematical solutions, and reason reliably across complex logical chains.

- Researchers have explored integrating external tools to enhance LLM performance and alleviate their memorization burden.

- CREATOR is a novel framework that empowers LLMs to become tool developers, creating and refining tools based on existing parameters.

- CREATOR aims to diversify the toolset, improve tool utilization, and introduce automated error-handling mechanisms.

- Initial tests show that ChatGPT built on CREATOR outperforms chain-of-thought, program-of-thought, and tool-using baselines in accuracy on math and tabular reasoning benchmarks.

- The introduction of the Creation Challenge dataset further highlights LLMs’ tool-building capabilities and their potential for knowledge transfer.

Main AI News:

Large language models (LLMs) have revolutionized the field of artificial intelligence, propelling it closer to artificial general intelligence. GPT-3, Codex, PaLM, LLaMA, ChatGPT, and the more recent GPT-4 have shown remarkable advances in in-context learning, code generation, and a wide range of other natural language processing (NLP) tasks.

However, these LLMs still have notable limitations: they cannot respond to up-to-date information on their own, their mathematical solutions are often imprecise, and their reasoning becomes unstable over long, complex logical chains. In response, researchers have explored integrating external tools to boost LLM performance and reduce how much the models must memorize.

One approach equips LLMs with tools such as web search engines or question-answering (QA) systems, enabling them to draw on external resources when solving problems. Recent studies have also incorporated other external tools, including GitHub resources, neural network models (such as those available through Hugging Face), and code interpreters (such as the Python interpreter). Integrating these tools into LLMs, however, brings its own challenges. Specifically, the researchers identify three critical areas that demand attention:

- Diversifying the Toolset: While the range of possible new tasks is virtually limitless, current efforts focus on a small number of tools. As a result, finding an existing tool suited to a new problem can be a daunting task.

- Enhancing Tool Utilization: Working out the most effective way to use a tool is inherently complex for LLMs. Handling a task requires extensive planning, which places a heavy cognitive load on the model and incurs a high learning cost.

- Automated Error Handling: Existing tool-use pipelines lack a well-defined, automated error-handling mechanism, so their accuracy and robustness need further development before their execution results can be considered reliable.

In light of these challenges, researchers from Tsinghua University and the University of Illinois Urbana-Champaign propose a fresh perspective: making LLMs tool developers rather than mere tool users. Their framework, CREATOR, leverages LLMs' capacity to create and refine tools based on existing parameters, with the aim of improving problem-solving accuracy and flexibility. CREATOR differs from traditional tool-using frameworks in that it diversifies the toolset, decouples levels of rationale (abstract reasoning when creating a tool versus concrete reasoning when applying it), and improves overall robustness and correctness.

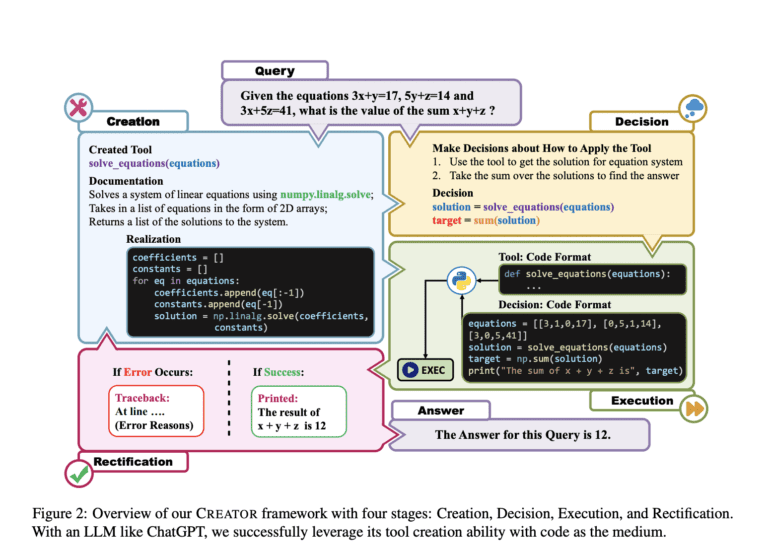

The CREATOR framework comprises four distinct steps:

- Creation: Drawing on the LLM's abstract reasoning capabilities, broadly applicable tools are developed, each consisting of careful documentation and a code implementation.

- Decision: Selecting the appropriate tools and determining when and how to apply them for optimal problem-solving.

- Implementation: Executing the program where the LLM utilizes the selected tools to address the given problem.

- Rectification: Based on the execution outcomes, refining the tools and adjusting the decision-making process for further improvement.
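The four steps can be illustrated with a minimal control-flow sketch. This is not the authors' implementation: `fake_llm_create`, `fake_llm_decide`, `run`, and `creator_loop` are hypothetical names, and the "LLM" is a pair of hard-coded stubs so the loop runs offline. The first created tool contains a deliberate bug, and the rectification step feeds the resulting error message back into the next creation round.

```python
import math
from typing import Optional

# Hypothetical stand-ins for LLM calls. In CREATOR these outputs would come
# from prompting a model such as ChatGPT; here they are hard-coded strings.

def fake_llm_create(problem: str, feedback: Optional[str]) -> str:
    """Creation: return tool source code (documentation + implementation)."""
    if feedback is None:
        # First attempt has a deliberate bug: math.sqroot does not exist.
        body = "    return (-b + math.sqroot(b * b - 4 * a * c)) / (2 * a)\n"
    else:
        # After seeing the error message, the "model" fixes the call.
        body = "    return (-b + math.sqrt(b * b - 4 * a * c)) / (2 * a)\n"
    return (
        "def solve_quadratic(a, b, c):\n"
        '    """Return the larger real root of a*x**2 + b*x + c = 0."""\n'
        + body
    )

def fake_llm_decide(problem: str) -> str:
    """Decision: return code that applies the tool to this specific problem."""
    return "answer = solve_quadratic(1, -3, 2)"

def run(tool_src: str, call_src: str) -> float:
    """Implementation: execute the tool definition, then the decision code."""
    namespace = {"math": math}
    exec(tool_src, namespace)
    exec(call_src, namespace)
    return namespace["answer"]

def creator_loop(problem: str, max_rounds: int = 3) -> float:
    """Create -> decide -> implement, rectifying on execution errors."""
    feedback: Optional[str] = None
    for _ in range(max_rounds):
        tool_src = fake_llm_create(problem, feedback)
        call_src = fake_llm_decide(problem)
        try:
            return run(tool_src, call_src)
        except Exception as exc:
            # Rectification: pass the error back to the next creation round.
            feedback = f"{type(exc).__name__}: {exc}"
    raise RuntimeError(f"no working tool after {max_rounds} rounds: {feedback}")
```

Running `creator_loop("x**2 - 3x + 2 = 0")` fails on the first round with an `AttributeError` and returns `2.0` after one rectification round. Feeding only the error message back into creation is a simplification; the paper's rectification step may revise both the tool and the decision.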

Initial tests of CREATOR on the MATH and TabMWP benchmarks showed strong results. MATH contains challenging and diverse competition mathematics problems, while TabMWP consists of math word problems grounded in tables. ChatGPT built on CREATOR outperformed chain-of-thought (CoT), program-of-thought (PoT), and tool-using baselines by significant margins, reaching an average accuracy of 59.7% on MATH and 94.7% on TabMWP.

To further evaluate LLMs' ability to create tools, the researchers introduced the Creation Challenge dataset, a set of novel and demanding problems that are difficult to solve with existing tools or code packages. Using this dataset, they demonstrated the value of LLMs' tool-building capabilities. They also report experimental findings and case studies illustrating how tool creation facilitates knowledge transfer and helps LLMs adapt to a wider range of problem contexts.
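The knowledge-transfer idea can be sketched as a small tool library: a tool is created once, stored as documentation plus code, and then reused in an unrelated problem context. The names `TOOL_LIBRARY`, `register_tool`, `load_tool`, and `weighted_mean` are hypothetical illustrations, not part of CREATOR or the Creation Challenge dataset.

```python
from typing import Callable, Dict

# Hypothetical tool library: created tools are kept as source text
# (documentation + code) so they can be re-loaded for new problems.
TOOL_LIBRARY: Dict[str, str] = {}

def register_tool(name: str, source: str) -> None:
    """Store a created tool's source under its function name."""
    TOOL_LIBRARY[name] = source

def load_tool(name: str) -> Callable:
    """Execute the stored source and return the named function."""
    namespace: dict = {}
    exec(TOOL_LIBRARY[name], namespace)
    return namespace[name]

# A tool "created" once, for a problem about course grades weighted by credits.
register_tool(
    "weighted_mean",
    "def weighted_mean(values, weights):\n"
    '    """Return sum(v * w) / sum(w) for paired values and weights."""\n'
    "    total = sum(weights)\n"
    "    if total == 0:\n"
    "        raise ValueError('weights must not sum to zero')\n"
    "    return sum(v * w for v, w in zip(values, weights)) / total\n",
)

weighted_mean = load_tool("weighted_mean")

# Original context: exam scores 80 and 90 worth 1 and 3 credits.
grade = weighted_mean([80, 90], [1, 3])    # (80 + 270) / 4 = 87.5

# New context: average price per kg over two 5 kg purchases.
price = weighted_mean([2.0, 3.0], [5, 5])  # (10 + 15) / 10 = 2.5
```

Storing tools as source text, rather than only as live functions, mirrors the idea that a created tool is documentation plus code that can be inspected and re-executed later.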

Conclusion:

The development of CREATOR, which enables LLMs to create their own tools, marks a notable advance in the AI market. The framework targets key limitations of current LLMs, aiming to help them respond to up-to-date information, produce more accurate mathematical solutions, and reason across complex logical chains. By turning LLMs into tool developers, CREATOR opens up new opportunities for more capable and flexible problem-solving. This approach could drive further progress toward artificial general intelligence and change how AI systems tackle complex problems across industries.