TL;DR:

- Generative AI, fueled by diffusion models, has undergone a revolution in recent years.

- Video generation, powered by deep learning and generative models, creates lifelike content.

- Traditional methods relied on visual cues, but recent advancements embraced textual descriptions and trajectory data.

- DragNUWA emerges as a trajectory-aware video generation model with precise control.

- Its formula: semantic, spatial and temporal control through textual descriptions, images, and trajectories.

- Textual descriptions inject meaning, images provide spatial context, and trajectory control is DragNUWA’s innovation.

- Open-domain trajectory control is DragNUWA’s forte, overcoming complexity with inventive strategies.

- The integration of text, image, and trajectory control empowers users for diverse video scenarios.

Main AI News:

Generative AI has witnessed a monumental evolution over the past couple of years, largely attributed to the triumphant emergence of large-scale diffusion models. These cutting-edge models represent a formidable branch of generative technology capable of conjuring up lifelike images, text, and various forms of data. The fundamental concept behind diffusion models involves initiating from a bedrock of random noise, whether in the form of an image or text, and meticulously sculpting it over time, much akin to how reality itself takes shape. These models, typically honed on vast troves of real-world data, have birthed a paradigm shift in artificial intelligence.

Concurrently, the realm of video generation has been the crucible of remarkable advancements in recent years. It boasts the captivating prowess of breathing life into dynamic video content that mirrors reality itself. This transformative capability is the fruit of deep learning and generative models that have conspired to craft videos ranging from the surreal realms of dreams to astoundingly realistic simulations of our tangible world.

The prospect of harnessing the deep learning juggernaut to manipulate videos with meticulous control over their content, spatial configuration, and chronological progression holds immense potential across a myriad of applications, spanning from entertainment to education and beyond.

Traditionally, research in this domain revolved around visual cues, heavily reliant on initial frame images to steer the course of subsequent video generation. However, this approach grappled with its own set of limitations, particularly in predicting the intricate temporal dynamics of videos, which encompassed nuances like camera movements and the intricate dance of object trajectories. To surmount these challenges, recent research has embraced a paradigm shift by introducing textual descriptions and trajectory data as supplementary control mechanisms. While these innovations marked significant progress, they, too, carried their own set of limitations.

Enter DragNUWA—a formidable solution to overcome these constraints.

DragNUWA stands tall as a trajectory-aware video generation model equipped with meticulous control. It effortlessly amalgamates textual input, imagery, and trajectory data to furnish robust and user-friendly controllability.

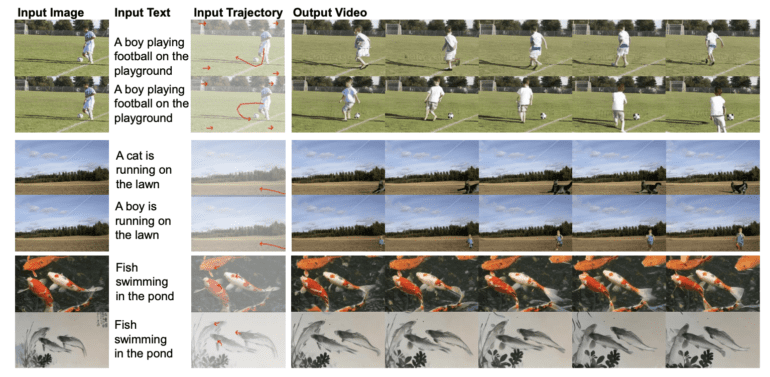

At the heart of DragNUWA’s prowess lies a straightforward formula built upon three pillars: semantic, spatial, and temporal control, governed by textual descriptions, images, and trajectories, respectively.

Textual control finds expression in the form of textual descriptions. This infusion of meaning and semantics breathes life into video generation, enabling the model to grasp and articulate the underlying essence of a video. A subtle nuance that can distinguish between depicting a mundane fish swimming and a masterpiece capturing the essence of piscine grace.

Visual control, on the other hand, finds its tool in imagery. Images offer the indispensable spatial context and intricacy required to faithfully represent objects and scenes within the video canvas. They emerge as vital complements to textual descriptions, infusing depth and lucidity into the generated content.

Yet, the true distinction of DragNUWA is revealed in its trajectory control. It boldly ventures into the realm of open-domain trajectory control. Where previous models stumbled in the face of trajectory complexity, DragNUWA stands resolute, armed with a Trajectory Sampler (TS), Multiscale Fusion (MF), and Adaptive Training (AT), devised to confront this challenge head-on. This innovation ushers in an era where videos can seamlessly adopt intricate, open-domain trajectories, simulate authentic camera movements, and orchestrate intricate object interactions.

Conclusion:

DragNUWA’s emergence signifies a seismic shift in the market for AI-driven video generation. Its prowess in combining text, imagery, and trajectory control promises unparalleled precision and opens doors to a wide array of applications, fundamentally reshaping the landscape of video content creation and manipulation. Businesses and industries across entertainment, education, and more should closely monitor and leverage the capabilities offered by DragNUWA to remain at the forefront of this evolving market.