- Sourcegraph’s coding assistant, Cody, now supports Anthropic Claude 3 LLMs alongside other models like OpenAI GPT-4.

- Claude 3 models exhibit superior code generation performance and offer a larger token context window, initially at 200K tokens.

- Cody’s token input limit is approximately 7K tokens, but Sourcegraph plans to expand this in the future.

- Over 55% of Cody Pro users switched to Claude 3 models within a month of their launch.

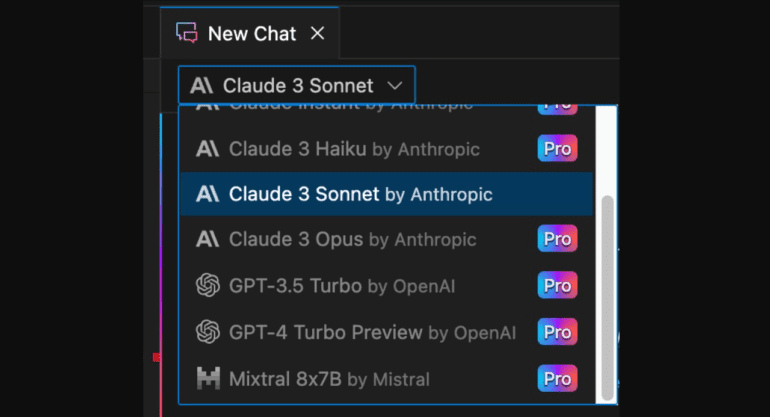

- Users on Cody’s free plan now default to Claude 3 Sonnet, positioned between Opus and Haiku models.

- Cody supports various IDEs, including VS Code, Neovim, and the JetBrains family, with VS Code being the stable option.

- It offers features like coding chat, code documentation, identifying code smells, and unit test generation.

- Cody’s core is open source on GitHub, though much of Sourcegraph’s code transitioned to an enterprise license.

- The pluggable LLM feature in Cody’s enterprise edition lets customers bring their own LLM license.

Main AI News:

Sourcegraph’s coding assistant, Cody, now supports Anthropic’s Claude 3 LLMs alongside OpenAI GPT-4 and Azure OpenAI. According to Sourcegraph, “all Claude 3 models exhibit superior performance in code generation.” One notable enhancement of the Claude 3 models is their larger token context window, which sets the maximum input size for the LLM. Anthropic states that Claude 3 models initially offer a 200K-token context window, with the underlying models capable of accepting inputs of more than 1 million tokens.

Despite these advancements, Cody’s maximum token input, approximately 7K tokens, seems restrictive. Sourcegraph attributes Cody’s limit to the potential deterioration in response quality with larger code contexts. However, they express confidence in Claude 3’s ability to handle substantial contexts successfully, announcing plans to significantly broaden Cody’s context window in the near future.
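To make the effect of a roughly 7K-token limit concrete, here is a minimal sketch of fitting candidate code snippets into a fixed token budget. It is illustrative only: the article does not describe how Cody actually selects context, and the function names, the relevance scores, and the four-characters-per-token estimate are all assumptions made for this example.

```python
# Illustrative sketch only. How Cody really chooses context is not described
# in this article; every name and heuristic below is hypothetical.

CONTEXT_BUDGET_TOKENS = 7_000  # the approximate limit cited above


def estimate_tokens(text: str) -> int:
    """Rough estimate assuming about four characters per token."""
    return max(1, len(text) // 4)


def pack_context(
    snippets: list[tuple[float, str]],
    budget: int = CONTEXT_BUDGET_TOKENS,
) -> list[str]:
    """Greedily keep the highest-scoring snippets that still fit the budget.

    Each snippet is a (relevance_score, text) pair. Snippets that would
    push the running total over the budget are skipped.
    """
    chosen: list[str] = []
    used = 0
    for _score, text in sorted(snippets, key=lambda s: s[0], reverse=True):
        cost = estimate_tokens(text)
        if used + cost <= budget:
            chosen.append(text)
            used += cost
    return chosen


if __name__ == "__main__":
    candidates = [
        (0.9, "def parse(line): ..." * 200),
        (0.7, "class Config: ..." * 800),
        (0.4, "README excerpt " * 1500),
    ]
    kept = pack_context(candidates)
    print(f"kept {len(kept)} of {len(candidates)} snippets")
```

With a small budget, lower-ranked material is simply left out. A larger context window would let more of the codebase reach the model, provided response quality holds up at that size.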

In comparison, OpenAI’s GPT-4 has a maximum context window of 128K tokens. Sourcegraph reports that, following an initial trial, over 55 percent of Cody Pro users, most of whom had been using GPT-4, switched to the new Claude 3 models within a month of the launch. For users on Cody’s free plan, which offers 500 autocompletions and 20 messages and commands monthly, Claude 3 Sonnet is now the default model. Sonnet sits between Opus, the premium model, and Haiku, the most basic and cost-effective option, making it the balanced choice within the Claude 3 lineup.

Cody supports several integrated development environments (IDEs), including VS Code, Neovim, and the JetBrains family, which includes IntelliJ IDEA and Android Studio. The VS Code extension is the designated stable option. Cody’s features include coding chat, code documentation and explanation, identification of code smells, and unit test generation. Its code completion feature can autocomplete individual lines or entire functions across a wide range of programming languages, though response quality may be lower for more niche languages.

Although code analysis is performed by the LLM, Sourcegraph states in its FAQ that third-party LLM providers do not train their models on the user’s code. GitHub Copilot remains the most prominent AI coding assistant, but the landscape now includes several alternatives, such as Google’s Gemini Code Assist, Amazon CodeWhisperer, Codeium, JetBrains AI Assistant, Tabnine, and others.

Two distinguishing features of Cody merit attention. First, its core is open source on GitHub under the Apache 2 license. However, in June 2023, Sourcegraph moved much of its code, excluding the community edition of Cody, to an enterprise license, citing a commitment to charging companies while keeping open source tools available for individual developers. Second, Cody’s pluggable LLM feature, available in the enterprise edition, lets customers bring their own LLM license, giving teams flexibility in which model they use.

Conclusion:

Sourcegraph’s integration of Anthropic’s Claude 3 models into Cody marks a notable advance in coding assistance technology. The stronger code generation performance and larger context window of the Claude 3 models, coupled with plans to raise Cody’s token input limit, position Cody as a formidable contender in the AI coding assistant market. The move strengthens Sourcegraph’s competitive position and underscores the escalating competition among AI coding assistants, which is driving innovation and choice for developers.