- University of Alberta researchers identified a major limitation in current deep-learning systems: loss of learning ability during extended training.

- The “catastrophic forgetting” issue arises when AI systems lose proficiency in previously learned tasks after being trained on new data.

- AI systems also experience a “loss of plasticity” when trained sequentially on multiple tasks, which hinders their ability to learn further.

- Researchers propose reinitializing network weights between training sessions to maintain AI system plasticity and enable continuous learning.

- This solution could lead to more adaptive, accurate, and versatile AI applications.

Main AI News:

A group of AI researchers and computer scientists at the University of Alberta has uncovered a critical limitation in current deep-learning systems: when subjected to extended training on new data, these artificial networks lose their capacity to learn. Published in Nature, their study also proposes a way to maintain plasticity in both supervised and reinforcement learning systems, so that they can continue learning effectively.

As AI technology has advanced rapidly, particularly with the rise of large language models (LLMs) that power intelligent chatbots, one significant challenge has remained unresolved. While capable of producing sophisticated responses, these systems have been unable to learn and improve from new data once deployed. This limitation prevents them from becoming more accurate and refining their capabilities.

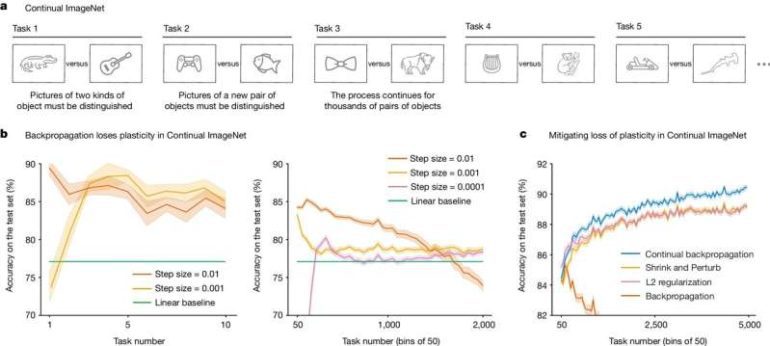

The research team at Alberta examined conventional neural networks’ ability to learn beyond their initial training. One failure mode in this setting is the well-known problem of “catastrophic forgetting,” which occurs when a system loses its proficiency in previously learned tasks after being exposed to new information. Moreover, the team found that when these systems are trained sequentially on multiple tasks, they suffer from a “loss of plasticity,” effectively losing their ability to learn altogether.

To address this, the researchers propose reinitializing the network weights between training sessions. Weights in artificial neural networks dictate the strength of the connections between nodes, with stronger weights signifying more important information. By resetting these weights using the same methods employed during the initial system setup, the team suggests, AI systems can maintain their plasticity and so preserve their capacity to learn and adapt to new data.
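As a rough illustration of the idea, the sketch below resets part of a single layer between training sessions by redrawing weights from the same initializer used at setup. It is a minimal sketch and not the study's implementation: the function names `init_layer` and `reinit_units`, the Gaussian initializer, and the choice to reset only a random fraction of units (so the rest of the layer keeps what it has learned) are all assumptions made for this example.

```python
import numpy as np

def init_layer(rng, fan_in, fan_out):
    """Gaussian initializer scaled by fan-in (an illustrative choice)."""
    weights = rng.normal(0.0, np.sqrt(2.0 / fan_in), size=(fan_in, fan_out))
    bias = np.zeros(fan_out)
    return weights, bias

def reinit_units(rng, weights, bias, fraction):
    """Redraw the incoming weights of a random fraction of units.

    The fresh values come from the same initializer used at setup.
    All other units are left untouched. Returns new arrays and the
    indices of the units that were reset.
    """
    fan_in, fan_out = weights.shape
    n_reset = int(round(fraction * fan_out))
    reset_idx = rng.choice(fan_out, size=n_reset, replace=False)
    fresh_w, fresh_b = init_layer(rng, fan_in, fan_out)
    new_w, new_b = weights.copy(), bias.copy()
    new_w[:, reset_idx] = fresh_w[:, reset_idx]
    new_b[reset_idx] = fresh_b[reset_idx]
    return new_w, new_b, reset_idx

# Hypothetical usage: one layer with 8 inputs and 16 units.
rng = np.random.default_rng(0)
w, b = init_layer(rng, 8, 16)
w = w + 5.0  # stand-in for weights that drifted during a training session
new_w, new_b, reset_idx = reinit_units(rng, w, b, fraction=0.25)
```

In a real training loop, a call like `reinit_units` would sit between sessions, after one task finishes and before the next begins. Which units to reset and how many are design choices this sketch leaves open.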

The approach offers a promising avenue for developing more adaptive and resilient AI systems, which could in turn lead to more accurate and versatile applications.

Conclusion:

This advance in maintaining plasticity within AI systems could prove significant for the market, because it addresses one of the key challenges hindering the development of truly adaptive AI solutions. As AI technologies become increasingly integral to business operations, the ability to learn and improve continuously will be crucial to maintaining a competitive edge. If the approach holds up in practice, it could increase the value of AI systems and open up new opportunities for innovation and efficiency across industries from finance to healthcare. Companies that integrate this technology may see improved decision-making, reduced operational costs, and a stronger position in the market.