TL;DR:

- IriusRisk introduces automated threat modeling for machine learning systems.

- Machine learning technology integration necessitates proactive risk assessment.

- The AI & ML Security Library aids in understanding and mitigating ML-related security risks.

- Key questions include data origin, continual learning, and data confidentiality.

- The library caters to developers, engineers, and designers, enhancing accessibility.

- Available to both IriusRisk customers and community edition users.

Main AI News:

As organizations work to improve security in software development, the concept of “shift left” has gained prominence, urging them to begin security discussions earlier in the development life cycle. Within this framework, threat modeling is a key practice for identifying security vulnerabilities in software designs. As developers increasingly integrate machine learning into their applications, understanding the threats associated with this technology becomes essential.

Gary McGraw, co-founder of the Berryville Institute of Machine Learning, stresses that adopting new technologies such as machine learning carries inherent risks. He notes, “People are still grappling with the whole idea that when you use that very new technology [machine learning], it brings along a bunch of risk, as well.” Addressing these risks effectively is a challenge that organizations must confront.

While discussion of machine learning risks has proliferated, the harder problem is devising actionable strategies to mitigate them. Threat modeling, the practice of identifying potential threats that can jeopardize an organization’s security, provides a systematic approach to analyzing security risks in machine learning systems. These risks include data poisoning, input manipulation, and data extraction. By integrating threat modeling into the development process, developers can identify security flaws in their designs and ultimately reduce the time spent on security testing before production. Threat modeling is among the techniques recommended by NIST’s Guidelines on Minimum Standards for Developer Verification of Software.

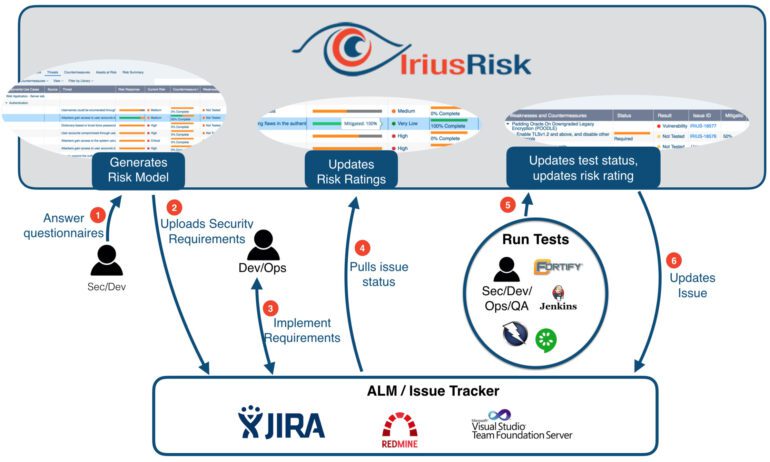

IriusRisk has stepped up to address this challenge by automating both threat modeling and architecture risk analysis. Developers and security teams can incorporate their code into the tool, which then generates diagrams and threat models. With the aid of threat modeling templates, even people unfamiliar with diagramming tools or risk analysis can take part in the process.

Furthermore, the introduction of the AI & ML Security Library by IriusRisk empowers organizations planning to implement machine learning systems. This library facilitates threat modeling, enabling a comprehensive understanding of the associated security risks and strategies to mitigate them.

“We’re finally getting around to building machinery that people can use to address the risk and control the risk,” says McGraw, who also serves on IriusRisk’s advisory board. When machine learning is part of a system design, organizations that use IriusRisk can manage and mitigate the associated risks proactively.

The Essence of ML Threat Modeling

The AI & ML Security Library from IriusRisk equips organizations with the means to ask critical questions, including:

- Data Origin: Determining the source of the data used to train machine learning models and assessing whether it could potentially contain incorrect or malicious data.

- Continual Learning: Evaluating how machine learning systems continue to learn once they are in production. Online machine learning systems that continually adapt based on user input pose different risks compared to those that do not.

- Data Confidentiality: Examining whether confidential information could be extracted from the model. Safeguarding confidential data used in machine learning systems is crucial to prevent unauthorized access.
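As a rough illustration of how the three questions above can feed a threat model, the following minimal Python sketch maps yes/no answers to the threat categories named earlier in this article. It is not IriusRisk’s API or the library’s actual rule set: the `MLSystemAnswers` class, the `candidate_threats` function, and the question-to-threat mapping are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MLSystemAnswers:
    """Yes/no answers to the three design questions (hypothetical structure)."""
    training_data_untrusted: bool       # Data origin: could the data be incorrect or malicious?
    learns_in_production: bool          # Continual learning: does the model adapt to user input?
    trained_on_confidential_data: bool  # Data confidentiality: is sensitive data involved?


def candidate_threats(answers: MLSystemAnswers) -> list[tuple[str, str]]:
    """Return (question, threat) pairs worth reviewing.

    The mapping below is an illustrative assumption, not an official taxonomy.
    """
    findings = []
    if answers.training_data_untrusted:
        findings.append(("data origin", "data poisoning"))
    if answers.learns_in_production:
        # Assumption: a system that keeps learning from user input can be
        # poisoned through that input after deployment.
        findings.append(("continual learning", "data poisoning via production input"))
    if answers.trained_on_confidential_data:
        findings.append(("data confidentiality", "data extraction"))
    return findings


if __name__ == "__main__":
    example = MLSystemAnswers(
        training_data_untrusted=True,
        learns_in_production=False,
        trained_on_confidential_data=True,
    )
    for question, threat in candidate_threats(example):
        print(f"{question}: review for {threat}")
```

A real threat model would attach countermeasures and architectural context to each finding; the sketch only shows how answering the questions early surfaces which risks deserve attention.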

The AI & ML Security Library builds on the BIML ML Security Risk Framework, which comprises a taxonomy of machine learning threats and an architectural risk assessment of typical machine learning components. The framework is designed for developers, engineers, and designers involved in early project phases, and IriusRisk’s library makes it accessible to machine learning practitioners more broadly.

The AI & ML Security Library is available to IriusRisk customers as well as those utilizing the community edition of the platform.

Time to Embrace Threat Modeling

The creation of the AI & ML Security Library stems from heightened interest among organizations, particularly in the finance and technology sectors, regarding the analysis and security of AI and ML systems. Stephen de Vries, CEO of IriusRisk, notes the value of incorporating threat modeling in the design phase of these novel projects, enabling teams to grasp security requirements early on.

However, the AI & ML Security Library only benefits organizations that have visibility into their machine learning applications. Shadow machine learning, akin to shadow IT, arises when different departments experiment with applications and tools without comprehensive oversight. Bridging the gap between that individual usage and organizational awareness of the risks is imperative.

Conclusion:

While not all organizations currently incorporate threat modeling into their software design processes, the availability of tools like IriusRisk can revolutionize security practices. For those already implementing mature threat modeling programs, embracing machine learning threat modeling will further enhance their security posture. As McGraw succinctly puts it, “What about the people who aren’t doing threat modeling? Maybe they should start. It’s not new. It’s time to do it.”