- Vision-language models (VLMs) are evolving rapidly, promising enhanced visual assistance for the visually impaired.

- Challenges include multi-object hallucination, where VLMs inaccurately describe non-existent objects in images.

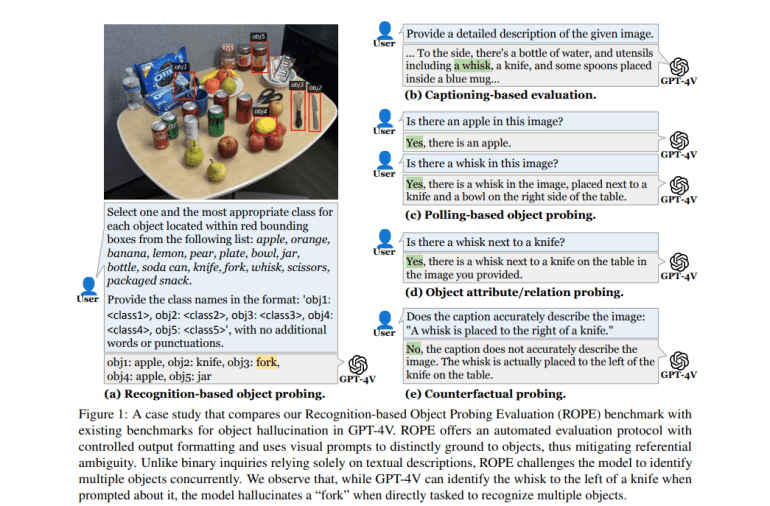

- The ROPE protocol evaluates VLMs’ performance under various conditions, highlighting issues like object salience and dataset biases.

- Cultural inclusivity remains a crucial aspect, with studies emphasizing the need for models to cater effectively to diverse cultural contexts.

- Technical advancements focus on automated evaluation protocols and diverse dataset annotations to improve model accuracy and cultural competence.

Main AI News:

The realm of vision-language models (VLMs) is advancing rapidly, driven by their potential to transform numerous applications, particularly visual assistance for the visually impaired. Yet, current assessments of these models often overlook the challenges posed by scenarios involving multiple objects and diverse cultural contexts. Recent studies delve into these complexities, exploring issues such as object hallucination in VLMs and the imperative of cultural inclusivity in their deployment.

Multi-Object Hallucination

Object hallucination, where VLMs describe non-existent objects in images, poses significant challenges, particularly in scenarios requiring recognition of multiple objects simultaneously. Addressing this, the Recognition-based Object Probing Evaluation (ROPE) protocol has been introduced as a comprehensive framework. It assesses how models handle diverse scenarios, considering factors like object class distribution and the impact of visual cues on model performance.

ROPE categorizes evaluation scenarios into distinct subsets—In-the-Wild, Homogeneous, Heterogeneous, and Adversarial—allowing nuanced analysis of model behavior across varied conditions. Studies indicate that larger VLMs tend to exhibit higher rates of hallucination when processing multiple objects, influenced by factors such as object salience, frequency in data, token entropy, and visual modality contribution.

Empirical findings underscore the prevalence of multi-object hallucinations across different VLMs, underscoring the need for balanced datasets and refined training protocols to mitigate these challenges effectively.

Cultural Inclusivity in Vision-Language Models

Beyond technical proficiency, the efficacy of VLMs hinges on their ability to cater to diverse cultural contexts. Addressing this gap, a culture-centric evaluation benchmark has been proposed. This research emphasizes the importance of considering users’ cultural backgrounds, especially in contexts involving visually impaired individuals.

Researchers conducted surveys to capture cultural preferences from visually impaired users, refining datasets like VizWiz to identify images with implicit cultural references. The benchmark evaluates various models, revealing that while closed-source models like GPT-4o and Gemini-1.5-Pro excel in generating culturally relevant captions, substantial gaps remain in capturing the full spectrum of cultural nuances.

Automatic evaluation metrics, while useful, must align closely with human judgment, particularly in culturally diverse settings, to accurately assess VLM performance.

Comparative Insights

Combining insights from both studies offers a holistic view of VLM challenges in real-world applications. Multi-object hallucination highlights technical limitations, while cultural inclusivity underscores the need for human-centered evaluation frameworks.

Technical Advances:

- ROPE Protocol: Automated evaluation protocols integrating object class distributions and visual prompts.

- Data Diversity: Ensuring diverse object annotations in training datasets.

Cultural Considerations:

- User-Centered Surveys: Incorporating user feedback to refine captioning preferences.

- Cultural Annotations: Enhancing datasets with culturally specific annotations.

Conclusion:

The advancements in vision-language models represent significant strides towards more effective visual assistance applications. However, challenges such as multi-object hallucination and cultural inclusivity underscore the importance of refining evaluation frameworks and dataset diversity. For the market, these developments signal opportunities for enhanced user engagement and broader application in diverse cultural settings, contingent upon addressing these technical and cultural challenges effectively.