TL;DR:

- EgoGen, developed by researchers at ETH Zurich and Microsoft, is a synthetic data generator built for egocentric perception tasks in Augmented Reality (AR).

- It addresses a central difficulty of egocentric simulation: reproducing the natural human movements and behaviors that steer an embodied camera, which is crucial for capturing accurate egocentric representations of the 3D environment.

- EgoGen combines a human motion synthesis model, collision-avoiding motion primitives, and a two-stage reinforcement learning strategy.

- Quantitative evaluations show improved performance on three tasks: mapping and localization for head-mounted cameras, egocentric camera tracking, and human mesh recovery from egocentric views.

- EgoGen’s user-friendly and scalable data generation pipeline is open-source, offering a practical solution for generating realistic egocentric training data.

- Its adaptability extends to applications beyond egocentric perception, with potential benefits in human-computer interaction, virtual reality, and robotics.

Main AI News:

In Augmented Reality (AR), understanding the world from a first-person perspective is essential. The egocentric view brings its own challenges and substantial visual changes compared with conventional third-person views. Synthetic data has improved vision models for third-person perspectives, but its use in embodied egocentric perception tasks remains largely unexplored. One major obstacle is faithfully simulating natural human movements and behaviors, which steer the embodied camera and are therefore essential for capturing accurate egocentric representations of the 3D environment.

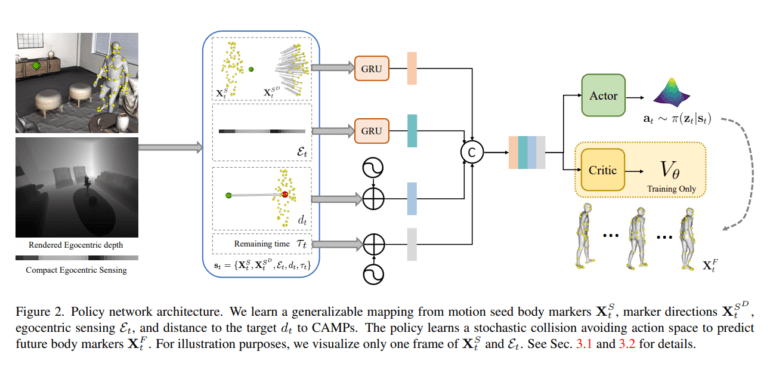

To address this challenge, researchers from ETH Zurich and Microsoft introduce EgoGen, a synthetic data generator designed to provide accurate and rich ground-truth training data for egocentric perception tasks. At the heart of EgoGen is a human motion synthesis model that uses the egocentric visual input of a virtual human to sense the surrounding 3D environment.

The model is combined with collision-avoiding motion primitives and driven by a two-stage reinforcement learning strategy. The result is a closed-loop system that couples the virtual human’s embodied perception with its movement: what it sees determines how it moves, and how it moves determines what it sees next. Unlike previous approaches, it requires no predefined global path and applies directly to dynamic environments.
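To make the closed-loop idea concrete, here is a minimal 2D sketch of a sense, choose, move cycle with no precomputed global path. It is a toy illustration, not EgoGen’s model: the primitive set, the circular obstacles, the sensing range, and the greedy selection rule (a stand-in for a learned policy) are all assumptions made for this example.

```python
import math

# Hypothetical motion primitives: (turn in radians, forward step in metres).
# The last two turn in place without moving.
PRIMITIVES = [(0.0, 0.3), (0.4, 0.3), (-0.4, 0.3), (0.8, 0.3), (-0.8, 0.3),
              (1.2, 0.0), (-1.2, 0.0)]
BODY_RADIUS = 0.2   # assumed radius of the virtual human's footprint
SENSE_RANGE = 2.0   # assumed sensing range of the egocentric view


def observe(pos, obstacles):
    """Egocentric observation: obstacles within range, in agent-relative coordinates."""
    x, y = pos
    return [(ox - x, oy - y, r) for ox, oy, r in obstacles
            if math.hypot(ox - x, oy - y) - r <= SENSE_RANGE]


def is_free(offset, local_obstacles):
    """True if the body, displaced by `offset`, touches no sensed obstacle."""
    dx, dy = offset
    return all(math.hypot(ox - dx, oy - dy) >= r + BODY_RADIUS
               for ox, oy, r in local_obstacles)


def choose_primitive(heading, goal_offset, local_obstacles):
    """Greedy stand-in for a learned policy: among the collision-free
    primitives, pick the one whose end point is closest to the goal."""
    best, best_cost = None, float("inf")
    for turn, step in PRIMITIVES:
        h = heading + turn
        offset = (step * math.cos(h), step * math.sin(h))
        if not is_free(offset, local_obstacles):
            continue  # collision-avoiding filter on primitives
        cost = math.hypot(goal_offset[0] - offset[0], goal_offset[1] - offset[1])
        if cost < best_cost:
            best, best_cost = (h, offset), cost
    return best


def rollout(start, goal, obstacles, max_steps=200, tol=0.3):
    """Closed loop: observe, choose a primitive, move, repeat."""
    pos, heading = start, 0.0
    trajectory = [pos]
    for _ in range(max_steps):
        if math.hypot(goal[0] - pos[0], goal[1] - pos[1]) <= tol:
            break
        local = observe(pos, obstacles)  # re-sensed every step
        goal_offset = (goal[0] - pos[0], goal[1] - pos[1])
        choice = choose_primitive(heading, goal_offset, local)
        if choice is None:
            break
        heading, offset = choice
        pos = (pos[0] + offset[0], pos[1] + offset[1])
        trajectory.append(pos)
    return trajectory
```

Because the obstacles are re-sensed at every step, the same loop would still work if they moved between steps. The greedy rule can stall in cluttered scenes, which is one reason a learned policy is preferable to a hand-written one.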

With EgoGen, existing real-world egocentric datasets can be augmented with synthetic images. Quantitative evaluations show improved performance of state-of-the-art algorithms on three tasks: mapping and localization for head-mounted cameras, egocentric camera tracking, and human mesh recovery from egocentric views. These results indicate that EgoGen can strengthen existing algorithms and support further egocentric computer vision research.
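As a rough illustration of what augmenting a real dataset with synthetic samples can look like in practice, the sketch below mixes two sample lists at a chosen ratio. The function name `mix_datasets`, the `synthetic_ratio` parameter, and the source tags are hypothetical and are not part of EgoGen’s pipeline.

```python
import random


def mix_datasets(real, synthetic, synthetic_ratio=0.5, seed=0):
    """Return a shuffled list of (sample, source) pairs in which about
    `synthetic_ratio` of the items are synthetic. All real samples are kept."""
    if not 0.0 <= synthetic_ratio < 1.0:
        raise ValueError("synthetic_ratio must be in [0, 1)")
    rng = random.Random(seed)  # fixed seed for reproducible mixes
    # Number of synthetic items needed to reach the target share,
    # capped by how many synthetic samples are available.
    n_syn = round(len(real) * synthetic_ratio / (1.0 - synthetic_ratio))
    n_syn = min(n_syn, len(synthetic))
    chosen = rng.sample(synthetic, n_syn)
    mixed = [(x, "real") for x in real] + [(x, "synthetic") for x in chosen]
    rng.shuffle(mixed)
    return mixed


# Usage with placeholder file names standing in for real and rendered frames.
real_frames = [f"real_{i}.png" for i in range(8)]
synthetic_frames = [f"syn_{i}.png" for i in range(10)]
train_set = mix_datasets(real_frames, synthetic_frames, synthetic_ratio=0.2)
```

Keeping the source tag next to each sample makes it easy to evaluate on real data only, or to vary the synthetic share and observe its effect on a downstream task.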

Beyond the core model, EgoGen comes with a user-friendly and scalable data generation pipeline, whose effectiveness is demonstrated on the three tasks above. By releasing EgoGen as open source, its creators aim to offer a practical way to generate realistic egocentric training data and a useful resource for researchers working on egocentric computer vision.

EgoGen’s potential also extends beyond its initial scope. Its adaptability makes it a promising tool for human-computer interaction, virtual reality, and robotics. As an open-source project, it is expected to encourage further work on egocentric perception and in computer vision research more broadly.

Conclusion:

EgoGen marks a notable step forward for egocentric perception. By providing accurate synthetic training data, it can strengthen AR systems, mapping and localization methods, and other computer vision algorithms. Its open-source release invites collaboration and opens the door to applications beyond its original scope, including human-computer interaction, virtual reality, and robotics.