- AI experts developed WILDHALLUCINATIONS, a benchmarking tool for evaluating the factual accuracy of large language models (LLMs).

- LLMs like ChatGPT are widely used but prone to generating inaccurate statements, known as hallucinations.

- The quality of training data is crucial; models trained on highly accurate datasets are more reliable.

- Despite claims of improvements, most LLMs need more progress in accuracy over previous versions.

- LLMs perform better when they can reference reliable sources like Wikipedia but need help with topics like celebrities and financial matters.

Main AI News:

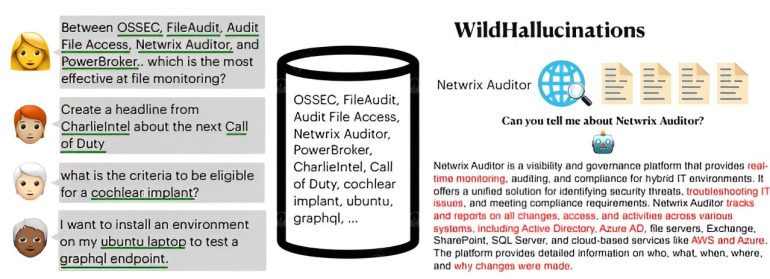

A consortium of AI experts from Cornell University, the University of Washington, and the Allen Institute for Artificial Intelligence has unveiled WILDHALLUCINATIONS, a cutting-edge benchmarking tool designed to rigorously evaluate the factual reliability of leading large language models (LLMs). This initiative, documented in a recent paper on the arXiv preprint server, represents a significant step forward in the ongoing effort to quantify and improve the accuracy of these increasingly influential AI systems.

LLMs such as ChatGPT have rapidly integrated into various industries, being leveraged for tasks ranging from drafting correspondence and creative writing to producing research papers. Yet, despite their widespread adoption, these models have exhibited a critical flaw: a tendency to generate statements that need to be grounded in fact. These deviations from accuracy, commonly referred to as “hallucinations,” have raised concerns, especially when they stray too far from verifiable information.

The research team asserts that the root cause of these hallucinations is largely attributed to the quality of the training data. LLMs are typically trained on vast corpora of internet text, which, while extensive, vary widely in accuracy. Consequently, models built on meticulously curated, high-fidelity datasets are demonstrably more likely to produce correct outputs.

Despite claims from LLM developers about the reduced hallucination rates in their latest iterations, the research team identified a gap in the means available to users for independently verifying these assertions. To address this need, WILDHALLUCINATIONS was developed to empower users with a tool to assess the accuracy of popular LLMs objectively.

WILDHALLUCINATIONS operates by prompting various LLMs to respond to user-generated queries and subjecting these responses to a rigorous fact-checking process. Recognizing that many chatbot responses are often derived from widely accessible sources like Wikipedia, the researchers specifically analyzed the accuracy of responses based on whether the information was available on such platforms.

In applying their tool across several top LLMs, including the latest updates, the researchers uncovered a sobering reality: advancements in LLM accuracy have been minimal. Most models demonstrated accuracy levels comparable to their predecessors.

Interestingly, the study revealed that LLMs are generally more accurate when referencing Wiki page information. However, their performance varied significantly across different topics. For example, they struggled with delivering reliable content on celebrities and financial matters but excelled in fields like science, where factual consistency is more easily maintained.

Conclusion:

The introduction of WILDHALLUCINATIONS underscores the ongoing challenge of improving the factual accuracy of large language models. Despite significant investments and development efforts, most LLMs have yet to show substantial advancements in accuracy, which could undermine their credibility in professional and business settings. This stagnation suggests a critical need for more rigorous training data and evaluation methods. For the market, it signals that while AI continues to be a powerful tool, its limitations necessitate cautious deployment, especially in areas where accuracy is paramount. Businesses relying on these models should remain vigilant and consider complementary solutions to mitigate the risk of misinformation.