- Offline RL utilizes prior data to develop policies, facing challenges in aligning dataset distributions with learned policies.

- Policy extraction methods significantly impact performance over value learning algorithms.

- Behavior-regularized policy gradient techniques like DDPG+BC show superior scalability and performance compared to traditional methods like AWR.

- Offline RL struggles with policy effectiveness on test-time states, emphasizing the need for better generalization strategies.

- High-coverage datasets and test-time policy extraction are suggested solutions to improve offline RL performance.

Main AI News:

Data-driven methods that convert offline datasets of prior experiences into policies play a pivotal role in solving control problems across various domains. Within this domain, two primary approaches stand out: imitation learning and offline reinforcement learning (RL). While imitation learning relies on high-quality demonstration data, offline RL has the potential to derive effective policies even from suboptimal datasets, making it a theoretically intriguing area of study. However, recent studies indicate that with sufficient expert data and fine-tuning, imitation learning often outperforms offline RL, despite the latter having access to ample data. This raises significant questions regarding the key factors influencing the performance gap observed in offline RL.

Offline RL focuses on developing policies exclusively from previously collected data. A major challenge in this field lies in bridging the gap between the state-action distributions present in the dataset and those required by the learned policy. This disparity often leads to substantial overestimations in value estimates, posing potential risks. To mitigate this issue, previous research in offline RL has proposed various methods aimed at improving the accuracy of value function estimations using techniques like behavior-regularized policy gradient (e.g., DDPG+BC), weighted behavioral cloning (e.g., AWR), and sampling-based action selection methods like SfBC. Despite these efforts, only limited studies have systematically analyzed and addressed the practical hurdles encountered in offline RL implementations.

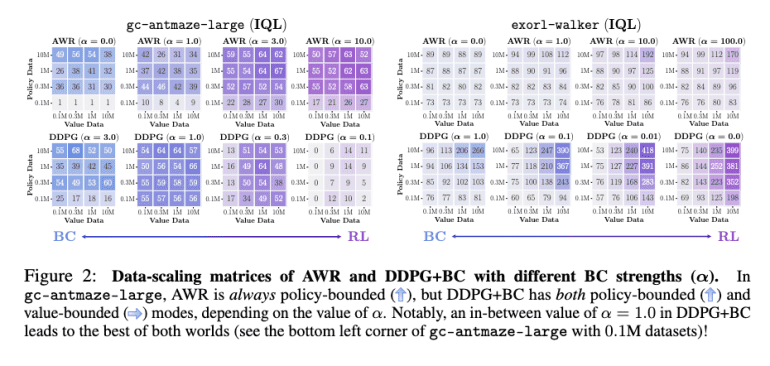

Recent research conducted jointly by the University of California Berkeley and Google DeepMind has unveiled two notable insights into offline RL, offering valuable guidance for domain-specific practitioners and future algorithmic advancements. Firstly, researchers found that the choice of policy extraction algorithms exerts a more significant impact on overall performance than the selection of value learning algorithms. Surprisingly, policy extraction methods are often overlooked during the design of value-based offline RL algorithms. Among various policy extraction techniques, behavior-regularized policy gradient approaches such as DDPG+BC consistently exhibit superior scalability and performance compared to conventional methods like value-weighted regression (e.g., AWR).

Secondly, researchers observed that offline RL frequently encounters challenges related to the performance of policies on test-time states, which differ from those encountered during training. The primary concern shifts from issues such as pessimism and behavioral regularization to the broader issue of policy generalization across diverse operational contexts. To address this, researchers proposed practical solutions focusing on leveraging high-coverage datasets and implementing test-time policy extraction techniques.

In response to these challenges, researchers have introduced novel techniques aimed at enhancing policy refinement during evaluation, thereby leveraging insights derived from the value function to improve overall performance. Notably, among the various policy extraction algorithms evaluated, DDPG+BC has consistently demonstrated optimal performance and scalability across different scenarios, closely followed by methods like SfBC. Conversely, the performance of AWR has shown significant limitations in comparison, highlighting its potential drawbacks in multiple use cases. Furthermore, the data-scaling matrices utilized by AWR often exhibit vertical or diagonal color gradients, reflecting partial utilization of the underlying value function. The choice of policy extraction algorithm, such as weighted behavioral cloning, significantly influences the efficacy of learned value functions and subsequently impacts the overall performance potential of offline RL implementations.

Conclusion:

This research highlights the critical role of policy extraction methods in enhancing the effectiveness of Offline Reinforcement Learning (RL). The findings underscore the importance for businesses and developers in the RL market to prioritize sophisticated policy extraction techniques like DDPG+BC to maximize performance and scalability. Addressing challenges related to dataset distribution alignment and policy generalization not only improves current RL applications but also sets the stage for future advancements in algorithm development and implementation strategies.