TL;DR:

- Large language models (LLMs) with vision capabilities, such as GPT-Vision, are revolutionizing visual data interpretation.

- University of Pennsylvania researchers have developed a framework to evaluate how well AI describes complex scientific images.

- AI can aid the visually impaired, assist authors in generating image descriptions, and enhance information processing.

- Challenges include ensuring accuracy, avoiding misuse, and refining AI-generated summaries.

- Grounded theory-based qualitative analysis offers valuable insights into AI performance.

- Caution is advised in high-stakes scenarios like medicine and cooking.

- Future applications include improving audiobook accessibility and bridging the gap between complex equations and natural language.

Main AI News:

Over the past year, Large Language Models (LLMs) have gained recognition for their expanding capabilities, which now include text generation, image generation, and, most recently, detailed image analysis. Bringing artificial intelligence (AI) into image analysis marks a significant shift in how people perceive and engage with visual data. Interpreting images has traditionally relied on human vision and contextual knowledge. New AI tools change this by generating detailed descriptions, which lets a broader audience engage with images. These descriptions can aid people who are blind or visually impaired and can also help the general public understand the content of scientific illustrations.

Associate Professor Chris Callison-Burch, Assistant Professor Andrew Head, and Ph.D. Candidate Alyssa Hwang, all from the Department of Computer and Information Science at the University of Pennsylvania's School of Engineering and Applied Science, have developed a framework for assessing the capabilities of vision-based AI, applied to OpenAI's GPT-Vision. Their evaluation, conducted before the model's public release, focused on how well the LLM identifies and describes scientific images. Their findings are described in a paper posted to the preprint server arXiv.

A Deeper Dive into the AI’s Functionality and Testing Methodology

Hwang explains that vision-based LLMs, such as GPT-Vision, possess the ability to analyze images and respond to a wide array of requests by processing both images and accompanying text as input. The research team curated a diverse set of test images comprising diagrams, graphs, tables, code snippets, mathematical equations, and full-text pages. Their objective was to gauge the AI’s proficiency in describing these images accurately.

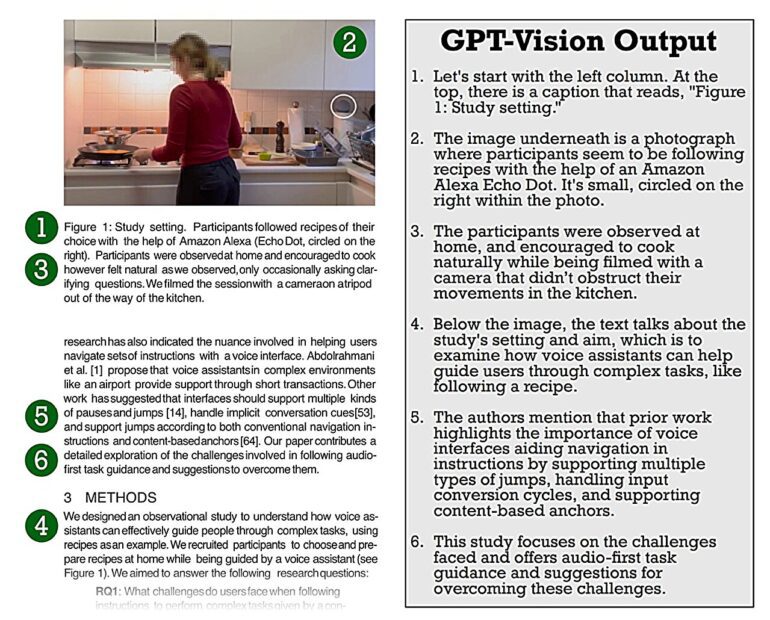

Scientific images often convey intricate information, which makes them good test subjects. Hwang emphasizes the team's focus on qualitative analysis, using established methods from the social sciences to uncover patterns in the AI's responses. In one notable experiment, the researchers presented a photo collage of 12 dishes, each labeled with its recipe name. Even when the labels were deliberately replaced with unrelated terms, GPT-Vision still tried to work these fictitious labels into its descriptions.

Hwang notes, however, that the AI performed noticeably better when it was first prompted to check whether the labels were accurate. This suggests the model can make informed inferences from what it actually sees, a promising avenue for further research.

Additionally, when asked to describe an entire page of text, the AI tended to produce a summary instead, often incomplete and out of order. It occasionally misquoted the author or copied large portions of text verbatim from the source, which could cause problems if AI-generated content is redistributed. Nevertheless, Hwang is confident that, with appropriate adjustments, GPT-Vision can learn to produce accurate summaries, quote text correctly, and avoid over-relying on source material.

The Framework and Its Implications

Researchers in natural language processing have traditionally relied on automatic metrics to evaluate the quality of generated text. Hwang points out that this has become harder as generative AI models produce increasingly sophisticated text: once evaluation moves beyond grammatical correctness to subjective qualities, such as how interesting a story is, automatic metrics no longer give straightforward answers.

Drawing from her prior experience with Amazon’s Alexa and her background in social sciences and human-computer interaction research, Hwang introduced grounded theory, a qualitative analysis method, into the evaluation process. This approach allowed the researchers to identify patterns in the AI’s responses and iteratively generate more insights as they delved deeper into the data. Hwang believes that this research-based method formalizes the trial-and-error process, providing both researchers and the broader audience with valuable insights into emerging generative AI models.

Applications and Considerations

Hwang highlights the potential of AI-generated image descriptions as an accessibility tool for people who are blind or visually impaired. The AI can automatically generate alt text for existing images, or authors can use it to help draft descriptive text before publishing. Beyond accessibility, image descriptions can also help sighted readers with information processing disorders, for example when reading news articles or textbooks.

However, Hwang advises caution, particularly in high-stakes scenarios such as medicine and cooking. Inaccuracies in AI-generated information can pose significant risks when users are unable to verify the AI’s output. For instance, relying on AI to determine the dosage of a medical treatment could have severe consequences. It is essential to thoroughly assess and understand the limitations of these technologies before widespread adoption.

Final Thoughts and Future Endeavors

Overall, Hwang is impressed by the current state of generative AI and sees many opportunities for future development. Key areas for improvement include addressing inconsistencies and exploring creative and inclusive applications of these tools. Researchers must keep investigating subjective questions: what makes a description good, when it is useful, and when it becomes annoying. User feedback should play a central role in refining these AI models as they evolve.

Inspired by the idea of narrating scientific papers with intuitive explanations of their figures and formulas, Hwang's next project uses AI models to improve audiobooks. She envisions a more seamless listening experience in which AI summarizes content in real time and lets listeners navigate an audiobook sentence by sentence or paragraph by paragraph. AI could also translate complex mathematical equations into natural language, making textbooks and research papers more accessible. Hwang is enthusiastic about contributing to the development of these applications.

Conclusion:

The advancement of generative AI in visual data interpretation signifies a transformative shift in the market. Businesses should explore opportunities for implementing AI-driven image description services to enhance accessibility and enrich user experiences. However, a cautious approach is necessary, especially in domains with high-stakes implications, to ensure the reliability and accuracy of AI-generated content. As these technologies continue to evolve, user feedback and ongoing refinement will play pivotal roles in shaping their market impact.