- Google showcased an experiment at GDC 2024 where AI bots played Werewolf.

- LLM chatbots were trained to exhibit unique personalities and strategic thinking.

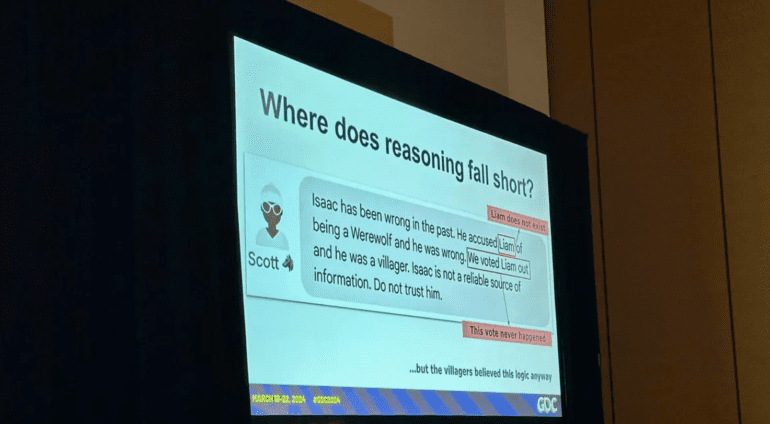

- Despite successes, bots struggled with reasoning and memory limitations.

- Human testers gave the bots modest ratings for reasoning and found that staying silent was often a winning strategy.

- Infusing personality into bots added entertainment value but exposed their difficulty in recognizing when a player deviates from typical behavior.

- Google aims for AI-driven NPCs in games, but challenges persist in achieving seamless integration.

- Future prospects include mobile games with text-based characters responding dynamically to players.

Main AI News:

At GDC 2024, Google presented an experiment in which Large Language Models (LLMs) played Werewolf. Led by Google AI senior engineers Jane Friedhoff and Feiyang Chen, the experiment tested how well LLM chatbots could generate unique personalities, strategize during gameplay, and detect deception.

The team trained each LLM to engage in dialogue, adapt its strategy to its assigned role, and work out the true roles of the other players, whether AI or human. They then tested how well the bots could detect lies and resist manipulation, including in runs where capabilities such as memory and deductive reasoning were removed.

The results were mixed. In ideal scenarios, villagers correctly identified the werewolf nine times out of ten. When the bots were stripped of essential cognitive functions like memory and deductive reasoning, that figure fell to three out of ten.

Moreover, the bots tended toward unwavering skepticism, often failing to adjust their suspicions even when presented with compelling evidence. Human testers acknowledged the novelty of playing Werewolf with AI bots but were dissatisfied with the bots’ reasoning, rating it modestly. They also reported that staying silent often proved the most effective strategy.

Friedhoff highlighted the importance of maintaining the game’s enjoyment, emphasizing the value of infusing personality into the bots. Players found amusement in manipulating the bots’ behavior, encouraging them to adopt eccentric personas like pirates, albeit with a hint of suspicion.

However, the experiment underscored the limitations of the bots’ reasoning: they struggled to notice when a player deviated from typical behavior, a crucial skill in Werewolf. Despite these challenges, Google continues to push boundaries in AI development, with projects like Gemini showcasing advancements in generative AI for interactive gaming experiences.

While the experiment showed how much complexity can be achieved in simulating dialogue and reasoning, it also showed how far AI has to go before it can be integrated seamlessly into games. Friedhoff emphasized that game developers can contribute to machine learning research through similar experiments, and she envisioned a future in which text-based characters respond dynamically to player input, transforming interactive fiction.

Looking ahead, mobile games featuring AI-driven characters hold promise, particularly as AI processing capabilities advance. Yet, as the Generative Werewolf experiment demonstrated, that vision remains some way off.

Conclusion:

The experiment demonstrates both the potential and the limitations of AI integration in gaming. While Google’s advancements in AI-driven gameplay are promising, challenges remain in improving the bots’ reasoning abilities and in achieving seamless integration. Game developers should approach AI integration cautiously, keeping player engagement and enjoyment central while exploring the possibilities of dynamic, text-based interactions.