TL;DR:

- AI Group Normalization is a technique that normalizes the intermediate features (activations) inside a neural network, which can improve the accuracy and training stability of machine learning models.

- Group Normalization divides channels into smaller groups, enhancing stability and reducing dependence on batch size.

- Models trained with Group Normalization can achieve higher accuracy in image recognition tasks than those trained with Batch Normalization, particularly when batch sizes are small.

- Group Normalization works well with small batches, which lowers the memory needed for training and makes it suitable for resource-constrained applications.

- Determining the optimal group size for normalization is crucial for maximizing the benefits.

- Further research is needed to explore the potential of Group Normalization in other domains beyond image recognition.

Main AI News:

In the realm of artificial intelligence (AI), significant strides have been made in recent years, with machine learning models driving many of these advancements. Among the techniques reshaping the field, AI Group Normalization stands out for changing how features are normalized as they flow through a neural network, which can improve both accuracy and training stability.

At its core, AI Group Normalization is a technique that standardizes or “normalizes” features. Normalization is familiar from data preprocessing, where all input features are brought to the same scale so that a model can learn from them effectively. Group Normalization applies the same idea inside the network itself: it rescales the intermediate features produced by a layer to zero mean and unit variance, which helps training stay stable and can improve predictive performance.

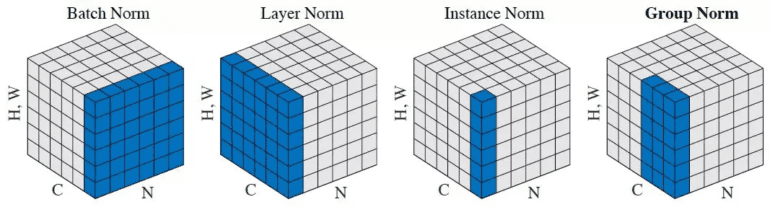

Diving deeper, Group Normalization is a variant of normalization that partitions a layer's channels into smaller groups and normalizes the features within each group, using a mean and variance computed per group. This approach was introduced to address the limitations of Batch Normalization, a widely utilized normalization technique in deep learning. Unlike Batch Normalization, which computes its statistics across the batch of data, Group Normalization computes them separately for each instance. This key distinction makes its behavior independent of the batch size, so it remains stable even when batches are very small.
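To make the grouping concrete, here is a minimal NumPy sketch of the normalization step. It assumes image-style inputs of shape `(N, C, H, W)`; the function name `group_norm`, the `eps` value, and the random test data are illustrative choices, not taken from any particular library. The learnable per-channel scale and shift that practical implementations usually apply afterwards are left out for brevity.

```python
import numpy as np

def group_norm(x, num_groups, eps=1e-5):
    """Normalize x of shape (N, C, H, W) within channel groups, per sample."""
    n, c, h, w = x.shape
    if c % num_groups != 0:
        raise ValueError("channel count must be divisible by num_groups")
    # Split the C channels into num_groups groups of C // num_groups channels.
    grouped = x.reshape(n, num_groups, c // num_groups, h, w)
    # Statistics are computed per sample and per group, never across the batch.
    mean = grouped.mean(axis=(2, 3, 4), keepdims=True)
    var = grouped.var(axis=(2, 3, 4), keepdims=True)
    normalized = (grouped - mean) / np.sqrt(var + eps)
    return normalized.reshape(n, c, h, w)

rng = np.random.default_rng(0)
x = rng.normal(loc=3.0, scale=2.0, size=(4, 8, 5, 5))  # hypothetical activations
y = group_norm(x, num_groups=2)

# View the output as (sample, group, values) to inspect each group's statistics.
group_view = y.reshape(4, 2, -1)
print(group_view.mean(axis=2))  # close to 0 for every (sample, group) pair
print(group_view.std(axis=2))   # close to 1 for every (sample, group) pair
```

Note that the batch axis never appears in the reduction, which is what makes the result for one sample independent of the others.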

The impact of AI Group Normalization on machine learning models has been notable across various tasks. In image recognition, for example, models trained with Group Normalization have achieved higher accuracy than those trained with Batch Normalization when batch sizes are small, while Batch Normalization remains competitive when large batches are available. This success stems from the fact that Group Normalization’s statistics never depend on the other samples in the batch, so they do not become noisy as the batch shrinks, resulting in more reliable predictions.
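One way to see why batch size matters less for Group Normalization is to normalize the same sample twice, once alone and once alongside other samples, and compare the results. The sketch below does this with simplified NumPy versions of both techniques; the helper names, shapes, and data are assumptions made for illustration, and `batch_norm` here uses training-style batch statistics without running averages or learnable parameters.

```python
import numpy as np

def group_norm(x, num_groups, eps=1e-5):
    # Per-sample, per-group statistics over an (N, C, H, W) input.
    n = x.shape[0]
    g = x.reshape(n, num_groups, -1)
    mean = g.mean(axis=2, keepdims=True)
    var = g.var(axis=2, keepdims=True)
    return ((g - mean) / np.sqrt(var + eps)).reshape(x.shape)

def batch_norm(x, eps=1e-5):
    # Per-channel statistics pooled across the whole batch.
    mean = x.mean(axis=(0, 2, 3), keepdims=True)
    var = x.var(axis=(0, 2, 3), keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

rng = np.random.default_rng(0)
sample = rng.normal(size=(1, 4, 3, 3))
others = rng.normal(loc=5.0, size=(3, 4, 3, 3))  # differently distributed samples
batch = np.concatenate([sample, others], axis=0)

# Does the first sample's output change when other samples share its batch?
gn_same = np.allclose(group_norm(sample, 2), group_norm(batch, 2)[:1])
bn_same = np.allclose(batch_norm(sample), batch_norm(batch)[:1])
print(gn_same)  # True: the Group Normalization output ignores the rest of the batch
print(bn_same)  # False: the Batch Normalization output shifts with the batch
```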

Moreover, AI Group Normalization also contributes to the efficiency of machine learning models. Because it operates on single instances, it allows models to be trained with small batches, which reduces the memory required for training. It also performs the same computation during training and inference, so no running averages of batch statistics need to be maintained. Consequently, it is a good fit for applications where computational resources are constrained, such as mobile devices or edge computing.

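Despite its advantages, AI Group Normalization is not exempt from challenges. Determining the optimal group size for normalization represents one of the main hurdles, and the number of channels must divide evenly into the chosen number of groups. When the group size is too small, each group's statistics are computed from only a few channels and the model may struggle to capture relationships between features effectively; at the extreme of one channel per group, the method becomes equivalent to Instance Normalization. Conversely, if the group size is too large, the benefits of Group Normalization may diminish; a single group containing every channel is equivalent to Layer Normalization. Striking the right balance is thus essential to maximize the advantages of AI Group Normalization.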
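The two extremes of the group-size choice can be checked numerically. The following NumPy sketch, again assuming `(N, C, H, W)` inputs and hypothetical helper names, lists the group counts that divide a layer's channels evenly and confirms that one group reproduces a layer-style normalization over all channels, while one group per channel reproduces an instance-style normalization. Learnable scale and shift parameters are omitted on both sides of the comparison.

```python
import numpy as np

def group_norm(x, num_groups, eps=1e-5):
    n, c = x.shape[:2]
    if c % num_groups != 0:
        raise ValueError("channel count must be divisible by num_groups")
    g = x.reshape(n, num_groups, -1)
    mean = g.mean(axis=2, keepdims=True)
    var = g.var(axis=2, keepdims=True)
    return ((g - mean) / np.sqrt(var + eps)).reshape(x.shape)

def standardize(x, axes, eps=1e-5):
    # Generic zero-mean, unit-variance rescaling over the given axes.
    mean = x.mean(axis=axes, keepdims=True)
    var = x.var(axis=axes, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

rng = np.random.default_rng(0)
x = rng.normal(size=(2, 8, 4, 4))
channels = x.shape[1]

# Only group counts that divide the channel count evenly are usable.
valid_groups = [g for g in range(1, channels + 1) if channels % g == 0]
print(valid_groups)  # [1, 2, 4, 8]

# One group: statistics over (C, H, W), as in Layer Normalization.
matches_layer_norm = np.allclose(group_norm(x, 1), standardize(x, (1, 2, 3)))
# One channel per group: statistics over (H, W), as in Instance Normalization.
matches_instance_norm = np.allclose(group_norm(x, channels), standardize(x, (2, 3)))
print(matches_layer_norm, matches_instance_norm)  # True True
```

In practice the group count is treated as a hyperparameter and tuned between these two extremes.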

Moreover, while AI Group Normalization has displayed promising results in image recognition tasks, its efficacy in other domains remains an area of ongoing research. Future studies will shed light on the potential of Group Normalization across a broader range of applications, such as natural language processing or recommendation systems.

Conclusion:

The emergence of AI Group Normalization as a powerful technique for improving machine learning models has significant implications for the market. Businesses and organizations can leverage this technology to enhance the accuracy and efficiency of their AI systems, particularly in image recognition tasks. The ability to maintain accuracy with small batches and to reduce the memory needed for training makes Group Normalization a valuable asset, especially in applications with limited computational capabilities, such as mobile devices and edge computing. However, it is important for market players to invest in further research and development to unlock the full potential of Group Normalization across a wider range of applications beyond image recognition. By embracing this technique, businesses can stay at the forefront of AI advancements and improve the performance of their machine learning systems.