TL;DR:

- Falcon 180B, a new open-source LLM, boasts 180 billion parameters, setting new standards in AI.

- A Hugging Face blog post announces Falcon 180B’s release on the Hugging Face Hub.

- Falcon 180B’s architecture builds upon prior Falcon series models and uses innovations like multiquery attention.

- Sets a record among open-source models for the longest single-epoch pretraining, using 4,096 GPUs and about 7 million GPU hours.

- Falcon 180B has roughly 2.5 times as many parameters as Meta’s LLaMA 2 (180 billion versus 70 billion).

- Outperforms LLaMA 2 and competes closely with commercial models like Google’s PaLM-2.

- Impressive performance on various NLP benchmarks, including HellaSwag, LAMBADA, WebQuestions, and Winogrande.

- Positioned between GPT-3.5 and GPT-4 in terms of capabilities, depending on the benchmark.

- Marks a significant advancement in the AI landscape, driven by techniques like LoRAs, weight randomization, and Nvidia’s Perfusion.

Main AI News:

In a groundbreaking announcement, the artificial intelligence landscape welcomes Falcon 180B, a monumental achievement in the realm of open-source large language models (LLMs). Boasting an impressive 180 billion parameters, this remarkable newcomer has swiftly outshined its predecessors, marking a significant stride in AI innovation.

Unveiled via a blog post by the Hugging Face AI community, Falcon 180B is now available on the Hugging Face Hub. Building upon the foundation laid by the previous Falcon series of open-source LLMs, it incorporates features such as multiquery attention and scales up to 180 billion parameters, trained on a colossal dataset of 3.5 trillion tokens.
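For readers unfamiliar with multiquery attention, the idea is that every query head attends over a single shared set of keys and values, rather than each head having its own. The following is a minimal pure-Python sketch of that idea only. It is not Falcon's actual implementation: the function names and tensor layout are assumptions made for illustration, and causal masking and the learned projection matrices are deliberately left out.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def multi_query_attention(queries, keys, values):
    """Illustrative multiquery attention (hypothetical helper, no masking).

    queries: [num_heads][seq_len][head_dim], one set of queries per head
    keys:    [seq_len][head_dim], shared by every head
    values:  [seq_len][head_dim], shared by every head
    Returns outputs shaped [num_heads][seq_len][head_dim].
    """
    head_dim = len(keys[0])
    scale = 1.0 / math.sqrt(head_dim)
    outputs = []
    for head_queries in queries:
        head_output = []
        for q in head_queries:
            # Every head scores against the same keys.
            weights = softmax([dot(q, k) * scale for k in keys])
            # Weighted sum over the same shared values.
            mixed = [
                sum(w * v[d] for w, v in zip(weights, values))
                for d in range(len(values[0]))
            ]
            head_output.append(mixed)
        outputs.append(head_output)
    return outputs
```

Because the keys and values are stored once instead of once per head, the cache kept during generation shrinks by roughly the number of heads, which is the usual motivation for this design.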

This accomplishment represents a milestone in the realm of open-source models, as Falcon 180B achieves the longest single-epoch pretraining ever witnessed. This feat required the collective power of 4,096 GPUs, working relentlessly for approximately 7 million GPU hours, with Amazon SageMaker serving as the platform for training and refinement.
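As a rough sanity check on those figures, the GPU-hour total can be converted into wall-clock time. The sketch below assumes, purely for illustration, that all 4,096 GPUs ran concurrently for the entire run; the announcement does not state this, so the result is a lower bound on elapsed time, not a reported number. The helper name is hypothetical.

```python
GPU_HOURS = 7_000_000  # approximate total from the announcement
NUM_GPUS = 4_096       # GPU count from the announcement


def wall_clock_days(gpu_hours, num_gpus):
    """Elapsed days if every GPU ran in parallel for the whole job (assumption)."""
    hours_per_gpu = gpu_hours / num_gpus
    return hours_per_gpu / 24


days = wall_clock_days(GPU_HOURS, NUM_GPUS)
print(f"{days:.1f} days")  # prints "71.2 days"
```

Under that assumption the training run would have taken a little over two months of continuous compute.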

To put the sheer magnitude of Falcon 180B into perspective, it has roughly 2.5 times as many parameters as Meta’s LLaMA 2. Before the ascent of Falcon 180B, LLaMA 2 had held the mantle of the most capable open-source LLM, with 70 billion parameters trained on 2 trillion tokens.
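The comparison can be reproduced from the numbers quoted in this article. The short sketch below computes the parameter ratio, the training-token ratio, and tokens seen per parameter; the dictionary layout and function names are illustrative choices, and only the four input figures come from the text.

```python
# Figures as quoted in the article.
MODELS = {
    "Falcon 180B": {"params": 180e9, "tokens": 3.5e12},
    "LLaMA 2 70B": {"params": 70e9, "tokens": 2e12},
}


def ratio(field, numerator="Falcon 180B", denominator="LLaMA 2 70B"):
    """How many times larger one model's figure is than the other's."""
    return MODELS[numerator][field] / MODELS[denominator][field]


def tokens_per_param(name):
    """Training tokens seen per model parameter."""
    return MODELS[name]["tokens"] / MODELS[name]["params"]


print(f"parameter ratio: {ratio('params'):.2f}x")  # 2.57x
print(f"token ratio: {ratio('tokens'):.2f}x")      # 1.75x
for name in MODELS:
    print(f"{name}: {tokens_per_param(name):.1f} tokens per parameter")
```

The exact parameter ratio is about 2.57, which the article rounds to 2.5. Note that although Falcon 180B saw more tokens in total, it saw fewer tokens per parameter than LLaMA 2 by these figures (about 19.4 versus 28.6).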

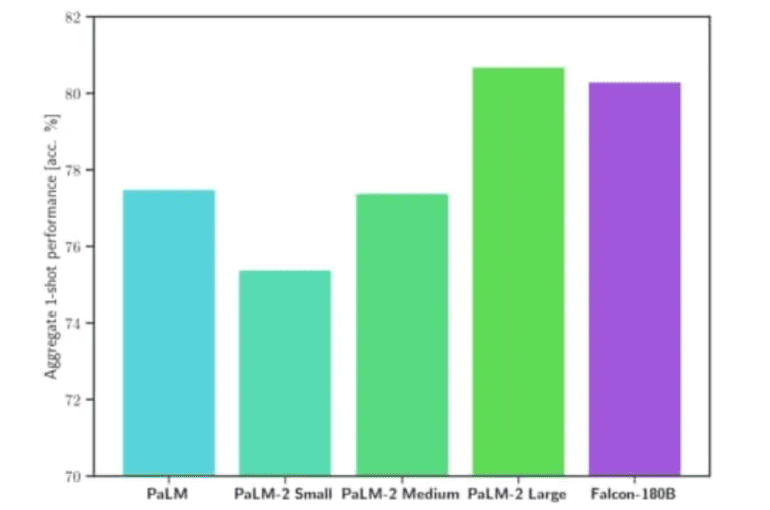

What truly sets Falcon 180B apart is its performance across various natural language processing (NLP) benchmarks. It tops the leaderboard for open-access models with a score of 68.74 points and nearly matches commercial giants like Google’s PaLM-2 on evaluations such as the HellaSwag benchmark.

Delving into specifics, Falcon 180B not only matches but often surpasses PaLM-2 Medium on widely recognized benchmarks, including HellaSwag, LAMBADA, WebQuestions, Winogrande, and more. Remarkably, it stands shoulder to shoulder with Google’s PaLM-2 Large, showcasing extraordinary prowess for an open-source model, even when compared to industry giants’ solutions.

In a direct comparison with ChatGPT, Falcon 180B emerges as a formidable contender, offering stronger capabilities than the free version while trailing the paid “Plus” service only slightly.

The Hugging Face AI community anticipates further refinements and enhancements to Falcon 180B from the broader community. As it stands, the model sits somewhere between GPT-3.5 and GPT-4, depending on the evaluation benchmark. The future holds exciting prospects as the AI community dives into finetuning and exploring the full potential of this open-source marvel.

The release of Falcon 180B symbolizes a monumental leap forward in the ever-evolving landscape of large language models. Beyond the mere expansion of parameters, techniques such as LoRAs, weight randomization, and Nvidia’s Perfusion have ushered in a new era of highly efficient training for these colossal AI models.

With Falcon 180B now readily available on Hugging Face, researchers and AI enthusiasts worldwide eagerly await the model’s evolution and its continued journey toward pushing the boundaries of natural language understanding. This impressive debut reaffirms the boundless potential of open-source AI, setting the stage for a future rich in innovation and collaboration.

Conclusion:

The emergence of Falcon 180B as a formidable open-source AI model with unprecedented scale and performance heralds a promising future for the market. Its ability to compete with commercial giants and the potential for further improvements through community collaboration underscores the continued evolution of AI technologies. This development reaffirms the growing demand for advanced natural language processing capabilities and signifies a shift towards more efficient AI model training techniques, driving innovation and competitiveness in the industry.