TL;DR:

- Oxford researchers propose Farm3D, an AI framework for generating articulated 3D animals for use in real-time applications.

- The framework leverages text-to-image generation and synthetic data to create high-quality 3D models.

- Farm3D focuses on creating a statistical model of an entire class of articulated 3D objects for AR/VR, gaming, and content creation.

- The framework trains a network to predict articulated 3D models from a single photograph, using synthetic data generated through a 2D diffusion model.

- Farm3D offers advantages such as curated training data, implicit virtual views, and increased adaptability by eliminating the need for real data.

- The network enables the efficient reconstruction of articulated 3D models from a single image, allowing for manipulation and animation.

- Farm3D’s approach generalizes to real images and has applications in studying and conserving animal behaviors.

- The framework incorporates Stable Diffusion for generating training data and extends the Score Distillation Sampling (SDS) loss for multi-view supervision.

- Farm3D demonstrates excellent performance, rivaling or surpassing baseline models, without requiring real image data.

Main AI News:

The rapid growth of generative AI has ushered in a new era of visual content creation, with techniques like DALL-E, Imagen, and Stable Diffusion generating striking images from textual prompts. This progress may not be limited to 2D data. Recent work such as DreamFusion has shown that a text-to-image generator can be harnessed to produce high-fidelity 3D models: despite never being trained on 3D data, the generator carries enough information to reconstruct intricate 3D shapes. In this article, we look at how researchers are extending text-to-image generation to learn articulated 3D models of whole object categories.

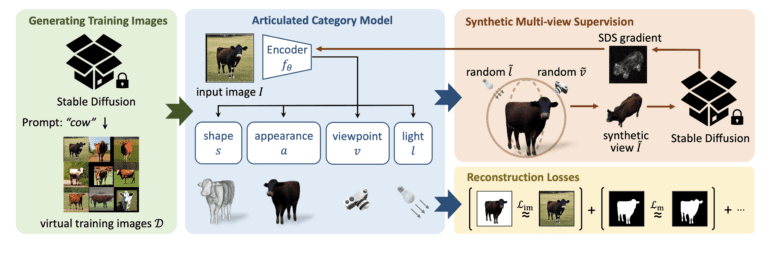

Rather than creating individual 3D assets, as DreamFusion does, the Oxford researchers seek to build a statistical model of an entire class of articulated 3D objects, such as cows, sheep, and horses. The model is meant to produce animatable 3D assets for augmented reality (AR), virtual reality (VR), gaming, and digital content creation, whether the input is a real image or a digitally created one. To this end, the researchers train a network that predicts the articulated 3D model of an object from a single photograph. In contrast to previous efforts that relied heavily on real-world data, they use synthetic data generated by a 2D diffusion model such as Stable Diffusion.

Farm3D joins an existing array of 3D generators such as DreamFusion, RealFusion, and Make-A-Video3D, which create static or dynamic 3D assets through test-time optimization at the cost of significant time per asset. Farm3D offers several advantages. First, the 2D image generator tends to produce accurate, clean examples of an object category, which effectively curates the training data and simplifies learning. Second, the generator implicitly provides virtual views of each object instance through distillation, which gives the model additional supervision. Finally, the approach increases adaptability by removing the need to gather, and possibly censor, real-world data.

At test time, the researchers’ network reconstructs an object from a single image in one feed-forward pass, yielding an articulated 3D model that can be easily manipulated, animated, or even relit, rather than a fixed 3D or 4D artifact. Notably, the reconstruction network generalizes to real images despite being trained solely on virtual input. This opens applications in analysis as well as synthesis, such as studying and conserving animal behaviors.
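To make "articulated model that can be animated" more concrete, here is a minimal sketch of linear blend skinning, a standard way to pose a mesh with bones. The article does not describe Farm3D's articulation mechanism, so treat this as an illustrative assumption; the toy 2D "leg", the weights, and all function names are hypothetical.

```python
import math

def rotate_about(point, pivot, angle):
    """Rotate a 2D point around a pivot by `angle` radians."""
    px, py = point[0] - pivot[0], point[1] - pivot[1]
    c, s = math.cos(angle), math.sin(angle)
    return (pivot[0] + c * px - s * py, pivot[1] + s * px + c * py)

def skin(vertices, weights, pivots, angles):
    """Linear blend skinning: each posed vertex is the weighted mix of its
    position under every bone's rotation.

    Simplification: bones rotate independently (no parent-child hierarchy).
    """
    posed = []
    for v, w in zip(vertices, weights):
        x = y = 0.0
        for w_b, pivot, angle in zip(w, pivots, angles):
            rx, ry = rotate_about(v, pivot, angle)
            x += w_b * rx
            y += w_b * ry
        posed.append((x, y))
    return posed

# Hypothetical toy "leg": three vertices along the x-axis, two bones.
vertices = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
weights = [(1.0, 0.0), (0.5, 0.5), (0.0, 1.0)]  # per-vertex weights over (bone 0, bone 1)
pivots = [(0.0, 0.0), (1.0, 0.0)]               # joint location of each bone
angles = [0.0, math.pi / 2]                     # bend only the second bone by 90 degrees

posed = skin(vertices, weights, pivots, angles)
```

Changing `angles` re-poses the same shape without re-running any reconstruction, which is the practical difference between an articulated model and a fixed 3D artifact.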

Farm3D is built upon two key technical innovations. First, the researchers show how Stable Diffusion can be used, through prompt engineering, to generate a large training dataset of generally clean images of a given object category, which facilitates the learning of articulated 3D models. Second, as an alternative to fitting a single radiance field model, they extend the Score Distillation Sampling (SDS) loss to obtain synthetic multi-view supervision. This extension enables the training of a photo-geometric autoencoder, in this case MagicPony, which decomposes the object into the factors that contribute to image formation: articulated shape, appearance, camera viewpoint, and illumination.
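The SDS idea can be illustrated with a toy example. In SDS, a rendered image is noised, a frozen diffusion model predicts the noise, and the difference between predicted and true noise is pushed back through the renderer. The sketch below is not Farm3D's implementation: the "renderer" is a trivial linear function, the "denoiser" is a hand-written stand-in rather than Stable Diffusion, the noise schedule and weighting are assumed, and the gradient updates per-instance parameters directly instead of autoencoder weights. All names are hypothetical.

```python
import random

def render(theta, view_gain):
    """Toy differentiable 'renderer': each pixel is a parameter scaled by a per-view gain."""
    return [view_gain * p for p in theta]

def toy_denoiser(x_t, alpha, sigma, target):
    """Stand-in for a frozen diffusion model's noise prediction.

    Assumes the model's data distribution is the single image `target`,
    for which the ideal noise estimate is (x_t - alpha * target) / sigma.
    """
    return [(xt - alpha * tg) / sigma for xt, tg in zip(x_t, target)]

def sds_grad(theta, view_gain, target, rng):
    """One SDS gradient estimate for a single virtual view."""
    t = rng.uniform(0.02, 0.98)               # random noise level
    alpha, sigma = (1 - t) ** 0.5, t ** 0.5   # variance-preserving schedule (assumed)
    x = render(theta, view_gain)
    eps = [rng.gauss(0.0, 1.0) for _ in x]
    x_t = [alpha * xi + sigma * e for xi, e in zip(x, eps)]
    eps_hat = toy_denoiser(x_t, alpha, sigma, target)
    weight = sigma ** 2                       # w(t), one possible weighting (assumed)
    # SDS skips the denoiser Jacobian:
    # grad = w(t) * (eps_hat - eps) * d(render)/d(theta), and d(render)/d(theta) = view_gain here.
    return [weight * (eh - e) * view_gain for eh, e in zip(eps_hat, eps)]

def fit(steps=500, lr=0.5, seed=0):
    """Average SDS gradients over several virtual views and descend."""
    rng = random.Random(seed)
    theta_star = [0.8, -0.3, 0.5]             # what the toy "diffusion prior" prefers
    theta = [0.0, 0.0, 0.0]
    view_gains = [0.5, 1.0, 1.5]              # hypothetical virtual viewpoints
    for _ in range(steps):
        total = [0.0] * len(theta)
        for g in view_gains:
            grad = sds_grad(theta, g, render(theta_star, g), rng)
            total = [a + b for a, b in zip(total, grad)]
        theta = [p - lr * s / len(view_gains) for p, s in zip(theta, total)]
    return theta, theta_star

theta, theta_star = fit()
```

In this toy, the averaged multi-view gradient pulls `theta` toward the parameters the stand-in prior prefers. In the setting the article describes, the same signal would instead update the weights of the photo-geometric autoencoder.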

The synthetic views generated by the photo-geometric autoencoder are fed into the SDS loss, which yields gradient updates that are back-propagated to the autoencoder’s learnable parameters. The researchers evaluate Farm3D qualitatively on its 3D generation and repair capabilities. Because the model can reconstruct as well as generate, it can also be assessed quantitatively on analytical tasks such as semantic keypoint transfer. Despite using no real images for training, Farm3D performs comparably to, or even better than, various baseline models, while saving the time typically spent on data gathering and curation.
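For readers unfamiliar with semantic keypoint transfer, the sketch below shows one common way such an evaluation can be set up: each annotated keypoint in a source image is snapped to the nearest projected mesh vertex, the same vertex is looked up in the target image's reconstruction, and accuracy is scored with PCK (Percentage of Correct Keypoints). The article does not state the exact protocol or metric, so the nearest-vertex rule, the PCK threshold, the omission of visibility handling, and all coordinates here are assumptions.

```python
import math

def nearest_index(point, candidates):
    """Index of the candidate 2D point closest to `point`."""
    return min(range(len(candidates)), key=lambda i: math.dist(point, candidates[i]))

def transfer_keypoints(src_kps, src_verts_2d, tgt_verts_2d):
    """Map each source keypoint to its nearest projected mesh vertex,
    then read off where that same vertex lands in the target image."""
    return [tgt_verts_2d[nearest_index(kp, src_verts_2d)] for kp in src_kps]

def pck(pred_kps, gt_kps, bbox_size, alpha=0.1):
    """Fraction of predictions within alpha * bbox_size of the ground truth."""
    hits = sum(math.dist(p, g) <= alpha * bbox_size for p, g in zip(pred_kps, gt_kps))
    return hits / len(gt_kps)

# Hypothetical projected vertices of the same mesh in two images.
src_verts_2d = [(10.0, 10.0), (50.0, 10.0), (50.0, 40.0)]
tgt_verts_2d = [(20.0, 15.0), (60.0, 20.0), (58.0, 52.0)]

src_kps = [(12.0, 11.0), (49.0, 38.0)]   # annotated keypoints in the source image
gt_kps = [(22.0, 15.0), (80.0, 52.0)]    # ground-truth locations in the target image

pred_kps = transfer_keypoints(src_kps, src_verts_2d, tgt_verts_2d)
score = pck(pred_kps, gt_kps, bbox_size=100.0)
```

Because the transfer runs through a shared mesh, a metric of this kind measures how consistently the reconstruction places the same body part across different images.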

Conclusion:

Farm3D represents a notable advance in AI-driven 3D content generation. Its ability to produce animatable, articulated 3D models quickly, in a single feed-forward pass, has implications for several markets. In gaming, Farm3D could speed up character and asset creation and add realism and interactivity to virtual worlds. In AR/VR experiences, it could make it easier to integrate lifelike 3D animals, improving immersion and user engagement. In content creation, it offers new avenues for visual storytelling and digital media production. Overall, Farm3D has the potential to shape these markets by giving creators efficient, high-quality 3D modeling capabilities.