TL;DR:

- Five Princeton-affiliated luminaries are acknowledged in the 2023 TIME100 AI list.

- The honorees advocate for AI fairness and criticize biases within the field.

- Arvind Narayanan exposes flaws in predictive AI, highlighting human biases.

- Narayanan and Sayash Kapoor co-authored a book on “AI snake oil.”

- Narayanan leads the Princeton Web Transparency and Accountability Project.

- Diverse projects at Princeton address AI harms and biases.

- Dr. Fei-Fei Li promotes diversity in AI and calls for responsible government funding.

- Eric Schmidt voices concerns about AI’s impact on political elections.

- Dario Amodei and Daniela Amodei co-founded Anthropic, pioneering “Constitutional AI.”

- These leaders exemplify the fusion of academia and ethical commitment.

Main AI News:

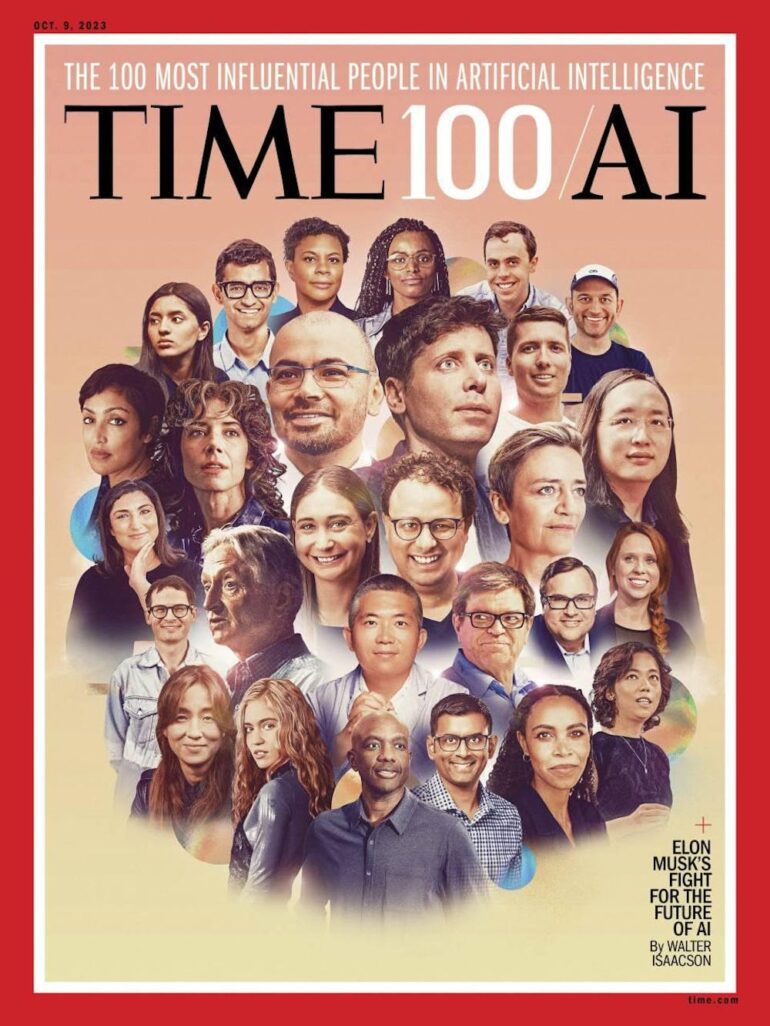

In the ever-evolving landscape of artificial intelligence (AI), five distinguished individuals with ties to Princeton University have taken center stage, as recognized by TIME Magazine’s esteemed 2023 TIME100 Artificial Intelligence list. These Princetonians are not only trailblazers in the field but also fervent advocates for AI fairness, highlighting potential biases and risks and the imperative of aligning AI with human interests.

Among this illustrious group, we find University computer science professor Arvind Narayanan and Sayash Kapoor, a graduate student at the Center for Information Technology Policy (CITP), along with three University alumni: Dario Amodei GS ’11, CEO of Anthropic; Dr. Fei-Fei Li ’99, a distinguished Stanford computer science professor; and Eric Schmidt ’76, former CEO of Google. These individuals stand among the top 100 most influential figures shaping AI’s trajectory and the discourse surrounding it.

Arvind Narayanan and Sayash Kapoor, both affiliated with the University’s CITP, have garnered acclaim for their unflinching critique of what they term “AI snake oil.” In 2019, Narayanan delivered a viral lecture titled “How to recognize AI snake oil,” exposing the inherent flaws in many predictive AI technologies. He argued that these technologies often perpetuate human biases rather than upholding the ideals of impartiality, with a particular focus on AI’s role in evaluating job candidates.

Kapoor, a Ph.D. student under Narayanan’s mentorship, is collaborating with the professor on a forthcoming book about the phenomenon of “AI snake oil,” set to be published in 2024. Additionally, they maintain a popular Substack page under the same banner.

Narayanan spearheads the Princeton Web Transparency and Accountability Project, a vital initiative aimed at understanding the vast troves of user data that companies collect and how they use it. He shared his insights with the generative AI working group organized by the office of Dean of the College Jill Dolan, and the group’s findings are currently under review by the Provost.

Narayanan emphasized, “Predictive AI is fundamentally dubious. The challenges in predicting the future are intrinsic and unlikely to be completely resolved. While not all predictive AI is ‘snake oil,’ it becomes problematic when marketed in ways that obscure its limitations.”

Furthermore, Narayanan elucidated the diverse ways in which Princeton scholars are actively addressing AI’s associated perils, such as biases and existential risks. Professor Aleksandra Korolova’s team, for instance, scrutinizes how ad targeting algorithms can perpetuate discrimination and explores potential remedies. Postdoctoral fellow Shazeda Ahmed leads an ethnographic exploration of the insular AI safety community, which is shaping influential arguments and policies regarding AI and existential risks.

“Professor Olga Russakovsky’s team is at the forefront of building advanced computer vision algorithms while diligently mitigating biases. Sayash and I have delved into the impact of generative AI on social media. These are just glimpses of the ongoing projects,” Narayanan added.

In addition to Princeton’s representation, TIME’s prestigious list includes notable figures like Canadian singer and songwriter Grimes and OpenAI CEO Sam Altman. The list aims to spotlight industry leaders driving the AI revolution, individuals who grapple with profound ethical questions surrounding AI’s applications, and innovators worldwide leveraging AI to address societal challenges.

Princeton’s legacy in AI innovation is further upheld by Dr. Fei-Fei Li, an alumna who pursued graduate studies in computer science at Caltech after her undergraduate years at the University. Li’s remarkable journey includes co-founding AI4ALL, a nonprofit dedicated to promoting diversity and inclusion in AI through initiatives like high school camps. She is renowned for her pioneering work in highly accurate AI image-recognition systems and is now a vocal advocate for substantial government funding to advance AI technologies safely and responsibly.

Reflecting on the parallels between AI and the challenges faced in the realm of atomic fission, Li underscores the profound responsibility scientists bear as global citizens. “We’re all citizens of the world,” she states in her TIME100 interview.

Eric Schmidt, famous for his tenure as Google’s CEO from 2001 to 2011, expresses concerns akin to Li’s. Schmidt, now the co-founder of Schmidt Futures, worries that AI’s rapid societal transformation lacks adequate regulatory safeguards, leaving room for the spread of misinformation on social media platforms and potential threats to the integrity of political elections.

With a vision to align AI technologies with human values, Dario Amodei and his sister, Daniela Amodei, have earned their spot on TIME’s list. The siblings co-founded Anthropic, a globally recognized AI lab known for its pioneering work in “Constitutional AI.” This innovative approach sets explicit principles for AI systems to follow, promoting alignment with human values.

Dario Amodei’s journey at the University saw him completing his Ph.D. studies in physics under the guidance of neuroscience professor Michael Berry and physics professor William Bialek. Prior to his involvement with Anthropic, Amodei led the GPT-2 and GPT-3 teams at OpenAI, an organization that faced criticism for compromising its founding commitment to transparency due to external funding pressures.

Conclusion:

Princeton’s AI luminaries, featured in TIME100, underscore the importance of AI fairness and responsible innovation. Their work reflects a growing market demand for ethical AI solutions and regulatory frameworks, emphasizing the need for transparency and diversity to navigate the complex landscape of artificial intelligence. Businesses and industries must prioritize ethics and fairness in AI development to meet evolving societal expectations and regulatory standards.