TL;DR:

- AI’s rapid progress has transformed many industries, with neural networks matching human-level performance on some tasks.

- Neural networks are vulnerable to adversarial inputs: small, often imperceptible alterations to input data can cause confident misclassifications.

- Injecting controlled noise into initial layers shows promise in enhancing network resilience.

- Attackers have shifted focus to inner layers, exploiting knowledge of the network’s inner workings.

- Researchers from The University of Tokyo inserted random noise into inner layers and found that it made networks resilient to an inner-layer attack they had devised.

- The approach addresses a specific type of attack, and the battle between attack and defense in neural networks continues.

Main AI News:

The remarkable progress in Artificial Intelligence (AI) has produced transformative applications in fields ranging from computer vision to audio recognition. Neural networks in particular have reshaped industries, in some tasks achieving performance that rivals human capabilities. However, beneath these successes lies a pressing concern: the vulnerability of neural networks to adversarial inputs.

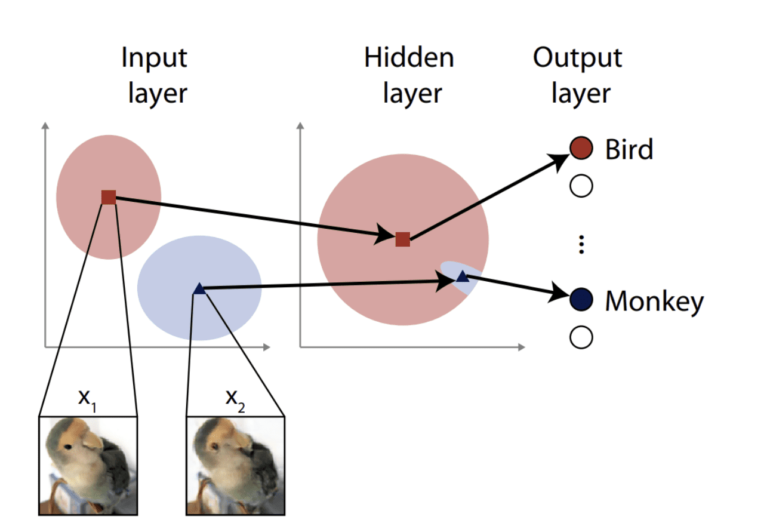

The Achilles’ heel of deep learning is its susceptibility to subtle alterations in input data, which can lead a neural network astray. Changes too small for a human to notice can cause a network to make glaringly incorrect predictions, often with unwarranted confidence. This vulnerability raises serious doubts about the reliability of AI in safety-critical applications such as autonomous vehicles and medical diagnostics.

In response, researchers have searched for defenses. One intriguing strategy is to introduce controlled noise into the initial layers of a neural network. The aim is to make the network more resilient to minor variations in input data and to keep it from fixating on trivial details. By pushing the network to learn more general, robust features, noise injection may reduce its susceptibility to adversarial attacks and unexpected input variations, making neural networks more dependable in real-world scenarios.
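To make the idea concrete, here is a minimal Python sketch of noise injection at the input, the simplest form of the "initial layer" strategy: each training example is perturbed with Gaussian noise while its label is kept unchanged. The Gaussian distribution, the `sigma` value, and the function names are illustrative assumptions, not details taken from the research described here.

```python
import random

def add_gaussian_noise(x, sigma, rng):
    """Return a new input vector with i.i.d. Gaussian noise N(0, sigma^2) added to each element."""
    return [xi + rng.gauss(0.0, sigma) for xi in x]

def noisy_batch(batch, sigma, seed=0):
    """Perturb every input in a batch of (input, label) pairs; labels are left unchanged.

    Training on such noisy copies is one way to discourage a model from
    relying on tiny, fragile details of its inputs.
    """
    rng = random.Random(seed)  # seeded so the example is reproducible
    return [(add_gaussian_noise(x, sigma, rng), y) for x, y in batch]

# Usage: two toy examples with 2-dimensional inputs (hypothetical data).
batch = [([0.2, 0.8], 1), ([0.9, 0.1], 0)]
noisy = noisy_batch(batch, sigma=0.1, seed=42)
```

A larger `sigma` forces the model to tolerate bigger input variations, at the risk of blurring away details it genuinely needs; the right scale is an empirical trade-off.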

Yet a new challenge emerges as attackers shift their focus to the inner layers of neural networks. Rather than making subtle alterations, these attacks exploit detailed knowledge of the network’s inner workings, supplying inputs that may deviate significantly from what the network expects yet still produce the attacker’s desired result through specific artifacts.
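Inner-layer attacks are often framed as an optimization problem: search for an input whose hidden-layer activations match those of a chosen target, no matter how far that input drifts from natural data. The sketch below assumes a hypothetical one-layer ReLU feature extractor with known weights `W` and runs plain gradient descent on the squared distance between features. It illustrates the general idea only; it is not the attack the University of Tokyo team built.

```python
def features(W, x):
    """Hypothetical hidden layer: h = ReLU(W x)."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in W]

def feature_space_attack(W, x_start, h_target, lr=0.1, steps=200):
    """Gradient descent on L(x) = sum_j (h_j(x) - h_target_j)^2.

    The attacker needs the weights W (white-box access). Nothing keeps x
    close to x_start, so the result may look nothing like a natural input.
    """
    x = list(x_start)
    for _ in range(steps):
        pre = [sum(w * xi for w, xi in zip(row, x)) for row in W]  # pre-activations W x
        # dL/d(pre_j): 2 * (h_j - target_j), zeroed where the ReLU is inactive
        delta = [2.0 * (max(0.0, p) - t) * (1.0 if p > 0 else 0.0)
                 for p, t in zip(pre, h_target)]
        # Chain rule back to the input: grad = W^T delta
        grad = [sum(delta[j] * W[j][i] for j in range(len(W))) for i in range(len(x))]
        x = [xi - lr * g for xi, g in zip(x, grad)]
    return x

# Usage with toy weights (identity matrix, chosen only for illustration).
W = [[1.0, 0.0], [0.0, 1.0]]
x_adv = feature_space_attack(W, x_start=[0.5, 0.5], h_target=[1.0, 0.2])
```

Because the objective only cares about hidden activations, the optimized input can be far from the starting one, mirroring the point that these attacks need not be subtle.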

Defending against these inner-layer attacks has proven harder. A widely held belief that adding random noise to the inner layers would degrade a network’s performance under normal conditions posed a significant hurdle. Researchers from The University of Tokyo, however, have challenged this assumption.

The research team first devised an adversarial attack that targets the inner, hidden layers and causes input images to be misclassified. They then used this attack as a test bed for their technique: inserting random noise into the network’s inner layers. This seemingly simple modification made the neural network resilient to the attack. The result suggests that injecting noise into inner layers could make future neural networks more adaptable and better able to defend themselves.
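A minimal sketch of the defense idea, assuming a hypothetical two-layer ReLU network: Gaussian noise is added to the hidden (inner-layer) activations on every forward pass, and the final prediction averages the output scores over several noisy passes. The injection point, the noise scale `sigma`, and the averaging step are illustrative choices, not the configuration used in the study.

```python
import random

def relu(v):
    return [max(0.0, a) for a in v]

def matvec(W, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def noisy_forward(W1, W2, x, sigma, rng):
    """Two-layer network with Gaussian noise injected into the hidden activations."""
    h = relu(matvec(W1, x))
    h_noisy = [a + rng.gauss(0.0, sigma) for a in h]  # feature-space noise
    return matvec(W2, h_noisy)  # output scores (logits), one per class

def predict(W1, W2, x, sigma, n_samples=32, seed=0):
    """Average logits over several noisy passes and return the winning class index."""
    rng = random.Random(seed)
    totals = [0.0] * len(W2)
    for _ in range(n_samples):
        for k, z in enumerate(noisy_forward(W1, W2, x, sigma, rng)):
            totals[k] += z
    return max(range(len(totals)), key=lambda k: totals[k])

# Usage with toy weights (hypothetical): class 0 should win for this input.
W1 = [[1.0, 0.0], [0.0, 1.0]]
W2 = [[1.0, 0.0], [0.0, 1.0]]
label = predict(W1, W2, [2.0, 0.0], sigma=0.1)
```

One intuition for why this can help: with a modest `sigma`, averaging keeps ordinary predictions stable, while an attack that depends on reproducing precise hidden activations now faces activations that change on every pass.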

While this approach shows promise, it addresses a specific type of attack. The researchers caution that future attackers may devise new methods to bypass the feature-space noise considered in their study. The ongoing battle between attack and defense in neural networks demands continual innovation to safeguard the systems we rely on daily.

Conclusion:

Injecting noise into the inner layers of neural networks is a notable advance in safeguarding AI systems against adversarial attacks. If it holds up against other attack types, it could strengthen the market’s confidence in the reliability of neural networks for critical applications, encouraging further investment and innovation in AI technologies.