- Federated Learning (FL) enables collaborative model training without centralizing data.

- Vertical FL (VFL) faces privacy risks, especially from label inference attacks.

- KD𝑘 combines Knowledge Distillation with 𝑘-anonymity to protect VFL.

- KD𝑘 reduces label inference attack accuracy across the three attack types described by Fu et al.

- Research validates KD𝑘’s effectiveness through extensive experimentation.

Main AI News:

In the fast-moving landscape of modern technology, Federated Learning (FL) stands out as a game-changer: it enables collaborative model training without centralizing data. This decentralized approach is valuable for organizations and individuals who want to improve their models while safeguarding sensitive data. FL keeps data local and performs model updates within each participant's own environment, so raw data never has to leave its owner, and it can accommodate diverse datasets.
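To make "keep data local, share only model updates" concrete, here is a minimal sketch of one common aggregation scheme, federated averaging (FedAvg), which the article itself does not name. It assumes a toy one-feature linear model, two hypothetical clients with noiseless data generated from y = 3x + 1, and made-up function names (`local_update`, `fed_avg`, `run_rounds`). Note that this is the horizontal setting, where clients hold different samples with the same features; real deployments add client sampling, secure aggregation, and communication handling.

```python
def local_update(weights, data, lr=0.3, epochs=5):
    """Run a few epochs of gradient descent on a linear model y = w*x + b,
    using only this client's local (x, y) pairs."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        # Mean-squared-error gradients, computed on local data only
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b)


def fed_avg(client_weights, client_sizes):
    """Server step: average client models, weighted by local dataset size."""
    total = sum(client_sizes)
    w = sum(cw[0] * s for cw, s in zip(client_weights, client_sizes)) / total
    b = sum(cw[1] * s for cw, s in zip(client_weights, client_sizes)) / total
    return (w, b)


def run_rounds(clients, rounds=50):
    """Alternate local training and server-side averaging."""
    global_model = (0.0, 0.0)
    for _ in range(rounds):
        # Each client trains from the current global model; only the
        # resulting parameters (never the raw data) reach the server.
        updates = [local_update(global_model, data) for data in clients]
        global_model = fed_avg(updates, [len(d) for d in clients])
    return global_model


# Hypothetical clients: each holds its own (x, y) samples from y = 3x + 1
clients = [
    [(x / 10, 3 * x / 10 + 1) for x in range(0, 11, 2)],
    [(x / 10, 3 * x / 10 + 1) for x in range(1, 10, 2)],
]
w, b = run_rounds(clients)
print(f"w={w:.3f}, b={b:.3f}")
```

Weighting by dataset size means a client with more samples pulls the global model proportionally harder, which is the usual FedAvg choice.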

However, FL is not without vulnerabilities. One of the most pressing is indirect information leakage, particularly during the model aggregation phase. FL also comes in several variants, each tailored to specific scenarios: Horizontal FL (HFL), Vertical FL (VFL), and Federated Transfer Learning (FTL).

Vertical FL (VFL) involves non-competing entities whose data is vertically partitioned: they share overlapping samples but hold different features for those samples. While VFL gives each sample a richer set of attributes and can therefore produce more accurate models, it also raises distinct privacy challenges, notably protecting labels against label inference attacks.
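A tiny illustration of vertical partitioning, using hypothetical parties, IDs, and columns: both parties index records by a shared sample ID, but each holds different features, and only the active party holds the label. The plain set intersection stands in for sample alignment, which real VFL systems typically perform with private set intersection so that neither side reveals its non-overlapping IDs; the version here is not private.

```python
def align_samples(party_a, party_b):
    """Return the sorted sample IDs held by both parties (the overlap).
    NOTE: a plain intersection, not a privacy-preserving protocol."""
    return sorted(set(party_a) & set(party_b))


def joint_view(ids, *parties):
    """For illustration only: the combined row each shared sample would have
    if the vertically split columns were concatenated. In real VFL this
    table is never materialized in one place."""
    rows = {}
    for sid in ids:
        row = {}
        for party in parties:
            row.update(party[sid])
        rows[sid] = row
    return rows


# Hypothetical active party: holds some features plus the label ("defaulted")
active = {
    "u1": {"income": 52, "defaulted": 0},
    "u2": {"income": 31, "defaulted": 1},
    "u3": {"income": 77, "defaulted": 0},
}
# Hypothetical passive party: different features, no labels
passive = {
    "u2": {"purchases": 14},
    "u3": {"purchases": 3},
    "u4": {"purchases": 9},
}

shared = align_samples(active, passive)
print(shared)
print(joint_view(shared, active, passive))
```

The label column lives only with the active party, which is exactly what a label inference attack by a passive party tries to recover.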

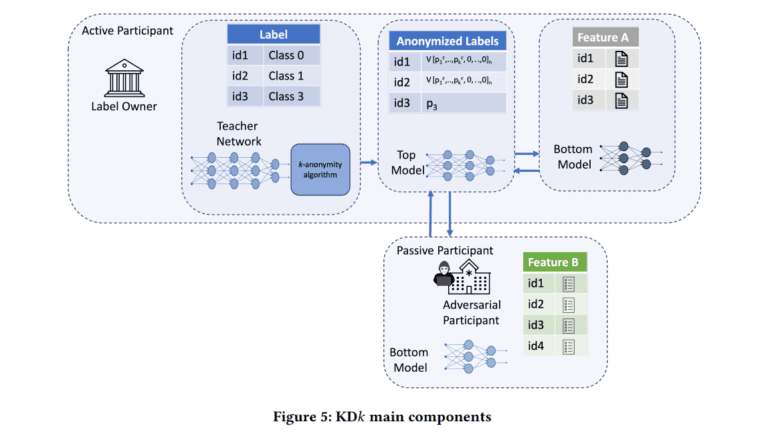

To tackle this issue, researchers at the University of Pavia have developed a novel defense mechanism called KD𝑘 (Knowledge Distillation and 𝑘-anonymity). KD𝑘 combines Knowledge Distillation (KD) with an obfuscation algorithm to strengthen privacy protection in VFL scenarios.

Knowledge Distillation (KD) is a model compression technique that transfers knowledge from a larger teacher model to a smaller student model by training the student on the teacher's softer probability distributions (soft labels) rather than on hard labels. In the KD𝑘 framework, the active participant (the party that holds the labels) uses a teacher network to generate soft labels, which are then processed with 𝑘-anonymity to introduce uncertainty: the 𝑘 labels with the highest probabilities are grouped together, which makes it significantly harder for an attacker to infer the most probable label.
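The sketch below illustrates the two steps just described under stated assumptions. Teacher logits are turned into soft labels with a temperature-scaled softmax, and a hypothetical `k_anonymize` rule then replaces the 𝑘 largest probabilities with their mean, so an attacker reading the output can narrow the label down only to a set of 𝑘 tied candidates. The averaging rule, the temperature value, and all function names are assumptions for illustration; the exact obfuscation used by KD𝑘 may differ. `soft_cross_entropy` shows how a student would be trained against the obfuscated soft labels.

```python
import math


def softmax_with_temperature(logits, temperature=1.0):
    """Soft labels from logits; a higher temperature gives a flatter distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def k_anonymize(probs, k):
    """Hypothetical grouping rule: give the k most probable classes the same
    probability (their mean) so none stands out; other entries are unchanged.
    The total probability mass is preserved."""
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    shared = sum(probs[i] for i in top) / k
    out = list(probs)
    for i in top:
        out[i] = shared
    return out


def attacker_candidates(probs, tol=1e-12):
    """Classes an attacker reading these probabilities would rank as most likely."""
    best = max(probs)
    return [i for i, p in enumerate(probs) if best - p <= tol]


def soft_cross_entropy(student_logits, soft_targets, temperature=1.0):
    """Distillation loss: cross-entropy of the student's tempered softmax
    against the (obfuscated) soft targets."""
    q = softmax_with_temperature(student_logits, temperature)
    return -sum(t * math.log(qi) for t, qi in zip(soft_targets, q))


teacher_logits = [4.0, 3.0, 0.5, -1.0]  # hypothetical teacher output
soft = softmax_with_temperature(teacher_logits, temperature=2.0)
anon = k_anonymize(soft, k=2)
print(attacker_candidates(soft), attacker_candidates(anon))
print(soft_cross_entropy([1.0, 0.5, 0.0, 0.0], anon, temperature=2.0))
```

With `k=2`, an attacker who simply reads the largest probability goes from a single candidate class to a two-way tie; a larger 𝑘 widens the tie, presumably at some cost to how much information the soft labels carry for the student.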

Experimental results show a marked reduction in the accuracy of all three types of label inference attack outlined by Fu et al., underscoring the effectiveness of the KD𝑘 defense mechanism. The work contributes a robust countermeasure against label inference attacks, validated through extensive experiments, and includes a comprehensive comparison with existing defense strategies in which KD𝑘 shows superior performance.

Conclusion:

The introduction of the KD𝑘 defense framework marks a significant advancement in securing Vertical Federated Learning (VFL) environments against label inference attacks. This innovation not only addresses pressing privacy concerns but also enhances the viability of collaborative model training across disparate entities. As organizations continue to prioritize data security and privacy, the adoption of robust defense mechanisms like KD𝑘 is poised to reshape the landscape of federated learning applications, fostering greater trust and collaboration in data-driven endeavors.