- Large Language Models (LLMs) face challenges in processing complex graph data despite advancements in AI.

- G-Retriever integrates Graph Neural Networks (GNNs) and Retrieval-augmented generation (RAG) to handle real-world graph structures effectively.

- The architecture emphasizes parameter-efficient fine-tuning (PEFT) techniques for enhanced model robustness.

- Researchers from multiple institutions collaborate on G-Retriever, focusing on scalable graph question-answering solutions.

- The framework outperforms baselines on several datasets, reduces token and node counts for faster training, and cuts hallucination by 54%.

Main AI News:

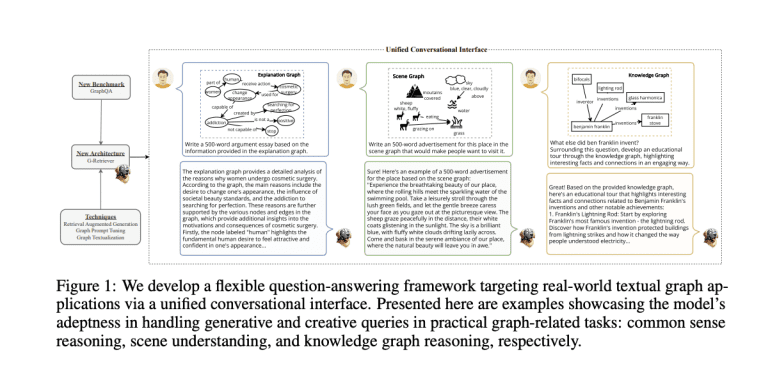

Advances in artificial intelligence have propelled Large Language Models (LLMs) to the forefront, yet handling complex structured data such as graphs remains a challenge for them. Our interconnected world generates vast amounts of graph-structured data, from the Web and e-commerce systems to knowledge graphs, all of which are natural candidates for LLM-centric methods. Earlier efforts to integrate Graph Neural Networks (GNNs) with LLMs have typically focused on basic graph tasks or simple queries on small graphs. The research covered here aims to develop a flexible framework for answering complex questions about real-world graphs, giving users a unified conversational interface.

The work builds on earlier efforts to combine graph techniques with LLMs, which span general graph models, multi-modal architectures, and practical applications such as fundamental graph reasoning, node classification, and graph classification/regression. Retrieval-augmented generation (RAG) shows promise in mitigating inaccuracies in LLMs and enhancing reliability, although its adaptation to general graph tasks remains underexplored beyond knowledge graphs. Parameter-efficient fine-tuning (PEFT) techniques have refined LLMs, particularly multimodal models, yet their application to graph-specific LLMs is still nascent.

Researchers from the National University of Singapore, University of Notre Dame, Loyola Marymount University, New York University, and Meta AI introduce G-Retriever, an architecture tailored for GraphQA. By combining GNNs, LLMs, and RAG, G-Retriever allows efficient fine-tuning while preserving the capabilities of the pre-trained LLM. The framework addresses hallucination by retrieving graph data directly, and it scales to graphs larger than the LLM’s context window because only a retrieved subgraph is passed to the model. G-Retriever adapts RAG to graphs by framing subgraph retrieval as a Prize-Collecting Steiner Tree (PCST) optimization problem; returning the retrieved subgraph also makes the result more interpretable.
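To make the PCST framing concrete, here is a minimal sketch on a toy graph. It is not G-Retriever's solver: it assumes prizes on nodes only, a single uniform edge cost, and an exhaustive search that is only feasible for a handful of nodes. The names `best_subgraph`, `toy_edges`, and `toy_prizes` are hypothetical and chosen for illustration. The idea it shows is the trade-off at the heart of PCST: collect as much prize as possible while paying for every edge needed to keep the selection connected.

```python
from itertools import combinations


def is_connected(nodes, edges):
    """Return True if `nodes` induce a connected subgraph of the undirected graph."""
    nodes = set(nodes)
    if not nodes:
        return False
    adjacency = {n: set() for n in nodes}
    for u, v in edges:
        if u in nodes and v in nodes:
            adjacency[u].add(v)
            adjacency[v].add(u)
    start = next(iter(nodes))
    seen, stack = {start}, [start]
    while stack:
        current = stack.pop()
        for neighbour in adjacency[current]:
            if neighbour not in seen:
                seen.add(neighbour)
                stack.append(neighbour)
    return seen == nodes


def best_subgraph(prizes, edges, edge_cost):
    """Exhaustively search for the connected node set with the highest net value.

    Assumption: with a uniform edge cost, a connected set of m nodes can be
    linked by a spanning tree of m - 1 edges, so it costs edge_cost * (m - 1).
    """
    best_nodes, best_score = None, float("-inf")
    all_nodes = sorted(prizes)
    for size in range(1, len(all_nodes) + 1):
        for subset in combinations(all_nodes, size):
            if not is_connected(subset, edges):
                continue
            score = sum(prizes[n] for n in subset) - edge_cost * (size - 1)
            if score > best_score:
                best_nodes, best_score = set(subset), score
    return best_nodes, best_score


# Toy graph (hypothetical): a - b - c - d, plus e attached to a.
toy_edges = [("a", "b"), ("b", "c"), ("c", "d"), ("a", "e")]
# Only a and c are relevant to the query; the other nodes carry no prize.
toy_prizes = {"a": 3, "b": 0, "c": 2, "d": 0, "e": 0}

chosen, score = best_subgraph(toy_prizes, toy_edges, edge_cost=0.5)
print(sorted(chosen), score)  # ['a', 'b', 'c'] 4.0
```

Note that node `b` is selected even though its prize is zero: it is the cheapest way to connect the two relevant nodes, while `d` and `e` are left out because they add cost without adding prize. Raising `edge_cost` shrinks the result, which is one way such a formulation can keep the retrieved subgraph manageable.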

G-Retriever’s architecture unfolds through four key phases: indexing, retrieval, subgraph construction, and generation. In the indexing phase, node and graph embeddings are generated using a pre-trained language model and stored for efficient retrieval. The retrieval phase uses k-nearest neighbors to identify the nodes and edges most relevant to a query. Subgraph construction applies the Prize-Collecting Steiner Tree algorithm to form a relevant subgraph of manageable size. The generation phase combines three pieces: a Graph Attention Network that encodes the subgraph, a projection layer that aligns the resulting graph token with the LLM’s vector space, and a text embedder that processes a textualized version of the subgraph. Finally, the LLM generates an answer through graph prompt tuning, which combines the graph token with the textual embeddings.
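The retrieval and textualization steps described above can be sketched in a few lines of plain Python. This is an illustration under stated assumptions, not the authors' code: the three-dimensional vectors stand in for language-model embeddings, cosine similarity is assumed as the nearest-neighbor metric, the rank-based prize scheme in `rank_prizes` is hypothetical, and the comma-separated output of `textualize` is just one possible textual format. The PCST step and the graph encoder are omitted.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def top_k(query_vec, index, k):
    """Return the k keys of `index` most similar to the query, best first."""
    ranked = sorted(index, key=lambda key: cosine(query_vec, index[key]), reverse=True)
    return ranked[:k]


def rank_prizes(ranked_keys):
    """Hypothetical prize scheme: the best match gets k, the next k - 1, and so on."""
    k = len(ranked_keys)
    return {key: k - i for i, key in enumerate(ranked_keys)}


def textualize(node_ids, edges):
    """Flatten a subgraph into text, keeping only edges between selected nodes."""
    selected = set(node_ids)
    lines = ["node_id"]
    lines += list(node_ids)
    lines.append("src,relation,dst")
    lines += [f"{s},{r},{d}" for s, r, d in edges if s in selected and d in selected]
    return "\n".join(lines)


# Indexing (toy): hand-written vectors stand in for stored node embeddings.
node_index = {
    "alice": [1.0, 0.0, 0.0],
    "bob": [0.9, 0.1, 0.0],
    "paris": [0.0, 1.0, 0.0],
    "pizza": [0.0, 0.0, 1.0],
}
graph_edges = [("alice", "knows", "bob"), ("alice", "visited", "paris")]

# Retrieval: embed the query (here a fixed vector) and take the 2 nearest nodes.
query_vec = [1.0, 0.0, 0.0]
retrieved = top_k(query_vec, node_index, k=2)
prizes = rank_prizes(retrieved)

# Generation input: the textualized subgraph would be placed in the LLM prompt.
print(textualize(retrieved, graph_edges))
```

In a full pipeline, the prizes would feed the subgraph construction step, and the textualized subgraph would be embedded together with the question before being combined with the projected graph token.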

G-Retriever surpasses baseline models across diverse datasets in the inference-only setting and improves further with prompt tuning and LoRA fine-tuning. Retrieval reduces the number of tokens and nodes the model has to process, which shortens training time, and hallucination rates fall by 54% compared to baselines. A comprehensive ablation study indicates that every component matters, particularly the graph encoder and the textualized graph. Further experiments show that G-Retriever is robust to the choice of graph encoder and scales with larger LLMs in graph question-answering tasks.

Conclusion:

G-Retriever’s innovative integration of GNNs, LLMs, and RAG represents a significant leap forward in graph question-answering capabilities. Its demonstrated efficiency and reliability underscore its potential to reshape how AI interacts with complex data structures, offering promising applications in diverse industries reliant on data-driven insights.