- Galileo unveils Luna Evaluation Foundation Models (EFMs) for assessing Large Language Models (LLMs) like GPT-4o and Gemini Pro.

- Luna EFMs are fine-tuned to detect issues such as hallucinations, data leaks, and contextual errors.

- Galileo says Luna EFMs are faster, cheaper, and more accurate than traditional evaluation methods such as human review or GPT-4-based evaluation.

- Luna EFMs outperform GPT-3.5, delivering results in milliseconds at evaluation costs up to 30 times lower.

- Provides customizable evaluations and detailed explanations for improved operational efficiency.

- Early adopters, including Fortune 10 banks and Fortune 50 companies, endorse Luna EFMs.

Main AI News:

Galileo Technologies Inc., a company focused on AI accuracy assessment, has unveiled the Luna Evaluation Foundation Models (EFMs), a suite of purpose-built evaluation models. The EFMs are designed to assess the output of prominent Large Language Models (LLMs) such as OpenAI’s GPT-4o and Google LLC’s Gemini Pro.

Luna EFMs are Galileo’s answer to a growing trend in the AI industry: using AI to evaluate AI. In recent years, a surge of research has explored using models such as GPT-4 to evaluate the outputs of other LLMs, with promising results.

Recognizing the need for dedicated evaluation tools, Galileo has fine-tuned each Luna EFM for a specific evaluation task. These tasks range from identifying “hallucinations” (instances where AI systems fabricate responses) to detecting data leaks, contextual errors, and malicious prompts.

Galileo positions Luna EFMs as a major step forward in AI model assessment. Compared with conventional methods such as human evaluation or reliance on GPT-4, the company says Luna EFMs are faster, cheaper, and more accurate, allowing businesses to deploy generative AI chatbots at scale with greater confidence.

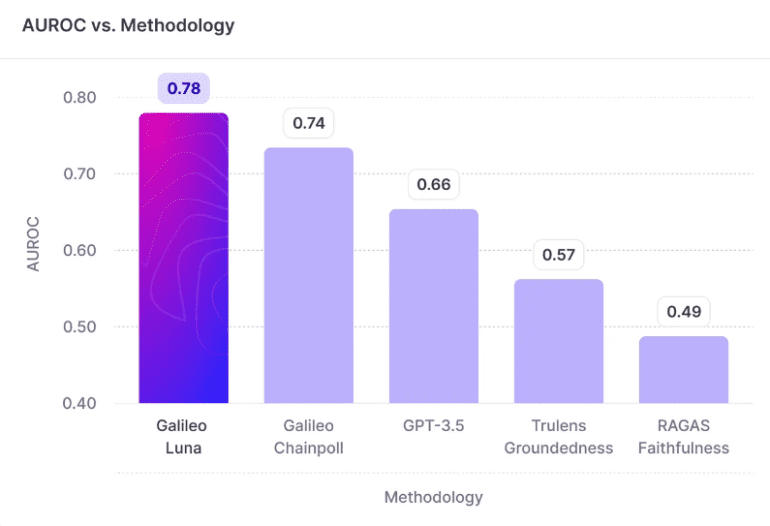

Vikram Chatterji, Galileo’s CEO, stresses that enterprises need to evaluate AI responses rapidly and comprehensively. Luna EFMs let organizations assess thousands of AI responses in near real time for issues such as hallucinations, toxicity, and security risks. Chatterji asserts that Luna sets new benchmarks for speed, accuracy, and cost efficiency, outperforming GPT-3.5 by significant margins.

In benchmark tests, Luna EFMs surpassed existing evaluation models by up to 20% in overall accuracy. Their evaluation compute costs are up to 30 times lower than those of GPT-3.5, and they deliver results in milliseconds. Luna EFMs can also be customized quickly to pinpoint specific issues in generative AI outputs.

Luna EFMs also explain their evaluations, giving users insight into why a response was flagged. This streamlines root-cause analysis and debugging.

Early adopters of Luna EFMs have validated their efficacy. Alex Klug, head of product, data science, and AI at HP Inc., emphasizes the significance of accurate model evaluation for developing reliable AI applications. Klug lauds Galileo for overcoming the formidable evaluation challenges faced by enterprise teams.

Luna EFMs are now available on the Galileo Project and Galileo Evaluate platforms, which are already used by Fortune 10 banks and Fortune 50 companies. Galileo expects the models to make AI evaluation more efficient, insightful, and accessible.

Conclusion:

Galileo’s introduction of Luna EFMs is a notable advance in AI evaluation, promising faster, cheaper, and more accurate assessment of LLM output. If the reported results hold up in production, enterprises will be able to deploy AI applications with greater confidence and reliability. As Luna EFMs gain traction, they could displace traditional evaluation methods such as human review, driving efficiency and cost savings across industries that rely on AI.