TL;DR:

- Carnegie Mellon University proposes GILL, an approach that integrates Large Language Models (LLMs) with image encoder and decoder models.

- GILL enables the generation of unique images by processing mixed image and text inputs.

- It maps the embedding spaces of text and image models to fuse them, resulting in coherent and readable outputs.

- GILL outperforms baseline generation models, particularly in tasks involving longer and more sophisticated language.

- The method combines LLMs with image models for image retrieval, new image production, and multimodal dialogue.

- GILL’s mapping network grounds LLMs to text-to-image generation models, leveraging powerful text representations for visually consistent outputs.

- The model retrieves images from datasets and decides whether to produce or obtain an image during inference.

- GILL’s computational efficiency eliminates the need to run the image generation model during training.

- GILL’s integration of LLMs and image models opens up new possibilities in the field of multimodal tasks.

Main AI News:

In the ever-evolving landscape of AI advancements, OpenAI’s GPT-4 has ushered in a new era of multimodality for Large Language Models (LLMs). Building upon its predecessor, GPT-3.5, which solely catered to textual inputs, the latest iteration, GPT-4, embraces both text and images as viable sources of input. Recognizing the immense potential of this integration, a team of dedicated researchers from Carnegie Mellon University has put forth an innovative approach known as Generating Images with Large Language Models (GILL). This pioneering method extends the boundaries of multimodal language models, enabling the generation of captivating and unique images.

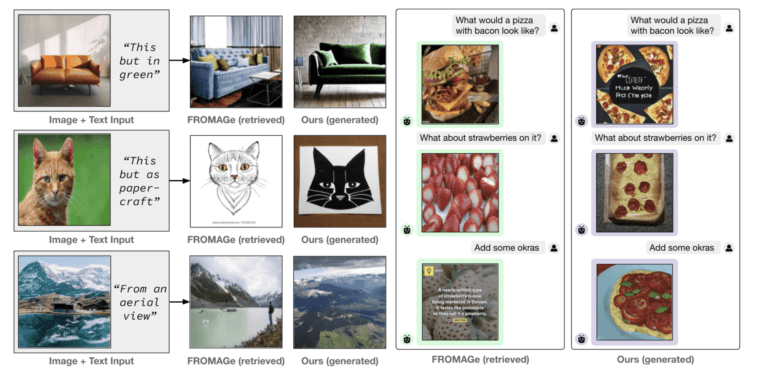

GILL introduces a groundbreaking framework that seamlessly processes inputs by combining images and text. By bridging the gap between distinct text encoders and image-generating models, GILL achieves remarkable feats. Unlike previous methodologies that required interleaved image-text data, GILL accomplishes this by fine-tuning a small set of parameters, leveraging image-caption pairings.

This integration of frozen text-only LLMs and trained image encoding and decoding models empower GILL to offer a plethora of multimodal capabilities, including image retrieval, novel image production, and interactive multimodal dialogue. The fusion of embedding spaces across modalities serves as the underlying foundation for GILL’s success, facilitating the creation of coherent and intelligible outputs.

At the heart of GILL lies an efficacious mapping network that grounds LLMs to text-to-image generation models, resulting in unparalleled performance in picture generation. This mapping network seamlessly translates hidden text representations into the visual models’ embedding space, harnessing the powerful text representations of LLMs to generate aesthetically consistent outputs.

This transformative approach not only enables the model to create new images but also equips it with the ability to retrieve images from a specified dataset. The decision of whether to generate or obtain an image is dynamically determined during inference, guided by a learned decision module conditioned on the LLM’s hidden representations. Moreover, GILL achieves this computational efficiency by eliminating the need to run the image generation model during training, making it a truly remarkable feat.

GILL surpasses baseline generation models, particularly when confronted with longer and more intricate language tasks. In the realm of text processing, including dialogue and discourse, GILL outshines the Stable Diffusion method, showcasing its prowess in handling extended-form text. Dialogue-conditioned image generation also witnesses a notable enhancement with GILL, outperforming non-LLM-based generation models. By leveraging multimodal context, GILL excels in generating images that seamlessly align with the accompanying text. Unlike conventional text-to-image models that solely process textual inputs, GILL deftly handles arbitrarily interleaved image-text inputs, further expanding its capabilities.

Conclusion:

The introduction of GILL, a fusion of Large Language Models (LLMs) and image encoder and decoder models, heralds a significant milestone in the market for multimodal AI solutions. GILL’s ability to seamlessly combine text and image inputs, generating coherent and visually appealing outputs, positions it as a powerful tool for various industries. Its superior performance in handling longer and more complex language tasks, along with its capacity for image retrieval and production, makes it a promising solution for applications ranging from content creation to interactive multimodal experiences. As GILL continues to push the boundaries of multimodal capabilities, businesses can leverage this technology to unlock new opportunities for engaging and immersive experiences for their customers.