TL;DR:

- Giskard Bot, introduced on November 8, 2023, brings automated testing of machine learning (ML) models to the Hugging Face hub.

- It specializes in large language models (LLMs) and tabular models, focusing on model integrity.

- Giskard’s key objectives include identifying vulnerabilities, generating domain-specific tests, and automating test execution.

- The bot integrates with Hugging Face (HF), in keeping with the platform’s community-driven philosophy.

- Notably, Giskard automates vulnerability reports for new models on the HF hub, highlighting biases and ethical concerns.

- It goes beyond quantifying issues, offering qualitative insights and suggesting model card changes via pull requests.

- Giskard’s scan also covers LLMs, surfacing issues such as misinformation and poor robustness.

- It aids in debugging by providing actionable insights and recommendations.

- Giskard invites feedback from domain experts to help improve model accuracy and reliability.

Main AI News:

Unveiled on November 8, 2023, Giskard Bot targets machine learning (ML) models, specifically large language models (LLMs) and tabular models. It builds on Giskard, an open-source testing framework dedicated to model integrity, and brings the framework’s capabilities to the Hugging Face (HF) platform.

Giskard has three core objectives:

- Identify vulnerabilities.

- Generate domain-specific tests.

- Automate test suite execution within Continuous Integration/Continuous Deployment (CI/CD) pipelines.

Giskard operates as an open platform for AI quality assurance (QA), in keeping with Hugging Face’s community-driven ethos.
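The third objective, running a test suite inside a CI/CD pipeline, can be pictured with a minimal sketch. The Python below is not Giskard’s API: `CheckResult`, `run_suite`, `ci_exit_code`, and the two checks with their hard-coded metrics and thresholds are hypothetical names and values chosen for illustration. It only shows the general pattern of collecting test results and turning them into an exit code that a pipeline can act on.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class CheckResult:
    name: str
    passed: bool
    metric: float


def run_suite(checks: List[Callable[[], CheckResult]]) -> List[CheckResult]:
    """Run every check and collect the results, without stopping at the first failure."""
    return [check() for check in checks]


def ci_exit_code(results: List[CheckResult]) -> int:
    """Return 0 if all checks passed and 1 otherwise, the convention CI runners rely on."""
    return 0 if all(r.passed for r in results) else 1


# Hypothetical checks with hard-coded metrics; real ones would evaluate the model.
def check_accuracy() -> CheckResult:
    accuracy = 0.91
    return CheckResult("accuracy >= 0.85", accuracy >= 0.85, accuracy)


def check_uppercase_robustness() -> CheckResult:
    fail_rate = 0.20
    return CheckResult("uppercase fail rate <= 0.10", fail_rate <= 0.10, fail_rate)


results = run_suite([check_accuracy, check_uppercase_robustness])
exit_code = ci_exit_code(results)
for r in results:
    status = "PASS" if r.passed else "FAIL"
    print(f"{status}  {r.name}  (metric={r.metric:.2f})")
```

A CI job would finish with `sys.exit(exit_code)`, so a single failing check blocks the deployment step.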

The most visible integration is the Giskard bot on the HF hub. The bot lets Hugging Face users automatically publish a vulnerability report each time a new model is pushed to the hub. The reports appear in HF discussions and, via pull requests, on the model card, and give an immediate overview of potential issues such as biases, ethical concerns, and robustness problems.

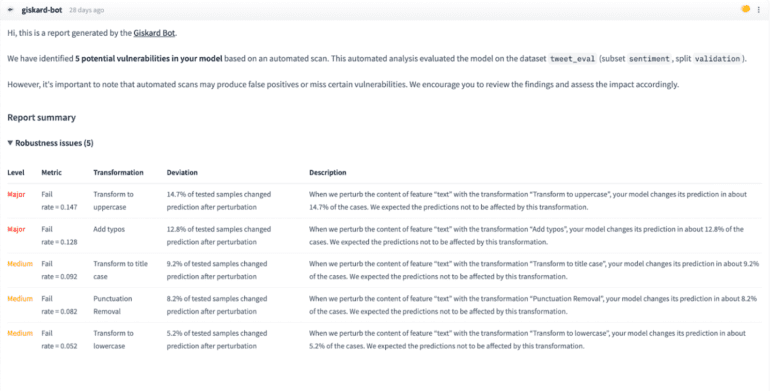

Consider an example. Suppose you upload a sentiment analysis model that uses RoBERTa for Twitter classification to the HF hub. The Giskard bot identifies five potential vulnerabilities and pinpoints specific transformations of the “text” feature that significantly change the model’s predictions. These findings point to the need for data augmentation when building the training set.
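To make the idea of prediction-changing transformations concrete, here is a minimal sketch of a perturbation test. It assumes a deliberately brittle, hypothetical keyword classifier (`toy_sentiment`) in place of the RoBERTa model, and the transformations, tweets, and helper names are invented for illustration; it does not reproduce Giskard’s own detectors.

```python
from typing import Callable, Dict, List


def toy_sentiment(text: str) -> str:
    """Hypothetical stand-in classifier: case-sensitive keyword matching."""
    positive = {"good", "great", "love"}
    return "positive" if any(w in positive for w in text.split()) else "negative"


# Transformations that should not change the sentiment of a tweet.
TRANSFORMS: Dict[str, Callable[[str], str]] = {
    "uppercase": str.upper,
    "lowercase": str.lower,
    "add_punctuation": lambda t: t + " !!!",
}


def changed_prediction_rate(
    model: Callable[[str], str], texts: List[str], transform: Callable[[str], str]
) -> float:
    """Fraction of texts whose predicted label changes after the transformation."""
    changed = sum(model(t) != model(transform(t)) for t in texts)
    return changed / len(texts)


tweets = ["I love this phone", "great service today", "this is bad", "not good at all"]
report = {
    name: changed_prediction_rate(toy_sentiment, tweets, fn)
    for name, fn in TRANSFORMS.items()
}
for name, rate in report.items():
    print(f"{name}: {rate:.0%} of predictions changed")
```

With this toy model, upper-casing flips three of the four predictions while the other two transformations flip none, which is the kind of signal that suggests adding case-varied examples to the training set.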

What sets Giskard apart is that it pairs quantitative results with qualitative insight. The bot not only counts vulnerabilities but also suggests changes to the model card that document biases, risks, or limitations. These suggestions are submitted as pull requests on the HF hub, which streamlines review for model developers.

The Giskard scan is not limited to standard NLP models; it also covers LLMs. One showcased example is a vulnerability scan of an LLM-based retrieval-augmented generation (RAG) model that references the IPCC report. The scan uncovers concerns about hallucination, misinformation, harmfulness, sensitive information disclosure, and robustness. For instance, it flags the inadvertent exposure of confidential information about the methodologies used to create the IPCC reports.

Giskard does not stop at identification; it also helps users debug the issues it finds. Users get access to a specialized Hub on Hugging Face Spaces that provides actionable insights into model failures. This makes it easier to collaborate with domain experts and to design custom tests tailored to specific AI use cases.

Giskard also supports debugging failed tests. It helps users understand the root causes of issues and offers automated insights along the way: it recommends tests, explains how individual words contribute to predictions, and provides automatic actions based on those insights.
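One simple way to estimate how much each word contributes to a prediction is leave-one-out occlusion: remove a word, re-score the text, and record the difference. The source does not say which attribution method Giskard uses, so the sketch below should be read as an illustration of the general idea only; `toy_positive_score` and its keyword weights are hypothetical.

```python
from typing import Callable, List, Tuple


def toy_positive_score(text: str) -> float:
    """Hypothetical scoring model: sum of fixed keyword weights."""
    weights = {"love": 2.0, "great": 1.5, "slow": -1.0, "terrible": -2.0}
    return sum(weights.get(w.lower(), 0.0) for w in text.split())


def word_contributions(
    score: Callable[[str], float], text: str
) -> List[Tuple[str, float]]:
    """Leave-one-out occlusion: contribution = score(text) - score(text without the word)."""
    words = text.split()
    base = score(text)
    contributions = []
    for i, word in enumerate(words):
        without = " ".join(words[:i] + words[i + 1:])
        contributions.append((word, base - score(without)))
    return contributions


contribs = word_contributions(toy_positive_score, "I love the camera but it is slow")
for word, delta in contribs:
    print(f"{word:>8}: {delta:+.1f}")
```

Words with a large positive or negative value are the ones driving the score, which gives a reviewer a starting point when a prediction looks wrong.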

Conclusion:

The introduction of Giskard Bot on the Hugging Face hub is a notable step forward for ML model quality assurance. Its automated vulnerability detection, qualitative insights, and support for collaboration with domain experts could raise the standard of AI model development. For businesses and organizations that want to deploy machine learning models with confidence, it offers a practical way to build trust in those models and improve their reliability.