TL;DR:

- Google Research and Tel Aviv University introduced AnyLens, an AI framework for image rendering.

- AnyLens combines text-to-image diffusion models with specialized lens geometry for precise visual control.

- It enables the generation of various visual effects, including fish-eye, panoramic views, and spherical texturing, from a single model.

- The framework fine-tunes latent diffusion models using per-pixel coordinate conditioning, allowing for versatile image manipulation.

- AnyLens is not limited to a fixed output resolution and adds metric tensor conditioning for finer control.

- Generated images are realistic, follow the target geometry, and show minimal artifacts in spherical panoramas.

- It gives creators more control over image synthesis, opening new possibilities for high-quality image production.

- Future work aims to address current limitations and explore more advanced conditioning techniques.

Main AI News:

Image generation has taken a notable step forward through a collaboration between Google Research and Tel Aviv University. The researchers have introduced an AI framework called AnyLens, which combines a text-to-image diffusion model with specialized lens geometry for image rendering.

The framework builds on large-scale diffusion models trained on paired text and image data. What sets AnyLens apart is its ability to incorporate diverse conditioning approaches, which gives finer visual control over the image generation process. These approaches range from explicit model conditioning to modifying pretrained architectures.

A key contribution of the framework is its use of original resolution information to achieve multi-resolution, shape-consistent image generation. Using this information, it can produce a range of visual effects, including fish-eye perspectives, panoramic views, and spherical texturing, all from a single diffusion model.

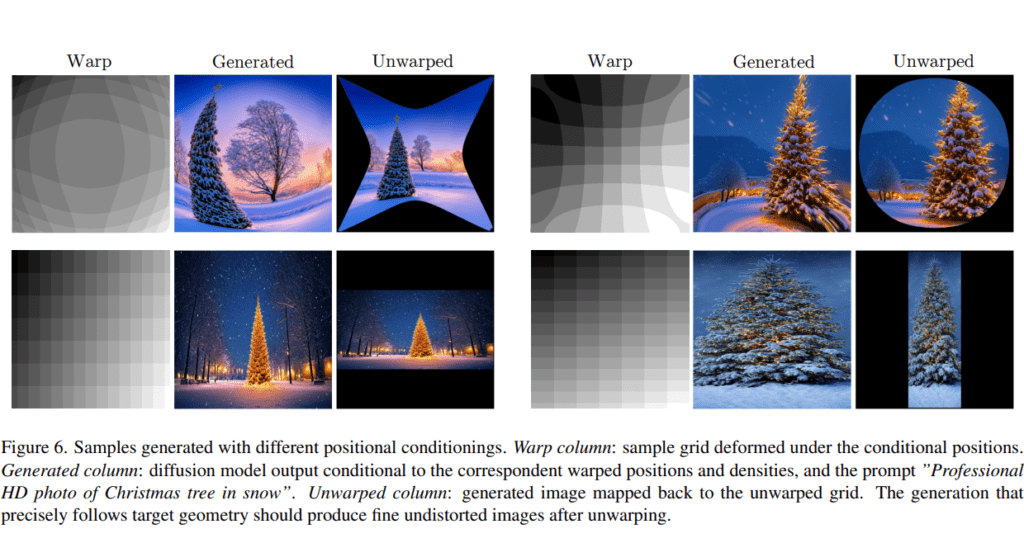

The core innovation is AnyLens’ ability to integrate local lens geometry into the text-to-image diffusion model. This lets the model replicate intricate optical effects and produce highly realistic images. Unlike traditional canvas transformations, which apply one transform to the whole image, the method conditions on per-pixel coordinates, so it supports a wide range of grid warps. This opens up new possibilities for panoramic scene generation and sphere texturing.
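To make the idea of a per-pixel coordinate map concrete, here is a minimal sketch in plain Python. It is not the researchers' implementation: the function names and the radial distortion formula `r -> r * (1 + strength * r^2)` are assumptions chosen only to illustrate how a grid warp assigns a warped coordinate to every pixel.

```python
def identity_coords(height, width):
    """Per-pixel (x, y) coordinates in [-1, 1] for an unwarped canvas."""
    grid = []
    for row in range(height):
        y = 2.0 * (row + 0.5) / height - 1.0
        grid.append([(2.0 * (col + 0.5) / width - 1.0, y) for col in range(width)])
    return grid


def fisheye_coords(height, width, strength=0.5):
    """Hypothetical fish-eye-like warp: scale each pixel's coordinate
    radially by (1 + strength * r^2), where r is its distance from the center.
    The formula is an illustrative assumption, not taken from the source."""
    warped = []
    for line in identity_coords(height, width):
        out = []
        for x, y in line:
            scale = 1.0 + strength * (x * x + y * y)
            out.append((x * scale, y * scale))
        warped.append(out)
    return warped


if __name__ == "__main__":
    # Each entry is the warped coordinate that would condition one pixel.
    for line in fisheye_coords(3, 3, strength=0.5):
        print(line)
```

In a conditioning setup of this kind, a map like the one returned by `fisheye_coords` would be supplied to the model alongside the text prompt, one coordinate pair per pixel, rather than applying a single transform to the finished image.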

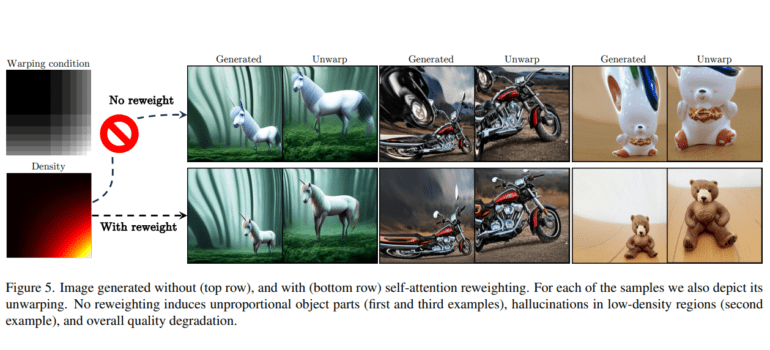

At its core, the framework uses per-pixel coordinate conditioning to fine-tune a pre-trained latent diffusion model. By manipulating curvature properties, it can produce diverse effects such as fish-eye and panoramic views. It is also not limited to a fixed output resolution, and it adds metric tensor conditioning for further control over the process. Together, these address challenges such as large image generation and self-attention scale adjustments in diffusion models.
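The article does not say how the metric tensor is computed. One standard way to obtain a metric from a warp is the pullback metric `g = JᵀJ`, where `J` is the Jacobian of the warp at a pixel. The sketch below assumes that definition and estimates `J` with central differences; `metric_tensor` and `radial_warp` are hypothetical names used only for illustration.

```python
def radial_warp(x, y, strength=0.5):
    """Hypothetical warp (an assumption): radial scaling by 1 + strength * r^2."""
    scale = 1.0 + strength * (x * x + y * y)
    return x * scale, y * scale


def metric_tensor(warp, x, y, eps=1e-5):
    """Return (g11, g12, g22) of g = J^T J at (x, y).

    J = [[du/dx, du/dy], [dv/dx, dv/dy]] is the Jacobian of warp(x, y) -> (u, v),
    estimated here with central finite differences.
    """
    u_xp, v_xp = warp(x + eps, y)
    u_xm, v_xm = warp(x - eps, y)
    u_yp, v_yp = warp(x, y + eps)
    u_ym, v_ym = warp(x, y - eps)

    du_dx = (u_xp - u_xm) / (2 * eps)
    dv_dx = (v_xp - v_xm) / (2 * eps)
    du_dy = (u_yp - u_ym) / (2 * eps)
    dv_dy = (v_yp - v_ym) / (2 * eps)

    g11 = du_dx * du_dx + dv_dx * dv_dx   # squared stretch along x
    g12 = du_dx * du_dy + dv_dx * dv_dy   # shear between x and y
    g22 = du_dy * du_dy + dv_dy * dv_dy   # squared stretch along y
    return g11, g12, g22


if __name__ == "__main__":
    # Stretch is larger away from the center for this radial warp.
    print(metric_tensor(radial_warp, 0.0, 0.0))
    print(metric_tensor(radial_warp, 0.8, 0.0))
```

Under this definition, an unwarped canvas has the identity metric everywhere, and the metric's deviation from the identity describes how much the warp locally stretches or shears the image at each pixel.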

In the reported results, AnyLens integrates a text-to-image diffusion model with specific lens geometry and produces fish-eye, panoramic, and spherical texturing effects from a single model. Control over curvature properties and rendering geometry yields realistic, nuanced images. Trained on a large textually annotated dataset together with per-pixel warping fields, the method produces results that align with the target geometry. It also simplifies the creation of spherical panoramas with realistic proportions and minimal artifacts.

In conclusion, by incorporating various lens geometries into image rendering, the framework gives fine-grained control over curvature properties and visual effects. Per-pixel coordinate and metric conditioning make it possible to manipulate the rendering geometry, producing highly realistic images with precise curvature properties. The framework sits at the intersection of creativity and technology and is a valuable tool for producing high-quality images.

Looking ahead, the researchers plan to address the method's limitations by exploring more advanced conditioning techniques. Their goals include achieving results akin to specialized lenses that capture distinct scenes, which they expect will further broaden the range of images the model can generate.

Source: Marktechpost Media Inc.

Conclusion:

The introduction of AnyLens represents a significant step in AI-driven image rendering. It gives creators precise control over visual effects and streamlines the image generation process. For professionals, it could become a versatile tool for producing high-quality images and expanding creative possibilities.