TL;DR:

- SynCLR is an AI breakthrough that learns visual representations exclusively from synthetic images and captions, bypassing the need for real-world data.

- It aims to address the challenges of representation learning, where data quantity, quality, and diversity are crucial.

- The method leverages generative models to curate large-scale datasets, reducing the financial burden associated with real data collection.

- By generating images based on captions, SynCLR excels at achieving granularity at the caption level, surpassing traditional methods.

- Results show SynCLR’s superior performance in comparison to existing models, particularly in fine-grained classification and semantic segmentation tasks.

- The system’s three stages involve synthesizing picture captions, creating synthetic images and captions, and training models for visual representations.

- Future research opportunities include improving caption sets, refining sample ratios, and exploring high-resolution training phases.

Main AI News:

In a groundbreaking collaboration, Google researchers and the Massachusetts Institute of Technology’s Computer Science and Artificial Intelligence Laboratory (MIT CSAIL) have introduced SynCLR, an innovative AI approach poised to revolutionize the field of visual representation learning. SynCLR redefines the boundaries of artificial intelligence, offering a remarkable breakthrough by learning visual representations exclusively from synthetic images and captions, all without the need for any real-world data.

In the realm of representation learning, the holy grail lies in harnessing raw and often unlabeled data. The effectiveness of any model’s representation hinges on the quantity, quality, and diversity of the data it encounters. The model essentially mirrors the collective intelligence encoded within the data. As the saying goes, “garbage in, garbage out.” It’s no surprise that the most powerful visual representation learning algorithms of today rely heavily on massive real-world datasets.

However, the process of collecting real data is not without its challenges. While gathering copious amounts of unfiltered data may be feasible due to its lower cost, introducing uncurated data at scale often results in diminishing returns and poor scaling behavior for self-supervised representation learning. On the other hand, collecting curated data on a smaller scale limits the applicability of the resulting models to specific tasks.

To alleviate the financial burden associated with large-scale real-world data collection, Google Research and MIT CSAIL embarked on a quest to determine whether state-of-the-art visual representations could be trained using synthetic data derived from commercially available generative models. This novel approach, aptly termed “Learning from Models,” stands apart from traditional methods that learn directly from data. The team leverages the newfound control offered by models’ latent variables, conditioning variables, and hyperparameters to curate data effectively. This approach offers a plethora of benefits, including reduced storage and sharing complexities compared to unwieldy raw data. Additionally, models can generate an endless stream of data samples, albeit with a limited range of variability.

In their groundbreaking study, the researchers delve into the intricacies of visual classes by employing generative models. Consider, for instance, the four images corresponding to the commands: “A cute golden retriever sits in a house made of sushi” and “A golden retriever, wearing sunglasses and a beach hat, rides a bike.” Traditional self-supervised methods, such as SimCLR, treat each image as a separate class without explicitly considering the underlying semantics. In contrast, supervised learning algorithms like SupCE group all these images under a single class, like “golden retriever.”

Collecting multiple images described by a given caption is a formidable challenge, particularly when scaling up the number of captions. However, this level of granularity is intrinsic to text-to-image diffusion models. With the same caption as a training set and varying noise inputs, these models can generate numerous images that precisely match the caption.

The research findings reveal that compared to SimCLR and supervised training, SynCLR excels at achieving granularity at the caption level. Furthermore, its ability to easily extend visual class descriptions is a significant advantage. Through online class (or data) augmentation, SynCLR has the potential to scale up to an unlimited number of classes, a feat not achievable with fixed-class datasets like ImageNet-1k/21k.

The proposed SynCLR system consists of three stages:

- Synthesizing a substantial collection of picture captions marks the initial phase. The team has developed a scalable method that harnesses the in-context learning capabilities of large language models (LLMs) through word-to-caption translation examples.

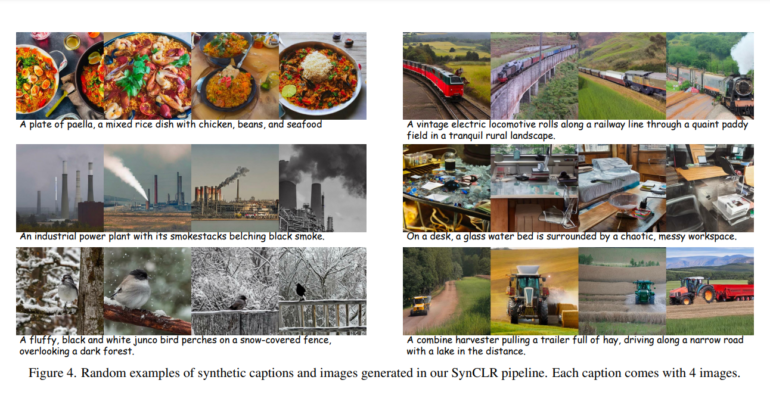

- The next step involves creating a multitude of synthetic images and captions using a text-to-image diffusion model, resulting in a dataset comprising a staggering 600 million photos.

- Finally, models for visual representations are trained using masked image modeling and multi-positive contrastive learning.

The researchers conducted a comprehensive comparison with OpenAI’s CLIP, evaluating top-1 linear probing accuracy on ImageNet-1K. The results speak volumes, with the ViT-B model achieving 80.7% accuracy and the ViT-L model reaching an impressive 83.0%, both trained with SynCLR pre-training. In fine-grained classification tasks, SynCLR’s performance rivals that of DINO v2 models derived from a pre-trained ViT-g model, surpassing CLIP for ViT-B by 3.3% and ViT-L by 1.5%. Furthermore, in the realm of semantic segmentation on ADE20k, SynCLR outperforms MAE pre-trained on ImageNet by 6.2 and 4.1 in mIoU for ViT-B and ViT-L, respectively, under the same setup. This highlights SynCLR’s remarkable adaptability to dense prediction tasks, akin to DINO v2, all without the need for high-resolution training, a distinct advantage that sets it apart.

The research team’s forward-thinking approach underscores numerous avenues for enhancing caption sets. Suggestions include leveraging more sophisticated LLMs, refining sample ratios across diverse concepts, and expanding the library of in-context examples. Additionally, incorporating high-resolution training phases, intermediate IN-21k fine-tuning stages, SwiGLU, and LayerScale integration can lead to architectural improvements. However, these recommendations remain subjects for future research, given the limitations of resources and the paper’s primary focus on achieving groundbreaking metrics.

Conclusion:

SynCLR presents a groundbreaking approach to visual representation learning, with significant implications for the AI market. By tapping into synthetic data and leveraging generative models, it addresses the limitations of traditional methods, offering scalability and improved performance. This innovation has the potential to reshape the landscape of AI applications, enhancing capabilities in image recognition and understanding. The impressive results achieved by SynCLR position it as a game-changer in the field, fostering new opportunities for AI-driven solutions across industries.