TL;DR:

- Google Cloud has launched A3 supercomputer virtual machines for Large Language Models (LLMs).

- The A3 virtual machines are powered by Nvidia’s H100 GPUs and offer 2 TB of host memory.

- They provide 3.6 TB/s of bisectional bandwidth between GPUs through NVLink 4.0 and NVSwitch.

- Fourth-generation Intel Xeon Scalable processors handle host-side management tasks.

- The A3 supercomputers deliver up to 26 exaFLOPS of AI performance, which Google says reduces training time and costs for machine learning models.

- Google’s GPU-to-GPU interface allows data transfers at 200 Gbps, ten times the network bandwidth of the previous-generation A2 VMs.

- Customers can sign up for a preview waitlist to access the A3 virtual machines.

- Google plans to integrate the new cloud offerings with its software and cloud products.

- Other companies, such as Microsoft and IBM, are also developing their own AI supercomputers.

Main AI News:

At Google I/O 2023, Google announced its A3 supercomputer virtual machines. They are designed specifically for the resource-intensive demands of training and serving Large Language Models (LLMs).

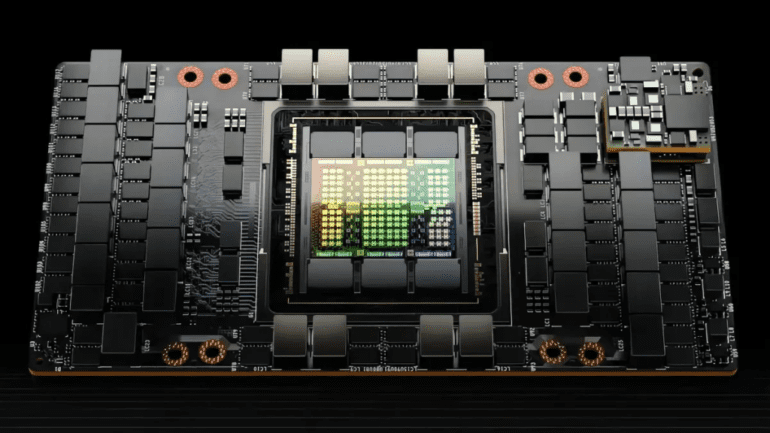

The A3 virtual machines are equipped with Nvidia’s H100 GPUs, which are based on the Hopper architecture. Each VM offers 2 TB of host memory and 3.6 TB/s of bisectional bandwidth between its GPUs via NVLink 4.0 and NVSwitch. Fourth-generation Intel Xeon Scalable processors handle host-side management tasks, leaving the GPUs free for model computation.

Ian Buck, the Vice President of Hyperscale and High Performance Computing at NVIDIA, expressed excitement about the collaboration, stating, “Google Cloud’s A3 VMs, powered by next-generation NVIDIA H100 GPUs, will accelerate training and serving of generative AI applications. We’re proud to continue our work with Google Cloud to help transform enterprises worldwide with purpose-built AI infrastructure.”

Google’s A3 supercomputers deliver up to 26 exaFLOPS of AI performance. According to Google, this level of compute significantly reduces the time and cost required to train machine learning models. A3 also introduces a GPU-to-GPU interface that moves data at 200 Gbps, giving ten times the network bandwidth of the previous-generation A2 VMs.

To access the A3 virtual machines, interested customers need to sign up for a preview waitlist. Google plans to integrate the new offering with its existing software and cloud products to provide a comprehensive AI infrastructure solution.

While Google is at the forefront of supercomputer virtual machine development, other industry leaders are also investing in this technology. Microsoft, in partnership with Nvidia, unveiled its own Azure-powered AI supercomputer last year. Similarly, IBM is working on its own AI supercomputer design, called Vela, specifically targeting government agencies.

As the competition in the field intensifies, these advancements in supercomputing technology pave the way for unprecedented breakthroughs in AI research and applications. Google’s A3 supercomputers demonstrate the company’s commitment to pushing the boundaries of innovation and solidify its position as a key player in the AI infrastructure landscape.

Conclusion:

The introduction of Google Cloud’s A3 supercomputer virtual machines for Large Language Models (LLMs) marks a significant advance in the AI infrastructure market. By combining Nvidia H100 GPUs with Intel Xeon Scalable processors and high-bandwidth GPU interconnects, A3 could substantially speed up the training of large AI models. With reduced training time and costs, businesses can accelerate their AI development and innovation.

Furthermore, the fast GPU-to-GPU data transfers and planned integration with existing Google Cloud products offer a comprehensive solution for organizations seeking to adopt AI technologies. As Google and other industry players continue to push the boundaries of supercomputing, the market can anticipate more advances and stronger competition. Together, these should drive the growth and accessibility of AI infrastructure for businesses across many sectors.