TL;DR:

- Robotics seeks the holy grail of versatility in an era of specialization.

- Robot learning emerges as the solution, with various approaches in development.

- Google DeepMind collaborates with 33 research institutes to create Open X-Embodiment, a robotics counterpart to ImageNet.

- This massive shared dataset aims to train generalist models for controlling diverse robots and tasks.

- Open X-Embodiment features 500+ skills and 150,000 tasks from 22 robot types.

- Data is open-sourced, promoting collaboration and accelerating research.

Main AI News:

In robotics, versatility has long been the holy grail. In an age where “general purpose” is a buzzword, it can be hard for those outside the field to gauge what robotic systems can actually do today. The reality is that most contemporary robots excel at one task or, at best, a handful of specific tasks.

This truth holds across the spectrum, from humble robot vacuums to cutting-edge industrial setups. The question then becomes: how do we transform these specialized machines into versatile, general-purpose robots? The journey towards multipurpose robotics is bound to be a series of milestones.

The solution, it seems, lies in the realm of robot learning. Visit any robotics research laboratory today, and you’ll encounter teams dedicated to addressing this very challenge. Startups and corporations are also joining the effort, exemplified by companies like Viam and Intrinsic, which aim to simplify robot programming.

The available solutions currently span a wide range, and it is increasingly evident that no single silver bullet will solve this problem. Instead, building more complex and capable robotic systems will likely require a combination of approaches. Central to most of them is the need for a large, shared dataset.

Google DeepMind’s robotics team recently unveiled a collaboration with 33 research institutes that produced a large shared dataset called Open X-Embodiment. The effort draws parallels to ImageNet, a database of more than 14 million images established in 2009, which played a pivotal role in advancing computer vision research.

DeepMind researchers Quan Vuong and Pannag Sanketi emphasize the significance of Open X-Embodiment, stating, “Just as ImageNet propelled computer vision research, we believe Open X-Embodiment can do the same to advance robotics.” They recognize that building a diverse dataset of robot demonstrations is a crucial step in training a versatile model capable of controlling various types of robots, comprehending diverse instructions, performing rudimentary reasoning for complex tasks, and generalizing effectively.

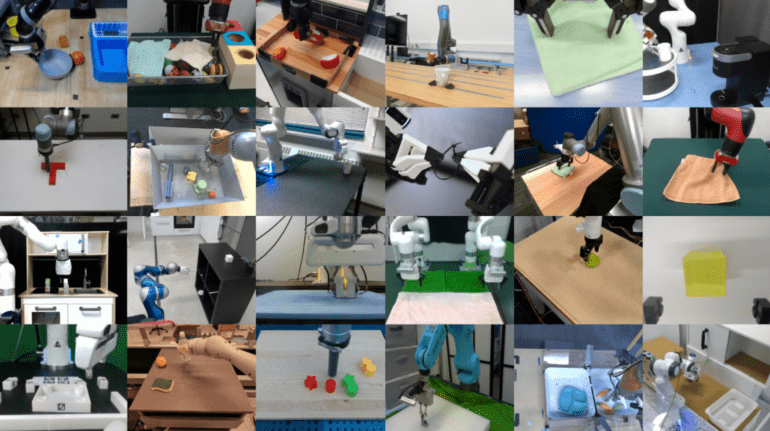

A task of this scale is too large to entrust to a single research lab. The Open X-Embodiment dataset spans more than 500 skills and 150,000 tasks sourced from 22 different robot types. As the “Open” in its name suggests, the creators are committed to making the data accessible to the broader research community.

In their own words, the DeepMind team asserts, “We hope that open sourcing the data and providing safe but limited models will reduce barriers and accelerate research. The future of robotics relies on enabling robots to learn from each other and, most importantly, allowing researchers to learn from one another.” This collaborative endeavor holds the promise of reshaping the landscape of robotics, propelling it toward a more versatile and interconnected future.

Conclusion:

Google DeepMind’s Open X-Embodiment initiative, akin to ImageNet for robotics, represents a pivotal step in advancing the field. By fostering collaboration and providing a massive shared dataset, it promises to accelerate research and drive the future of versatile, interconnected robotics. This development holds significant implications for the robotics market, potentially ushering in a new era of innovation and capabilities in robotic systems. Businesses in this space should closely monitor these advancements to stay at the forefront of the evolving landscape.