TL;DR:

- 2024 marks a significant year for AI and robotics convergence.

- Google’s DeepMind Robotics researchers are pioneering AI-powered robotics.

- AutoRT utilizes Visual Language Models for situational awareness and robot task optimization.

- Large Language Models (LLMs) enable robots to understand natural language commands.

- AutoRT orchestrates up to 20 robots simultaneously, collecting invaluable data.

- RT-Trajectory overlays robotic arm sketches on videos, enhancing learning.

- RT-Trajectory achieves a 63% success rate in testing, compared with 29% for RT-2.

- These innovations unlock untapped potential and knowledge in robot datasets.

Main AI News:

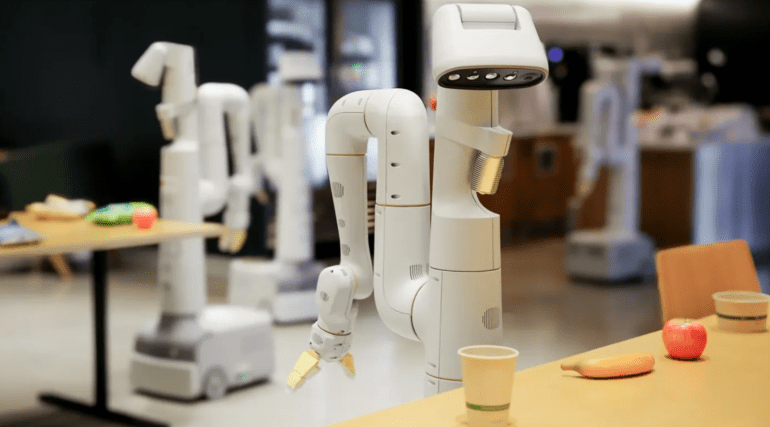

As 2024 begins, the convergence of generative AI and large foundation models with robotics promises a transformative era. This intersection holds potential across a range of applications, from robot learning to product design. Among the teams pursuing it are Google’s DeepMind Robotics researchers, who are exploring what is possible in this domain. In a recent blog post, the team describes ongoing research aimed at giving robots a deeper understanding of what humans want from them.

Historically, robots have specialized in performing a single task repeatedly throughout their operational lifespan. These single-purpose robots excel in their designated roles, but they struggle when faced with unforeseen changes or errors in their operating environment.

Enter AutoRT, a system designed to harness large foundation models for several purposes. It starts by using a Visual Language Model (VLM) to build situational awareness. AutoRT can manage a fleet of robots working together, each equipped with cameras to survey its surroundings and identify the objects in its environment.

In tandem, a large language model (LLM) proposes tasks that the hardware, including its end effector, can accomplish. LLMs are widely regarded as key to enabling robots to understand natural language commands, which reduces the reliance on rigid, labor-intensive programming.
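The division of labor described above (a VLM that lists what is in view, an LLM that proposes tasks, and a check against what the hardware can do) can be sketched in a few lines. This is a minimal illustration, not DeepMind's implementation: `describe_scene` and `propose_tasks` are hypothetical stand-ins for real model calls, and the `Robot` class, the verb list, and the frame format are assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Robot:
    name: str
    capabilities: set  # verbs this robot's end effector supports (assumed format)


def describe_scene(camera_frame):
    """Hypothetical stand-in for a VLM call: list the objects in the frame."""
    # A real system would send the camera image to a vision-language model.
    return list(camera_frame["objects"])


def propose_tasks(objects):
    """Hypothetical stand-in for an LLM call: propose (verb, object) tasks."""
    verbs = ["pick up", "wipe", "open"]
    return [(verb, obj) for obj in objects for verb in verbs]


def feasible_tasks(robot, tasks):
    """Keep only the tasks whose verb the robot's hardware can perform."""
    return [task for task in tasks if task[0] in robot.capabilities]


def plan(robot, camera_frame):
    """Scene description, then task proposal, then a hardware feasibility filter."""
    objects = describe_scene(camera_frame)
    return feasible_tasks(robot, propose_tasks(objects))


robot = Robot("arm-1", {"pick up", "wipe"})
frame = {"objects": ["sponge", "cup"]}
print(plan(robot, frame))
```

Here the robot cannot open things, so every "open" proposal is dropped and four tasks remain. Keeping the feasibility filter separate from the proposal step means the same proposals could be reused across robots with different end effectors.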

The system has been tested over the past seven months. AutoRT can coordinate up to 20 robots at once, and 52 distinct robots took part in total. Over the testing period, DeepMind collected a dataset of 77,000 trials covering more than 6,000 distinct tasks.

In addition to AutoRT, the DeepMind Robotics team introduced RT-Trajectory, an approach that uses video input for robot learning. Several research groups have explored using YouTube videos to train robots at scale; RT-Trajectory adds to this by overlaying a two-dimensional sketch of the robotic arm in action onto the video.
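To make the overlay idea concrete, here is a minimal sketch of drawing a 2D path onto an RGB frame. It is an illustration only: the function name `overlay_trajectory`, the waypoint format, and the use of nested lists (chosen to avoid dependencies) are assumptions, and nothing here reflects how RT-Trajectory renders its sketches.

```python
def overlay_trajectory(frame, waypoints, color=(255, 0, 0), samples_per_segment=50):
    """Return a copy of an RGB frame with a polyline drawn through the waypoints.

    frame: list of rows, each row a list of (r, g, b) tuples
    waypoints: list of (x, y) pixel coordinates along the arm's path
    """
    height, width = len(frame), len(frame[0])
    out = [[tuple(pixel) for pixel in row] for row in frame]  # leave the input intact
    for (x0, y0), (x1, y1) in zip(waypoints, waypoints[1:]):
        # Sample points along each straight segment and color the nearest pixel.
        for i in range(samples_per_segment + 1):
            t = i / samples_per_segment
            x = round(x0 + t * (x1 - x0))
            y = round(y0 + t * (y1 - y0))
            if 0 <= x < width and 0 <= y < height:
                out[y][x] = color
    return out


# A blank 64x64 frame stands in for one video frame.
frame = [[(0, 0, 0)] * 64 for _ in range(64)]
sketch = overlay_trajectory(frame, [(5, 50), (30, 20), (58, 40)])
```

The result is still an ordinary RGB image, so a model that consumes images can read the path without any extra input channel.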

The team notes that these trajectories, presented as RGB images, give the model low-level visual cues as it learns its robot-control policies. In testing on 41 distinct tasks, RT-Trajectory achieved a 63% success rate, more than double the 29% achieved by its predecessor, RT-2.
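As a quick check on the comparison, the two reported success rates can be expressed as a ratio and as a gap in percentage points. The variable names are illustrative; the only inputs are the 63% and 29% figures quoted above.

```python
rt_trajectory_success = 63  # percent, as reported
rt2_success = 29            # percent, as reported

ratio = rt_trajectory_success / rt2_success
gain_points = rt_trajectory_success - rt2_success

print(f"{ratio:.2f}x, +{gain_points} percentage points")
# prints "2.17x, +34 percentage points"
```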

DeepMind emphasizes that RT-Trajectory taps into the robotic-motion information that is present in all robot datasets but has so far been underutilized. The approach moves closer to robots that can handle novel situations accurately and efficiently, and it also unlocks knowledge already contained in existing datasets. The outlook for robotics and AI-driven automation in business appears strong.

Conclusion:

Google’s AutoRT and RT-Trajectory mark a notable moment for the robotics market. These advances could make robots more efficient and adaptable, better able to understand human commands and carry out complex tasks. That is a step toward broader adoption of robotics across industries, giving businesses a way to streamline operations and raise productivity through AI-driven automation.