TL;DR:

- Google introduces StyleDrop, an AI method for synthesizing images that faithfully follow specific visual styles.

- StyleDrop lets users transfer the style of a reference image to new images while preserving the characteristics that define that style.

- Built on Muse, Google’s generative vision transformer, StyleDrop trains only a small fraction of the model (less than 1% of its parameters) and still delivers impressive results.

- It proves invaluable for brands to develop their unique visual styles and prototype ideas efficiently.

- StyleDrop’s image generation process relies on text-based prompts and accurately captures the essence of desired styles.

- It offers a remarkably quick generation process, taking only three minutes.

- Extensive studies showcase StyleDrop’s superiority in faithfully transferring styles compared to other methods.

- Limitations include the need for further exploration of diverse visual styles and responsible use of the technology.

Main AI News:

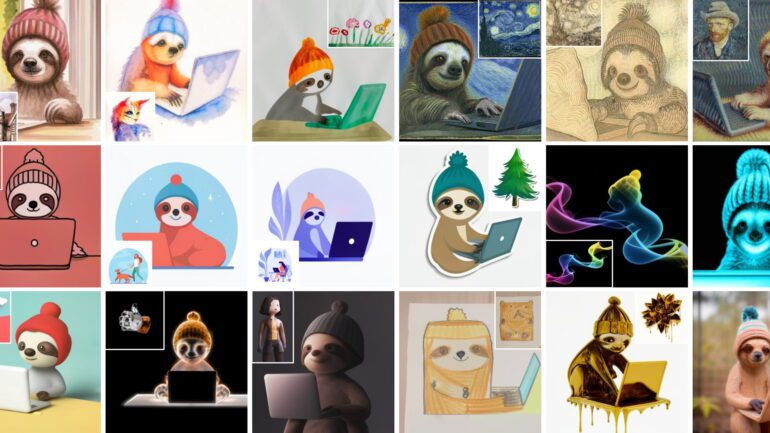

A team of Google researchers has introduced StyleDrop, a method built on Muse, Google’s fast text-to-image model. It lets users generate images that faithfully embody a specific visual style, capturing even intricate details and nuances. Starting from a reference image in the desired style, users can transfer that style to new images while preserving the characteristics that define it. With StyleDrop, it becomes possible to transform a children’s drawing into a stylized logo or character, opening up a wide range of creative possibilities.

Driving the capabilities of StyleDrop is Muse’s generative vision transformer, which is fine-tuned using a combination of user feedback, generated images, and CLIP score. Remarkably, the method achieves strong results while training very few parameters, less than 1% of the model’s total. Through iterative training, StyleDrop progressively improves the quality of the generated images, delivering impressive results within minutes.
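To give a sense of what "less than 1% of the total model parameters" can mean in practice, here is a minimal sketch that counts the weights in a set of small bottleneck adapters attached to a frozen backbone. It assumes an adapter-style setup; the layer width, bottleneck size, layer count, and backbone size are hypothetical values chosen for illustration and are not figures reported for Muse or StyleDrop.

```python
def adapter_param_count(d_model: int, bottleneck: int) -> int:
    """Parameters in one bottleneck adapter: down- and up-projection plus biases."""
    down = d_model * bottleneck + bottleneck  # weights + bias of the down-projection
    up = bottleneck * d_model + d_model       # weights + bias of the up-projection
    return down + up


def trainable_fraction(frozen_params: int, trainable_params: int) -> float:
    """Share of all parameters that are actually updated during fine-tuning."""
    total = frozen_params + trainable_params
    if total <= 0:
        raise ValueError("model must have at least one parameter")
    return trainable_params / total


# Hypothetical sizes, chosen only for illustration.
D_MODEL = 1024
BOTTLENECK = 16
NUM_LAYERS = 24
FROZEN_BACKBONE_PARAMS = 1_000_000_000

adapter_total = NUM_LAYERS * adapter_param_count(D_MODEL, BOTTLENECK)
fraction = trainable_fraction(FROZEN_BACKBONE_PARAMS, adapter_total)
print(f"trainable parameters: {adapter_total:,} ({fraction:.4%} of the model)")
```

Under these assumed sizes, the adapters add fewer than a million weights to a billion-parameter backbone, which is why this kind of fine-tuning can be fast and cheap to store per style.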

For brands seeking to cultivate their unique visual style, StyleDrop could prove to be an invaluable tool. Creative teams and designers can efficiently prototype ideas in their preferred style, making it a strong asset for brand development. Extensive studies have evaluated StyleDrop’s performance against other methods, including DreamBooth and Textual Inversion on Imagen or Stable Diffusion. The results consistently favor StyleDrop, which produces high-quality images that faithfully adhere to the user-specified style.

StyleDrop’s image generation process centers around the text-based prompts provided by users. By appending a natural language style descriptor during training and generation, StyleDrop accurately captures the essence of the desired style. This capability enables users to train the neural network with their brand assets, seamlessly integrating their unique visual identity into the generated images.
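The descriptor idea can be sketched in a few lines. The helper below is hypothetical and is not part of any released StyleDrop code; it assumes a simple "<content> in <descriptor> style" template and shows the same descriptor being appended to the training prompt and to later generation prompts.

```python
def with_style_descriptor(content_prompt: str, style_descriptor: str) -> str:
    """Append a natural-language style descriptor to a content prompt."""
    content = content_prompt.strip().rstrip(".")
    style = style_descriptor.strip()
    if not content or not style:
        raise ValueError("both the content prompt and the style descriptor are required")
    return f"{content} in {style} style"


# Hypothetical descriptor for a single reference image.
STYLE = "watercolor painting"

# Prompt paired with the reference image during fine-tuning.
training_prompt = with_style_descriptor("A house", STYLE)

# Prompts used afterwards to generate new subjects in the same style.
generation_prompts = [
    with_style_descriptor(subject, STYLE)
    for subject in ["A cat", "A city skyline"]
]

print(training_prompt)
for prompt in generation_prompts:
    print(prompt)
```

Keeping the descriptor identical across training and generation is the point of the sketch: the text gives the model a consistent handle for the style, separate from the subject of each image.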

One of StyleDrop’s standout features is its remarkably efficient generation process, typically taking a mere three minutes. This swift turnaround time empowers users to explore a multitude of creative possibilities and experiment with different styles effortlessly. However, it’s important to note that while StyleDrop showcases the immense potential for brand development, the application has not yet been released to the public.

Moreover, experiments conducted to evaluate StyleDrop’s performance provide compelling evidence of its capabilities and superiority over existing methods. These experiments encompass a wide variety of styles and showcase StyleDrop’s ability to faithfully capture the nuances of texture, shading, and structure across diverse visual styles. The quantitative results, measured by CLIP scores for style consistency and textual alignment, further reinforce the effectiveness of StyleDrop in transferring styles faithfully.
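CLIP-based scores of this kind are typically cosine similarities between embeddings. The sketch below assumes the embeddings have already been computed by a CLIP image and text encoder; the three short vectors are toy placeholders rather than real CLIP outputs, and the function names are illustrative.

```python
import math
from typing import Dict, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two embedding vectors of equal length."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("embeddings must be non-zero")
    return dot / (norm_a * norm_b)


def style_and_text_scores(
    generated_image_emb: Sequence[float],
    reference_image_emb: Sequence[float],
    prompt_text_emb: Sequence[float],
) -> Dict[str, float]:
    """Style score: generated vs. reference image. Text score: generated image vs. prompt."""
    return {
        "style": cosine_similarity(generated_image_emb, reference_image_emb),
        "text": cosine_similarity(generated_image_emb, prompt_text_emb),
    }


# Toy placeholder embeddings; real ones would come from a CLIP encoder.
generated = [0.6, 0.8, 0.0]
reference = [0.6, 0.8, 0.0]
prompt = [0.0, 1.0, 0.0]

scores = style_and_text_scores(generated, reference, prompt)
print(scores)
```

A higher style score suggests the generated image sits close to the reference in embedding space, while a higher text score suggests it still depicts what the prompt asked for; a method has to balance the two rather than maximize either alone.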

However, it is crucial to acknowledge the limitations of StyleDrop. While the presented results are impressive, visual styles are diverse and warrant further exploration. Future studies could delve into a more comprehensive examination of various visual styles, including formal attributes, media, history, and art style. Additionally, careful consideration should be given to the societal impact of StyleDrop, particularly regarding the responsible use of the technology and the potential for unauthorized copying of individual artists’ styles.

Conclusion:

The introduction of StyleDrop by Google represents a significant step forward in AI-based visual style synthesis. With its ability to faithfully transfer styles, generate high-quality results swiftly, and assist brands in developing their visual identities, StyleDrop has the potential to reshape how creative teams prototype visual work. Although it has not yet been released to the public, it promises creative individuals an accessible way to explore new visual ideas. However, further research is needed to expand the range of visual styles and address potential ethical considerations.