TL;DR:

- Google researchers introduce a new auditing scheme for differentially private machine learning systems.

- The approach focuses on assessing privacy guarantees with just a single training run.

- It emphasizes the connection between differential privacy (DP) and statistical generalization.

- DP bounds how much any single individual’s data can affect outcomes, providing a quantifiable privacy guarantee.

- The method reduces computational cost compared to traditional privacy audits, which typically require many training runs.

- It applies to a broad range of differentially private algorithms and accommodates practical considerations such as in-distribution examples.

- The scheme’s versatility and efficiency mark a significant advancement in privacy auditing.

Main AI News:

Differential privacy (DP) is a cornerstone technique in machine learning for protecting the privacy of individuals whose data is used to train predictive models. It is a rigorous mathematical framework guaranteeing that a model’s outputs change very little whether or not any one individual is present in the input data. Recent work has produced an auditing scheme that offers a versatile and efficient way to evaluate the privacy assurances of these models while making minimal assumptions about the underlying algorithm.
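
For readers who want the formal statement, the standard definition of DP can be written as below. The symbols are notation introduced here for illustration and do not appear in the article: $M$ is the randomized training algorithm, $x$ and $x'$ are any two datasets that differ in one individual’s data, $S$ is any set of possible outputs, and $\varepsilon$ and $\delta$ are the privacy parameters.

$$
\Pr[M(x) \in S] \;\le\; e^{\varepsilon} \cdot \Pr[M(x') \in S] + \delta
$$

Smaller values of $\varepsilon$ and $\delta$ mean the two output distributions are harder to tell apart, which is the sense in which one individual’s presence or absence has limited effect.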

Google researchers have unveiled an auditing scheme for differentially private machine learning systems that requires only a single training run. The study highlights the connection between DP and statistical generalization, which underpins the proposed auditing methodology.

Differential privacy, at its core, provides a quantifiable assurance that individual data contributions have only a bounded influence on model outcomes. Privacy audits, in turn, look for flaws in the analysis or implementation of DP algorithms. Traditional audits are often computationally intensive because they require many training runs. The new scheme instead adds or removes multiple training examples independently within one run and exploits the parallelism this provides. As a result, it imposes fewer constraints on the underlying algorithm and works in both black-box and white-box settings.

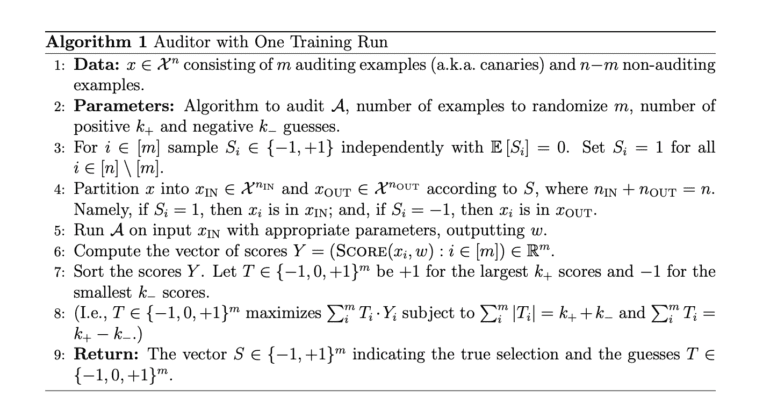

The approach, presented as Algorithm 1 of the study, independently decides whether to include or exclude each audit example, then computes a score per example and uses the scores to guess which examples were included. By building on the link between DP and statistical generalization, the approach remains versatile and works in both black-box and white-box settings. Algorithm 3, the DP-SGD Auditor, is a specific instantiation of the methodology, while the scheme itself applies to a wide range of differentially private algorithms. It also accommodates practical considerations such as in-distribution examples and parameter evaluations.
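
The include, score, and guess steps described above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not the study’s implementation: the function name `one_run_audit`, its parameters, the random sign-vector audit examples, and the noisy-sum stand-in for "training" are all hypothetical, and the noise level is not calibrated to any particular privacy parameter.

```python
import random


def one_run_audit(m=200, k_pos=20, k_neg=20, dim=500, noise_std=5.0, seed=0):
    """Toy one-run audit: returns (number of correct guesses, number of guesses).

    Everything here is a hypothetical stand-in: the "model" is just a noisy
    sum of the included audit examples, not a trained network.
    """
    rng = random.Random(seed)

    # Hypothetical audit examples: random +/-1 vectors.
    examples = [[rng.choice((-1.0, 1.0)) for _ in range(dim)] for _ in range(m)]

    # Step 1: independently flip a fair coin for each example (include or exclude).
    included = [rng.random() < 0.5 for _ in range(m)]

    # Step 2: a single "training run" (stand-in: sum of included examples plus noise).
    output = [0.0] * dim
    for vec, keep in zip(examples, included):
        if keep:
            for j in range(dim):
                output[j] += vec[j]
    output = [v + rng.gauss(0.0, noise_std) for v in output]

    # Step 3: score each example by how strongly the output correlates with it.
    scores = [sum(vec[j] * output[j] for j in range(dim)) for vec in examples]

    # Step 4: guess "included" for the k_pos highest scores, "excluded" for the
    # k_neg lowest scores, and abstain (0) on everything in between.
    order = sorted(range(m), key=lambda i: scores[i])
    guesses = [0] * m
    for i in order[m - k_pos:]:
        guesses[i] = 1
    for i in order[:k_neg]:
        guesses[i] = -1

    # Step 5: count how many non-abstaining guesses match the coin flips.
    correct = sum(
        1
        for i in range(m)
        if (guesses[i] == 1 and included[i]) or (guesses[i] == -1 and not included[i])
    )
    return correct, k_pos + k_neg


if __name__ == "__main__":
    correct, total = one_run_audit()
    print(f"{correct} of {total} guesses were correct")
```

Abstaining on the middle of the score range is a design choice in this sketch: the auditor only commits to the guesses it is most confident about. The more accurate those guesses are, the weaker the privacy protection the single run could have provided.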

In summary, the auditing method produces quantifiable, empirical evidence about an algorithm’s privacy guarantee, which can be used to check mathematical analyses and detect implementation errors. It applies generically to various differentially private algorithms, accommodates factors like in-distribution examples and parameter evaluations, and does so with substantially reduced computational overhead.
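
To make "checking a mathematical analysis" concrete, here is a minimal sketch of how a count of correct membership guesses might be turned into an empirical lower bound on ε. It rests on simplifying assumptions that are not spelled out in the article: pure DP only (δ = 0), and a count of correct guesses that is no larger in distribution than a binomial with success probability e^ε / (1 + e^ε). The function names `binom_tail` and `epsilon_lower_bound` and the parameter `beta` are hypothetical.

```python
import math


def binom_tail(n, p, v):
    """P[Binomial(n, p) >= v], summed directly (adequate for a few hundred trials)."""
    return sum(math.comb(n, i) * p**i * (1.0 - p) ** (n - i) for i in range(v, n + 1))


def epsilon_lower_bound(correct, total, beta=0.05, eps_max=20.0, iters=60):
    """Largest eps that the observed guess count rules out at confidence 1 - beta.

    Assumption (pure DP, delta = 0): if the algorithm were eps-DP, each guess
    would be correct with probability at most e^eps / (1 + e^eps).
    """

    def p_value(eps):
        p = math.exp(eps) / (1.0 + math.exp(eps))
        return binom_tail(total, p, correct)

    # If even eps = 0 (pure chance) explains the result, the audit shows nothing.
    if p_value(0.0) > beta:
        return 0.0
    # If the result is implausible even at eps_max, report the cap.
    if p_value(eps_max) <= beta:
        return eps_max

    # Bisection: p_value grows with eps, so find where it crosses beta.
    lo, hi = 0.0, eps_max
    for _ in range(iters):
        mid = (lo + hi) / 2.0
        if p_value(mid) <= beta:
            lo = mid  # eps = mid is still ruled out
        else:
            hi = mid
    return lo  # conservative side of the crossing point


if __name__ == "__main__":
    print(epsilon_lower_bound(correct=38, total=40))
```

If the bound computed this way exceeds the ε that an implementation claims, then under these assumptions either the analysis or the code is likely wrong, which is the kind of error detection the audit is meant to enable.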

The proposed auditing scheme is a significant step forward in assessing differentially private machine learning techniques, requiring only a single training run. Its ability to provide effective privacy assessments at far lower computational cost than traditional audits is noteworthy. Its broad applicability to differentially private algorithms, along with a pragmatic approach covering in-distribution examples and parameter evaluations, makes it a valuable contribution to the field of privacy auditing.

Conclusion:

Google’s approach to privacy auditing in machine learning is a notable step forward for the market. It gives businesses a way to check that their privacy assurances hold while reducing computational expenses, a crucial factor in today’s data-driven landscape. This strengthens the confidence of organizations and individuals in the privacy of their data, fostering trust and innovation within the industry.