TL;DR:

- Google introduced VideoPoet, a cutting-edge Large Language Model (LLM) for video generation.

- VideoPoet excels in various tasks, including text-to-video, image-to-video, video stylization, inpainting, outpainting, and video-to-audio conversion.

- It addresses the challenge of creating coherent large motions in videos, surpassing current video generation technologies.

- VideoPoet integrates multiple video generation capabilities within a single LLM framework, distinguishing itself from segmented models.

- It is trained with multiple tokenizers (MAGVIT V2 for video and images, SoundStream for audio), allowing it to perform diverse tasks from text inputs.

- Compared to other AI-generated video models, VideoPoet excels in text fidelity and motion interestingness, delivering more engaging videos.

- It showcases zero-shot capabilities, generating content from minimal input with exceptional accuracy.

- Some skepticism surrounds its practical application, particularly regarding specific prompting techniques like “8k.”

Main AI News:

Google has unveiled VideoPoet, a Large Language Model (LLM) for video generation. VideoPoet is engineered to handle a range of tasks, including text-to-video conversion, image-to-video transformation, video stylization, video inpainting and outpainting, and video-to-audio conversion. The model targets a persistent limitation of existing video generation technologies: producing seamless, coherent large-scale motion within a video.

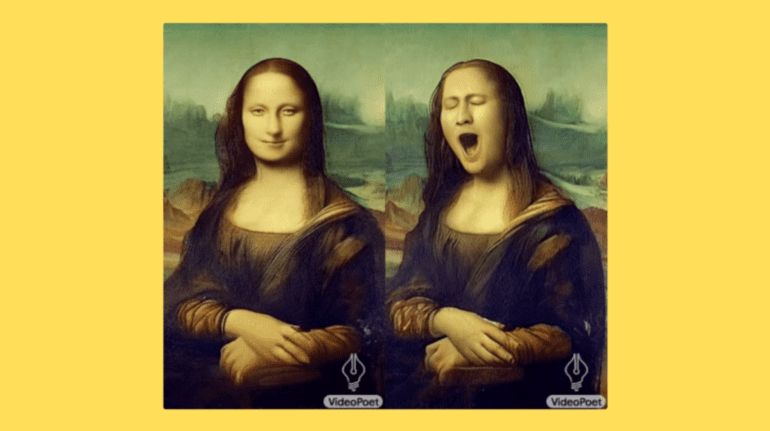

What sets VideoPoet apart is its holistic approach, integrating multiple video generation capabilities into a unified LLM framework. Unlike its segmented counterparts, this model harnesses various modalities and undergoes training with multiple tokenizers, including MAGVIT V2 for video and image, as well as SoundStream for audio. This multifaceted training empowers VideoPoet to undertake an array of tasks, from breathing life into static images to editing and stylizing videos based on textual inputs.
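To make the "multiple tokenizers, one model" idea concrete, here is a minimal toy sketch of how discrete tokens from separate tokenizers could be packed into a single sequence for one language model. This is an illustration only, not VideoPoet's actual implementation: the vocabulary sizes, the special tokens, and the `build_offsets` and `pack` helpers are all hypothetical, and the "visual" and "audio" entries merely stand in for tokenizers such as MAGVIT V2 and SoundStream.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Modality:
    name: str
    vocab_size: int  # number of discrete codes this tokenizer can emit


# Hypothetical vocabulary sizes, chosen only for illustration.
MODALITIES = [
    Modality("text", 1000),
    Modality("visual", 4096),  # stand-in for a video/image tokenizer
    Modality("audio", 1024),   # stand-in for an audio tokenizer
]

# Hypothetical control tokens marking sequence and segment boundaries.
SPECIAL_TOKENS = ["<bos>", "<eos>", "<text>", "<visual>", "<audio>"]


def build_offsets(modalities, num_special):
    """Give each modality a disjoint id range in one shared vocabulary."""
    offsets = {}
    start = num_special
    for m in modalities:
        offsets[m.name] = start
        start += m.vocab_size
    return offsets, start  # start now equals the total vocabulary size


OFFSETS, TOTAL_VOCAB = build_offsets(MODALITIES, len(SPECIAL_TOKENS))
SPECIAL_IDS = {tok: i for i, tok in enumerate(SPECIAL_TOKENS)}
VOCAB_SIZES = {m.name: m.vocab_size for m in MODALITIES}


def pack(segments):
    """Flatten (modality, local_ids) segments into one shared-id sequence."""
    seq = [SPECIAL_IDS["<bos>"]]
    for name, local_ids in segments:
        seq.append(SPECIAL_IDS[f"<{name}>"])  # segment marker
        for t in local_ids:
            if not 0 <= t < VOCAB_SIZES[name]:
                raise ValueError(f"id {t} out of range for {name}")
            seq.append(OFFSETS[name] + t)  # shift into the shared id space
    seq.append(SPECIAL_IDS["<eos>"])
    return seq


# A text prompt followed by visual and audio tokens, as one sequence.
sequence = pack([("text", [5, 17]), ("visual", [0, 4095]), ("audio", [3])])
print(TOTAL_VOCAB, sequence)
```

Because every modality ends up in the same id space, a single sequence model can in principle be trained to continue a text prompt with visual or audio tokens, which is the general intuition behind performing many tasks within one framework.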

In the ever-evolving landscape of AI-generated video technology, VideoPoet emerges as a significant advancement, carving out a niche for itself amongst established models like Imagen Video, RunwayML, Stable Video Diffusion, Pika, and Alibaba Group’s recent ‘Animate Anyone.’ What truly distinguishes VideoPoet is its remarkable proficiency in preserving text fidelity and infusing videos with captivating motions.

One key point of comparison is zero-shot capability. Like its contemporaries, VideoPoet can generate content from minimal input, such as a single text prompt or image, without specific training on the subject matter. It distinguishes itself by translating text prompts into videos more accurately, which improves the overall user experience. And while other models often struggle to create large, artifact-free motions, VideoPoet shows marked improvement, delivering more dynamic and fluid video content.

Google Research unveiled VideoPoet on December 19, 2023, and, as with many high-profile Google announcements, skepticism followed. While VideoPoet represents a step forward in text fidelity and motion interestingness within video generation, critics question the model’s reliance on specific prompting techniques. Some observers note the inclusion of terms like “8k” in prompts, a technique reminiscent of previous AI models such as VQGAN + CLIP and Stable Diffusion. This has raised concerns that photorealism is being artificially enhanced through prompt wording, casting doubt on the model’s inherent capabilities.

Conclusion:

Google’s VideoPoet marks a significant step in AI-driven video generation, combining many video tasks in a single versatile model. Its text fidelity and motion interestingness set it apart from competitors. However, the use of specific prompt terms such as “8k” raises questions about its true capabilities. Even so, VideoPoet is likely to influence the video generation market, driving innovation and sparking debate about its potential impact.