TL;DR:

- Microsoft Research and UC Berkeley have introduced Gorilla, a Large Language Model (LLM) that outperforms GPT-4 at generating accurate API calls.

- Gorilla’s retriever-aware training gives it a semantic understanding of API calls, so the calls it generates are both precise and syntactically correct.

- Gorilla generates correct API calls 95% of the time, compared with 85% for GPT-4.

- Training on code snippets and natural-language descriptions gives Gorilla expertise in programming languages and coding best practices.

- Separately, Microsoft’s partnership with OpenAI has brought OpenAI’s generative AI into Microsoft services such as Bing Chat, GitHub Copilot, and Azure OpenAI Service.

Main AI News:

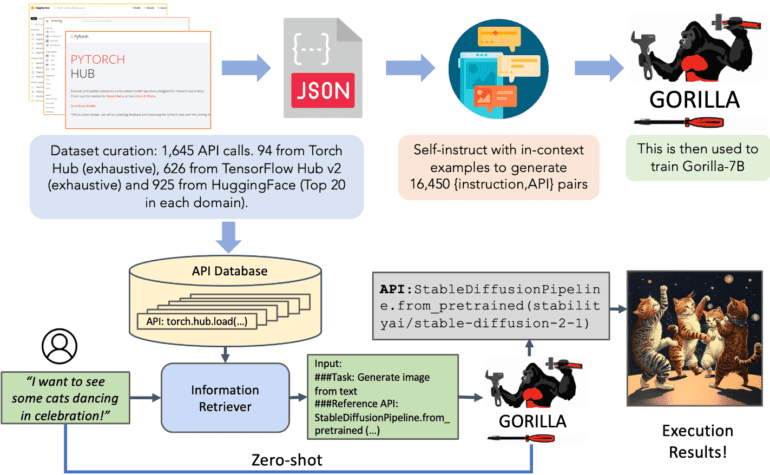

In a notable collaboration between academia and industry, UC Berkeley and Microsoft Research have developed Gorilla, a Large Language Model (LLM) built to generate API (Application Programming Interface) calls. Gorilla outperforms GPT-4 on this task and sets a new bar for the accuracy and versatility of generated API calls.

The Challenge: GPT-4’s Achilles Heel

GPT-4, developed by OpenAI, has shown strong capabilities in text generation, language translation, and question answering. Its weakness shows up with API calls: because it does not grasp the semantics underlying an API call, it tends to produce text that closely mirrors the API documentation without a real understanding of how the call behaves.

Enter the Game-Changer: Gorilla’s Mastery

Gorilla’s answer is a technique called “retriever-aware training.” The model is trained on a large dataset of API calls paired with their documentation, which teaches it the semantics of those APIs. As a result, Gorilla can generate API calls that are both syntactically and semantically correct, even when the API documentation changes.
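Retriever-aware training is described here only at a high level. As a rough sketch, assuming each training record pairs a user instruction with a reference API call, a retrieved documentation snippet can be appended to the instruction so the model learns to consult documentation rather than rely on memory alone. The class and function names and the exact prompt wording below are illustrative assumptions, not Gorilla’s actual code.

```python
from dataclasses import dataclass


@dataclass
class TrainingExample:
    """Hypothetical training record: a user instruction and the API call that answers it."""
    instruction: str
    api_call: str


def build_retriever_aware_prompt(example: TrainingExample, retrieved_doc: str) -> dict:
    """Pair an instruction with retrieved API documentation (illustrative format only).

    The model is trained to emit `api_call` given both the instruction and the
    documentation text, so at inference time it can read up-to-date docs
    instead of relying only on what it memorized during training.
    """
    # Assumed prompt wording; the real training template may differ.
    prompt = (
        f"{example.instruction}\n"
        f"Use this API documentation for reference: {retrieved_doc}"
    )
    return {"input": prompt, "target": example.api_call}


example = TrainingExample(
    instruction="Classify the objects in an image.",
    api_call="torch.hub.load('pytorch/vision', 'resnet50', pretrained=True)",
)
record = build_retriever_aware_prompt(example, '{"api_name": "resnet50"}')
print(record["input"])
print(record["target"])
```

Because the documentation travels with the prompt, a change to the docs changes the model’s input directly, which is one plausible reading of why this style of training tolerates documentation updates.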

The Proof: Gorilla’s Unrivaled Prowess

A recent study shows Gorilla outperforming GPT-4 across a range of API call scenarios: Gorilla generates correct API calls 95% of the time, compared with 85% for GPT-4. Gorilla also generalizes beyond its training dataset, producing calls for APIs it has not seen before, something GPT-4 struggles to do.
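An accuracy figure like “95% of API calls correct” depends on how correctness is judged. One common approach is to compare syntax trees instead of raw strings, so harmless formatting differences are not counted as errors. The sketch below is a simplified, hypothetical scorer (function names and matching rules are assumptions), not the study’s evaluation code: a generated call counts as correct if it names the same function, passes the same positional arguments, and sets every keyword argument the reference sets.

```python
import ast


def call_parts(source: str) -> tuple[str, tuple[str, ...], dict]:
    """Parse one Python call expression into (function name, positional args, keyword args).

    Arguments are normalized with ast.unparse, so formatting differences such as
    quote style or spacing do not affect the comparison.
    """
    node = ast.parse(source.strip(), mode="eval").body
    if not isinstance(node, ast.Call):
        raise ValueError(f"not a call expression: {source!r}")
    name = ast.unparse(node.func)
    args = tuple(ast.unparse(arg) for arg in node.args)
    kwargs = {kw.arg: ast.unparse(kw.value) for kw in node.keywords}
    return name, args, kwargs


def matches(generated: str, reference: str) -> bool:
    """True if the generated call agrees with the reference call (hypothetical rule)."""
    try:
        g_name, g_args, g_kwargs = call_parts(generated)
    except (SyntaxError, ValueError):
        return False  # unparseable or non-call output counts as a miss
    r_name, r_args, r_kwargs = call_parts(reference)
    return (
        g_name == r_name
        and g_args == r_args
        # Every keyword in the reference must appear with the same value;
        # extra keywords in the generated call are tolerated.
        and all(g_kwargs.get(k) == v for k, v in r_kwargs.items())
    )


def accuracy(pairs: list[tuple[str, str]]) -> float:
    """Fraction of (generated, reference) pairs that match."""
    if not pairs:
        return 0.0
    return sum(matches(g, r) for g, r in pairs) / len(pairs)
```

Comparing normalized trees means `"resnet50"` and `'resnet50'` are treated as the same argument, while a different model name such as `'resnet18'` is scored as wrong.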

The Catalyst: Training Strategies Defined

Gorilla’s edge comes from its training regimen. Its corpus combines code snippets with natural-language explanations, giving it a deep understanding of programming languages, APIs, and coding best practices. Whereas GPT-4 is trained on a more general text corpus, this focused training lets Gorilla learn the syntax, semantics, and structural patterns specific to code. Gorilla also uses a novel attention mechanism that improves its ability to capture long-range dependencies and structure, further refining its code generation.

The Advantages: A Quantum Leap in AI Precision

Gorilla’s lead over GPT-4 offers several advantages for developers and industry practitioners:

• Higher Accuracy: Gorilla generates correct API calls 95% of the time, compared with GPT-4’s 85%.

• Flexibility: Gorilla can generate calls for APIs it has not seen during training, which is valuable for developers working with new interfaces.

• Ease of Use: Developers describe the API call they need in natural language, and Gorilla generates the corresponding call.
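Turning a natural-language request into an API call usually starts with finding the right documentation. The sketch below assumes a small, made-up set of API descriptions and uses simple keyword overlap as a stand-in retriever; a real system would typically use a stronger method such as BM25 or embedding search. The function names, the documentation strings, and the prompt layout are all hypothetical.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lower-case the text and split it into alphanumeric word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(request: str, api_docs: dict[str, str]) -> str:
    """Return the name of the API whose documentation shares the most tokens with the request."""
    if not api_docs:
        raise ValueError("no API documentation to search")
    query = tokenize(request)
    return max(api_docs, key=lambda name: len(query & tokenize(api_docs[name])))


def build_prompt(request: str, api_docs: dict[str, str]) -> str:
    """Append the best-matching documentation to the request before it goes to the model."""
    best = retrieve(request, api_docs)
    return f"{request}\nAPI documentation ({best}): {api_docs[best]}"


# Made-up documentation snippets, for illustration only.
docs = {
    "resnet50": "torch hub image classification model resnet50 pretrained on imagenet",
    "whisper": "speech recognition model that transcribes audio to text",
    "bert": "text embedding language model for sentence classification",
}
print(build_prompt("Transcribe this audio recording to text", docs))
```

The model then sees both the developer’s request and the most relevant documentation, which is the input shape retriever-aware training prepares it for.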

Forging Ahead: Microsoft and OpenAI’s Synergy

Beyond this research result, the partnership between Microsoft and OpenAI is itself a story of collaboration. Microsoft’s substantial investment in OpenAI, reported as a 49% stake, supports both companies’ growth and lets them pool resources. Microsoft has integrated OpenAI’s generative AI across its products, including Bing Chat, Bing Image Creator, GitHub Copilot, Microsoft 365 Copilot, and Azure OpenAI Service.

Conclusion:

Gorilla’s emergence marks a significant step forward in the accuracy of AI-generated API calls, and it shows how tailored training methods can overcome the limitations of general-purpose models. As Gorilla’s performance gains recognition, the market can expect more efficient and precise AI-assisted application development, making it easier for developers to work with diverse APIs and push innovation further.