TL;DR:

- Large language models (LLMs) like ChatGPT and GPT-4 have revolutionized natural language processing tasks.

- Vision-and-language models align the vision encoder with LLMs through instruction tuning.

- However, these models have been limited to region-level comprehension and struggle with complex tasks like region captioning and reasoning.

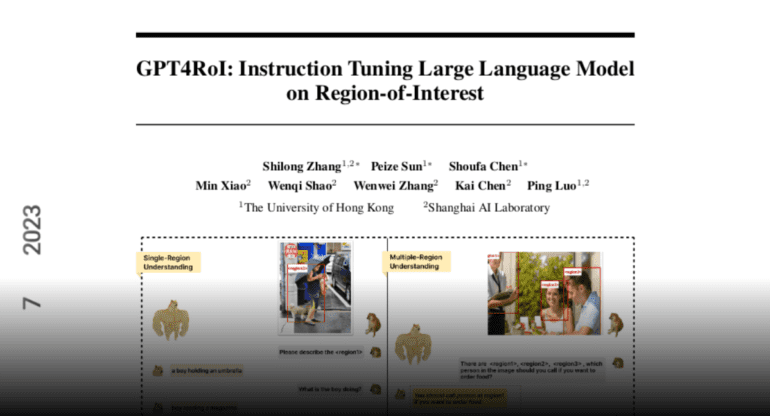

- This research introduces GPT4RoI, a vision-language model that enables fine-grained comprehension of regions of interest.

- Spatial instruction methods like RoIAlign and Deformable attention are used to align image-text pairings at the region level.

- Carefully selected datasets and object detectors are employed for instruction tuning, enhancing conversational quality and human-like responses.

- The model offers a unique interactive experience, allowing users to communicate using both language and spatial instructions.

- GPT4RoI brings advancements in complex area reasoning, region captioning, and multimodal comprehension.

Main AI News:

Large language models (LLMs) have recently made significant breakthroughs, showcasing remarkable performance in conversational tasks that require natural language processing. Notable examples include commercial products like ChatGPT, Claude, Bard, text-only GPT-4, and community open-source models like LLama, Alpaca, Vicuna, ChatGLM, MOSS, among others. These LLMs possess unprecedented capabilities, offering a promising path toward the development of general-purpose artificial intelligence models. Leveraging the effectiveness of LLMs, the multimodal modeling community is now forging a new technological frontier by utilizing LLMs as the universal interface for creating versatile models. This entails aligning the feature space of a specific task with that of pre-trained language models.

Vision-and-language models such as MiniGPT-4, LLaVA, LLaMA-Adapter, and InstructBLIP have emerged as pioneers in bridging the gap between vision and language. They achieve this by tuning the vision encoder to align with LLMs through instruction tuning on image-text pairings, which serves as a representative task. The quality of this alignment greatly impacts the performance of vision-and-language models, specifically under the design concept of instruction tuning. While these models demonstrate exceptional multimodal capabilities, their region-level alignment has limited their progression beyond complex comprehension tasks like region captioning and reasoning. Their alignment is primarily focused on image-text pairings. However, some studies have explored the use of external vision models such as MM-REACT, InternGPT, and DetGPT to facilitate region-level comprehension in vision-language models.

Nonetheless, their non-end-to-end design could be further improved to cater to the needs of all-purpose multimodal models. This research aims to develop a comprehensive vision-language model that achieves fine-grained comprehension of regions of interest from inception to completion. In picture-level vision-language models, the key design involves establishing the object box as the spatial instruction format. These models compress the entire image as the image embedding, without explicitly referencing specific parts. To obtain accurate responses, the LLM is furnished with visual elements extracted through spatial instruction and linguistic guidance. For instance, when the inquiry is presented as an interleaved sequence of “What is this doing?”, the model will replace the query with the relevant area feature referred to in the spatial instruction.

To facilitate spatial instruction, two flexible implementation methods are introduced: RoIAlign and Deformable attention. These methods update the training data from image-text datasets to region-text datasets, where each item’s bounding box and text description are provided to establish fine-grained alignment between region-text pairings. Publicly accessible datasets such as COCO object identification, RefCOCO, RefCOCO+, RefCOCOg, Flickr30K entities, Visual Genome (VG), and Visual Commonsense Reasoning (VCR) are combined to form modified instruction-tweaking formats. Furthermore, off-the-shelf object detectors can be utilized to extract object boxes from images and employ them as spatial instructions. This allows leveraging image-text training data, such as LLaVA150K, for spatial teaching purposes. Importantly, these enhancements are made without compromising the integrity of the LLM.

By learning from carefully selected image-text datasets, which have been tailored for visual instruction tweaking, the model demonstrates improved conversational quality and generates more human-like responses. Depending on the text length, the collected datasets are categorized into two types. The first type comprises short-text data, which includes information on item categories and basic characteristics. This data is used for pre-training the region feature extractor while maintaining the integrity of the LLM. The second type consists of lengthier texts that often involve complex ideas or require logical thinking. Intricate spatial instructions are provided for this data to enable end-to-end fine-tuning of the area feature extractor and LLM, simulating flexible user instructions in real-world scenarios. This approach, which harnesses the power of spatial instruction tuning, provides users of vision-language models with a unique interactive experience, allowing them to communicate their inquiries to the model using both language and spatial instructions.

Conclusion:

The introduction of GPT4RoI represents a significant milestone in the market for vision-language models. By addressing the limitations of existing models and enabling fine-grained comprehension of regions of interest, GPT4RoI opens up new possibilities for applications in various industries. Businesses can now leverage the power of multimodal comprehension, benefiting from accurate and human-like responses in conversational AI systems. This advancement paves the way for the development of more sophisticated and interactive artificial intelligence models that cater to the needs of diverse sectors, ultimately driving innovation and enhancing user experiences. As GPT4RoI’s capabilities continue to evolve, businesses should closely monitor its progress to stay at the forefront of AI technology and leverage its potential for competitive advantage.