TL;DR:

- GS-SLAM, a 3D Gaussian representation-based SLAM system, enhances 3D mapping and localization.

- Developed jointly by Shanghai AI Laboratory, Fudan University, Northwestern Polytechnical University, and The Hong Kong University of Science and Technology.

- Features a real-time differentiable splatting rendering pipeline and adaptive expansion strategy.

- Balances accuracy and efficiency, reducing runtime while improving pose tracking.

- Outperforms existing real-time SLAM methods on Replica and TUM-RGBD datasets.

- Fills the gap in camera pose estimation and real-time mapping using 3D Gaussian models.

- Improves scene reconstruction, camera pose estimation, and rendering performance.

- Ideal for robotics, virtual reality, and augmented reality applications.

- Surpasses NICE-SLAM, Vox-Fusion, and iMAP, and performs on par with CoSLAM.

- Notable for clear mesh boundaries, intricate details, and superior tracking.

- Suitable for real-time applications with a running speed of approximately 5 FPS.

- Requires high-quality depth information for optimal performance.

- Future work aims to reduce memory usage in large-scale scenes and to analyze the method's limitations in more depth.

Main AI News:

Researchers from Shanghai AI Laboratory, Fudan University, Northwestern Polytechnical University, and The Hong Kong University of Science and Technology have introduced GS-SLAM, a Simultaneous Localization and Mapping (SLAM) system built on a 3D Gaussian scene representation. The system aims to balance accuracy and efficiency in 3D mapping and localization.

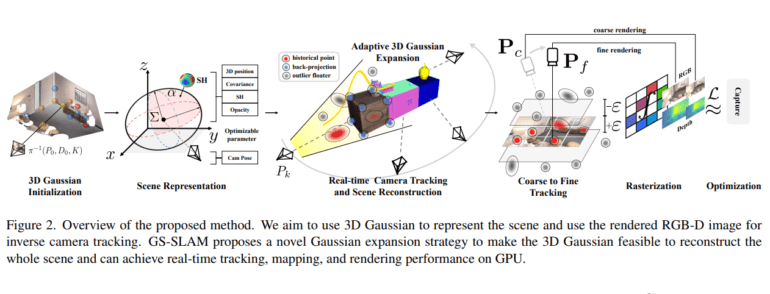

GS-SLAM combines three components: a real-time differentiable splatting rendering pipeline, an adaptive expansion strategy, and a coarse-to-fine technique for pose tracking. Together they reduce runtime and make pose estimation more robust. In tests on the Replica and TUM-RGBD datasets, the system outperformed the other real-time methods it was compared against.
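To make "splatting rendering" more concrete, here is a minimal sketch of the front-to-back alpha blending that splatting-style renderers commonly use to turn overlapping Gaussians into a pixel value. It is an illustration under stated assumptions, not GS-SLAM's implementation: the function name `composite_pixel` and its inputs are hypothetical, and the per-Gaussian opacities are assumed to have already been evaluated at the pixel.

```python
import numpy as np

def composite_pixel(colors, alphas, depths):
    """Blend the Gaussians covering one pixel, nearest first (illustrative only).

    colors: (N, 3) RGB of each Gaussian, alphas: (N,) opacity at this pixel,
    depths: (N,) distance of each Gaussian from the camera.
    """
    colors = np.asarray(colors, dtype=float)
    alphas = np.asarray(alphas, dtype=float)
    depths = np.asarray(depths, dtype=float)

    order = np.argsort(depths)  # sort front to back
    colors, alphas, depths = colors[order], alphas[order], depths[order]

    # Transmittance reaching each Gaussian: product of (1 - alpha) of those in front.
    transmittance = np.concatenate(([1.0], np.cumprod(1.0 - alphas)[:-1]))
    weights = alphas * transmittance

    color = (weights[:, None] * colors).sum(axis=0)  # blended RGB
    depth = (weights * depths).sum()                 # blended depth
    opacity = weights.sum()                          # accumulated opacity
    return color, depth, opacity
```

Every step is a sum or product of smooth quantities, which is why gradients can flow from a rendered pixel back to the Gaussian parameters and, in a SLAM setting, to the camera pose.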

The research also surveys existing real-time dense visual SLAM systems, covering methods based on handcrafted features, deep-learning embeddings, and NeRF. It identifies a gap: camera pose estimation and real-time mapping with 3D Gaussian models had not been addressed, and GS-SLAM is designed to fill it. By pairing the 3D Gaussian representation with the differentiable splatting pipeline and the adaptive expansion strategy, GS-SLAM delivers competitive scene reconstruction and, on the Replica and TUM-RGBD datasets, performs better than established real-time SLAM methods.

GS-SLAM also targets a known weakness of traditional SLAM techniques: the difficulty of producing finely detailed dense maps. It is an RGB-D dense SLAM approach built on a 3D Gaussian scene representation and a real-time differentiable splatting rendering pipeline, a combination that trades off speed and accuracy. The adaptive expansion strategy efficiently reconstructs newly observed scene geometry, and the coarse-to-fine technique improves camera pose estimation. The result is better tracking, mapping, and rendering performance, which is relevant to robotics, virtual reality, and augmented reality.
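The article does not spell out how the adaptive expansion strategy decides where new geometry is needed. One plausible criterion, assumed here purely for illustration, is to flag pixels where the current map renders with low accumulated opacity or where the rendered depth disagrees with the sensor depth. The function name `expansion_mask` and both thresholds are hypothetical.

```python
import numpy as np

def expansion_mask(rendered_opacity, rendered_depth, sensor_depth,
                   opacity_thresh=0.5, depth_err_thresh=0.05):
    """Mark pixels where new Gaussians might be added (assumed criterion).

    All inputs are (H, W) arrays; thresholds are illustrative defaults,
    not values taken from GS-SLAM.
    """
    rendered_opacity = np.asarray(rendered_opacity, dtype=float)
    rendered_depth = np.asarray(rendered_depth, dtype=float)
    sensor_depth = np.asarray(sensor_depth, dtype=float)

    has_depth = sensor_depth > 0                      # sensor returned a reading
    unseen = rendered_opacity < opacity_thresh        # map barely covers this pixel
    wrong = np.abs(rendered_depth - sensor_depth) > depth_err_thresh  # map disagrees
    return has_depth & (unseen | wrong)
```

Pixels selected by such a mask would then be turned into new Gaussians from the depth image, so the map grows only where the existing Gaussians fail to explain the current frame.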

For camera tracking, the coarse-to-fine technique selects reliable 3D Gaussians to optimize the camera pose, which reduces runtime and keeps the estimate robust. With these components, GS-SLAM performs competitively against state-of-the-art real-time methods on the Replica and TUM-RGBD datasets, offering an efficient and accurate option for simultaneous localization and mapping.

In comparative tests on the Replica and TUM-RGBD datasets, GS-SLAM surpasses NICE-SLAM, Vox-Fusion, and iMAP while delivering results on par with CoSLAM across various metrics. It is particularly strong at constructing meshes with precise boundaries and intricate details. In tracking, it outperforms Point-SLAM, NICE-SLAM, Vox-Fusion, ESLAM, and CoSLAM. With a running speed of approximately 5 FPS, GS-SLAM is positioned for real-time applications.

However, GS-SLAM has limitations. It relies on high-quality depth information from depth sensors to initialize and update its 3D Gaussians. In large-scale scenes its memory usage is high, which future work aims to address by integrating a neural scene representation. The adaptive expansion strategy and the coarse-to-fine camera tracking technique also need further analysis to understand more fully how they behave and where they break down.
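The dependence on depth quality is easier to see with a sketch of how a depth image can seed 3D positions. The example below uses the standard pinhole back-projection; whether GS-SLAM initializes its Gaussians exactly this way is an assumption, and `backproject_depth` and the intrinsics `fx`, `fy`, `cx`, `cy` are illustrative names.

```python
import numpy as np

def backproject_depth(depth, fx, fy, cx, cy):
    """Lift valid depth pixels to 3D points in the camera frame (pinhole model).

    depth: (H, W) array in metres, with 0 marking missing readings.
    Returns an (M, 3) array of candidate centres for new Gaussians.
    """
    depth = np.asarray(depth, dtype=float)
    h, w = depth.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))  # pixel column / row indices

    valid = depth > 0                # skip pixels the sensor could not measure
    z = depth[valid]
    x = (u[valid] - cx) * z / fx
    y = (v[valid] - cy) * z / fy
    return np.stack([x, y, z], axis=1)
```

Any noise or hole in the depth image propagates directly into the seeded positions, which is consistent with the stated need for high-quality depth input.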

Conclusion:

GS-SLAM marks a notable advance in 3D mapping and localization. By balancing accuracy and efficiency, this collaboration between Chinese AI research institutions could benefit fields such as robotics, virtual reality, and augmented reality. Its results against existing real-time SLAM methods on Replica and TUM-RGBD show gains in scene reconstruction, camera pose estimation, and tracking, although its reliance on high-quality depth input and its memory usage in large scenes remain open issues.