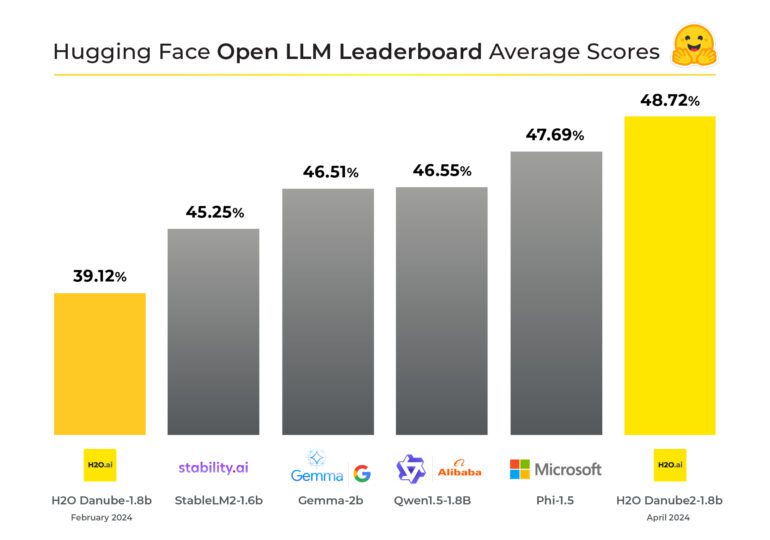

- H2O-Danube2-1.8B, an open-source language model from H2O.ai, ranks first on the Hugging Face Open LLM Leaderboard among models with fewer than 2B parameters.

- It also outperforms Google’s larger Gemma-2B model, which belongs to the 2.5B parameter category.

- Building on its predecessor, H2O-Danube 1.8B, the new model was trained on 2 trillion high-quality tokens and uses the Mistral architecture with further optimizations.

- Its small size makes it versatile and efficient, with cost advantages for enterprise and edge computing applications.

- CEO Sri Ambati describes it as a step toward democratizing large language models while keeping them sustainable within existing systems.

Main AI News:

In Generative AI and machine learning, H2O.ai is a prominent open-source contributor. Its latest release, the H2O-Danube2-1.8B model, licensed under Apache 2.0, ranks first on the Hugging Face Open LLM Leaderboard in the <2B parameter category. It also outperforms Google’s Gemma-2B, a larger model in the 2.5B parameter class. The result reflects H2O.ai’s commitment to improving AI accessibility and performance through open-source solutions.

H2O-Danube2-1.8B is a significant upgrade over its predecessor, H2O-Danube 1.8B. It was trained on a dataset of 2 trillion high-quality tokens and builds on the Mistral architecture, with optimizations such as dropping sliding-window attention, to deliver strong performance on natural language processing tasks.

Commenting on the milestone, Sri Ambati, CEO and Founder of H2O.ai, highlighted the model’s versatility and efficiency: “We love this category – a great size to fine-tune or post-train on domain-specific datasets for our enterprise customers, economically efficient on inference and training, and very easily embedded on edge devices like mobile phones, drones, and in offline applications.” Notably, H2O-Danube2-1.8B outperforms competitors such as Microsoft Phi-2 and Google Gemma-2B while remaining economical and simple to deploy for enterprise and edge computing applications.

Ambati also framed H2O-Danube2-1.8B as a catalyst for democratizing large language models while keeping them sustainable within existing systems: “The applications of this model are far-reaching, from detecting and preventing PII data leakage to improving prompt generation and enhancing guardrails and the robustness of RAG systems.”

As H2O.ai continues to advance AI research and development, it helps organizations adopt cutting-edge technology without the heavy resource demands of traditional approaches. Releases like H2O-Danube2-1.8B move the field closer to democratized, sustainable AI.

Conclusion:

H2O-Danube2-1.8B’s leaderboard result points to a shift in open-source AI toward models that combine performance, versatility, and economic efficiency. Its top ranking underscores the growing importance of accessible, sustainable AI solutions in the market and could encourage broader adoption and innovation across industries.