TL;DR:

- Helm.ai introduces advanced DNN foundation models for behavioral prediction and decision-making in autonomous driving.

- These models anticipate vehicle and pedestrian behavior in complex urban scenarios and predict the path an autonomous vehicle would take.

- The core representation leverages Helm.ai’s surround-view semantic segmentation and 3D detection system.

- Proprietary Deep Teaching technology lets this predictive capability scale broadly.

- The models learn from real driving data, capturing intricate aspects of urban driving.

- They generate predicted video sequences of the most likely future scene, along with a path prediction consistent with that predicted intent.

- The Deep Teaching training paradigm removes reliance on physics-based simulators and manually coded rules.

- The approach scales from high-end ADAS L2/L3 to L4 autonomous applications, and beyond to other robotics domains.

- Helm.ai’s hardware-agnostic, vision-first approach addresses the critical perception challenge.

- The recent $55 million Series C funding round brings Helm.ai’s total raised capital to $102 million.

Main AI News:

In a significant step toward the future of autonomous driving and robotics automation, Helm.ai has introduced Deep Neural Network (DNN) foundation models for behavioral prediction and decision-making. The models are a key part of Helm.ai's AI software stack for high-end ADAS L2/L3 and L4 autonomous driving.

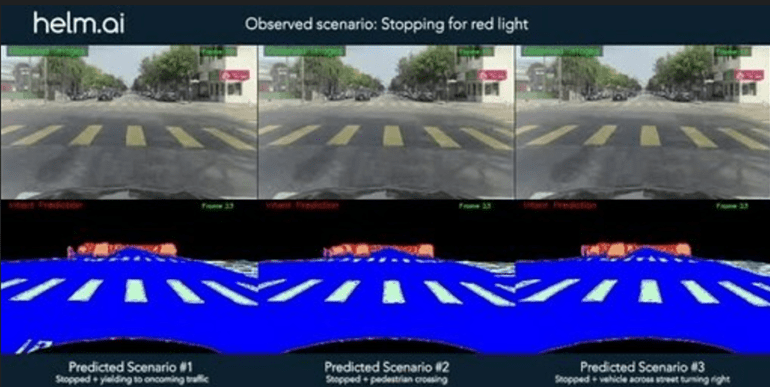

These DNN foundation models anticipate the actions of vehicles and pedestrians in complex urban settings and predict the path an autonomous vehicle would follow in those scenarios. Together, these capabilities form the basis of the decision-making a self-driving car requires. Helm.ai uses its industry-validated surround-view full-scene semantic segmentation and 3D detection system as the foundational representation on which the intent prediction and path planning capabilities are trained. What sets the models apart is Helm.ai's proprietary Deep Teaching technology, which the company says delivers broad predictive capability in a highly scalable manner.

The models learn from real-world driving data. Combined with the company's highly accurate and temporally stable perception system, this data captures rich information about the behavior of vehicles and pedestrians and about the changing driving environment. As a result, the DNNs automatically learn subtle but important aspects of urban driving. The foundation models take a sequence of observed images as input and generate predicted video sequences depicting the most likely future scenarios. At the same time, they output a path prediction consistent with the intent prediction, which is essential for an autonomous vehicle to choose the safest and most appropriate actions.

A standout feature of Helm.ai's DNN foundation models is that they are trained within the highly scalable Deep Teaching framework. This paradigm enables unsupervised learning directly from real-world driving data, sidestepping the limitations of physics-based simulators and manually coded rules. Helm.ai's development and validation pipeline, originally optimized for high-end ADAS L2/L3 mass production software, also extends to L4 fully autonomous applications. This scalability reaches beyond self-driving vehicles to a range of robotics domains.

Helm.ai is pursuing an AI-first approach to autonomous driving designed to scale from high-end ADAS L2/L3 mass production programs to large-scale L4 deployments. The company's software platform is hardware-agnostic and vision-first: it focuses on solving the core perception problem with cameras, while incorporating sensor fusion between vision and radar/lidar where needed. According to the company, today's announcement paves the way for scalable development and validation of AI-driven intent prediction and path planning software for future autonomous vehicles.

Helm.ai CEO Vladislav Voroninski remarked, “At Helm.ai, we are pioneering a highly scalable AI approach that addresses high-end ADAS L2/L3 mass production and large-scale L4 deployments within the same framework. Perception serves as the fundamental building block of any self-driving system. The more comprehensive and temporally stable our perception system, the smoother the development of downstream prediction capabilities becomes. This is especially crucial in complex urban environments. By harnessing our industry-validated surround-view urban perception system and Deep Teaching training technology, we’ve successfully trained DNN foundation models for intent prediction and path planning to learn directly from real driving data. This approach empowers them to comprehend a diverse range of urban driving scenarios and the subtleties of human behavior without the reliance on traditional physics-based simulators or manual rule coding.”

In August 2023, Helm.ai closed a $55 million Series C funding round. The round was led by Freeman Group, with participation from venture capital firms ACVC Partners and Amplo, as well as strategic investors Honda Motor, Goodyear Ventures, and Sungwoo Hitech. The financing brings Helm.ai's total capital raised to $102 million.

Conclusion:

Helm.ai's DNN foundation models represent a significant advance in autonomous driving technology. The models' ability to anticipate behavior and plan paths in complex urban scenarios, combined with their scalability and their capacity to learn from real-world data, positions Helm.ai at the forefront of the market. This innovation could accelerate the development and validation of AI-driven autonomous vehicle software and holds promise for broader applications in the robotics industry.