- Hidet is an open-source deep-learning compiler developed by CentML Inc.

- It targets inference workloads, compiling DNN models from PyTorch and ONNX into efficient CUDA kernels for NVIDIA GPUs.

- Hidet introduces task mappings to streamline computation assignment and ordering directly within tensor programs.

- The compiler performs operator fusion automatically after scheduling, which reduces engineering effort, and its input-size-agnostic schedule space shortens tuning time.

- In experiments, Hidet outperforms ONNX Runtime and TVM (with the AutoTVM and Ansor schedulers) by 1.22x on average and up to 1.48x, while cutting tuning time by 20x and 11x relative to AutoTVM and Ansor, respectively.

Main AI News:

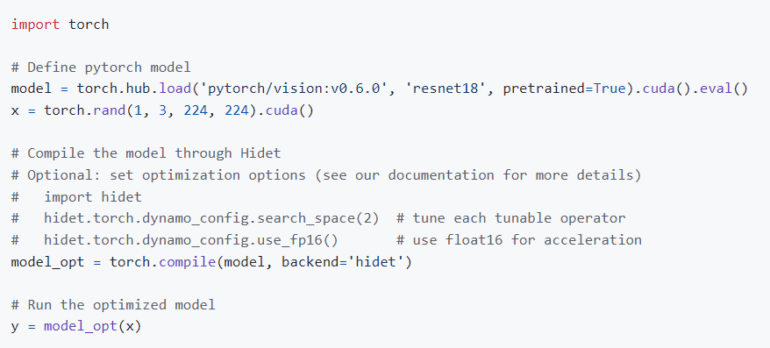

Demand for optimized deep learning inference has never been higher. Enter Hidet, an open-source deep learning compiler developed by CentML Inc. This Python-based compiler covers the compilation process end to end: it accepts DNN models from PyTorch and ONNX and generates efficient CUDA kernels for NVIDIA GPUs.

Originating from the research paper “Hidet: Task-Mapping Programming Paradigm for Deep Learning Tensor Programs,” the compiler is designed to minimize the latency of deep learning model inference, a key factor in deploying models smoothly across platforms that range from cloud services to edge devices.

Hidet’s development stems from the realization that crafting efficient tensor programs for deep learning operators is a formidable task, given the complexities inherent in modern accelerators like NVIDIA GPUs and Google TPUs, coupled with the rapid proliferation of operator types. While existing deep learning compilers, such as Apache TVM, rely on declarative scheduling primitives, Hidet introduces a novel approach.

Hidet embeds the scheduling process into the tensor programs themselves through dedicated mappings called task mappings. With them, developers define the assignment of computation to workers and the order in which it runs directly within the tensor program. Because optimizations can then be expressed at the level of individual program statements, a wider range of them becomes expressible. The authors call this the task-mapping programming paradigm.
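To make the idea of a task mapping concrete, here is a minimal pure-Python sketch. It does not use Hidet's API: the `TaskMapping` class and the `spatial` and `repeat` helpers are hypothetical names written for this illustration, and the composition rule is an assumption about how two mappings could be combined. A mapping takes a worker id and returns the ordered list of tasks (index tuples) that worker executes.

```python
from itertools import product
from math import prod


class TaskMapping:
    """Illustrative only: maps a worker id to an ordered list of task indices."""

    def __init__(self, task_shape, num_workers, fn):
        self.task_shape = tuple(task_shape)
        self.num_workers = num_workers
        self.fn = fn  # worker id -> list of task index tuples, in execution order

    def __call__(self, worker):
        return self.fn(worker)

    def __mul__(self, other):
        # Compose two mappings: `self` picks a coarse tile, `other` picks a
        # position inside that tile. (Assumed composition rule for this sketch.)
        shape = tuple(a * b for a, b in zip(self.task_shape, other.task_shape))

        def fn(worker):
            outer = self.fn(worker // other.num_workers)
            inner = other.fn(worker % other.num_workers)
            return [
                tuple(o * d + i for o, d, i in zip(t1, other.task_shape, t2))
                for t1 in outer
                for t2 in inner
            ]

        return TaskMapping(shape, self.num_workers * other.num_workers, fn)


def spatial(*shape):
    """Each worker gets exactly one task; workers are laid out row-major."""

    def fn(worker):
        idx = []
        for extent in reversed(shape):
            idx.append(worker % extent)
            worker //= extent
        return [tuple(reversed(idx))]

    return TaskMapping(shape, prod(shape), fn)


def repeat(*shape):
    """A single worker executes every task of the grid, one after another."""

    def fn(worker):
        return list(product(*(range(extent) for extent in shape)))

    return TaskMapping(shape, 1, fn)


# Four workers cover a 4x2 task grid; each worker handles two rows in order.
mapping = repeat(2, 1) * spatial(2, 2)
for w in range(mapping.num_workers):
    print(w, mapping(w))
```

In this sketch, both the assignment (which worker owns which task) and the ordering (the sequence in which one worker visits its tasks) are ordinary program values rather than external scheduling directives, which is the property the paragraph above describes.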

Furthermore, Hidet introduces post-scheduling fusion, an optimization that fuses operators automatically after scheduling is complete. Developers can therefore concentrate on scheduling individual operators, and the engineering effort needed for operator fusion drops substantially. The paradigm also constructs an efficient hardware-centric schedule space that does not depend on the program's input size, which markedly reduces tuning time.
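The fusion idea can be sketched in plain Python. This is an illustration under stated assumptions, not Hidet's implementation: `scheduled_matmul` stands in for an operator whose loop structure has already been fixed, and the `load_a` and `store_c` hooks (hypothetical names) show how neighboring element-wise operators might be folded into its loads and stores afterwards, without touching the schedule.

```python
def scheduled_matmul(a, b, load_a=lambda x: x, store_c=lambda x: x):
    """Stand-in for an already-scheduled operator.

    The loop nest is fixed. Fusion only swaps in what happens when an input
    element is loaded (`load_a`) and when an output element is stored (`store_c`).
    """
    m, k, n = len(a), len(b), len(b[0])
    c = [[0.0] * n for _ in range(m)]
    for i in range(m):
        for j in range(n):
            acc = 0.0
            for p in range(k):
                acc += load_a(a[i][p]) * b[p][j]
            c[i][j] = store_c(acc)
    return c


def scale(x):
    return 2.0 * x


def relu(x):
    return max(0.0, x)


def unfused(a, b):
    # Three separate passes, with an intermediate matrix between each one.
    scaled = [[scale(x) for x in row] for row in a]
    out = scheduled_matmul(scaled, b)
    return [[relu(x) for x in row] for row in out]


def fused(a, b):
    # One pass: scale is applied on load, relu on store.
    return scheduled_matmul(a, b, load_a=scale, store_c=relu)


a = [[1.0, -2.0], [3.0, 4.0]]
b = [[1.0, 0.0], [0.0, 1.0]]
print(unfused(a, b) == fused(a, b))
```

The point of the sketch is the division of labor: whoever writes `scheduled_matmul` never has to think about `scale` or `relu`, and the fused version avoids materializing the intermediate matrices.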

Experiments on modern convolution and transformer models show Hidet outperforming state-of-the-art DNN inference frameworks such as ONNX Runtime, as well as the TVM compiler equipped with the AutoTVM and Ansor schedulers. On average, Hidet achieves a 1.22x speedup, with a peak gain of 1.48x.

Beyond raw performance, Hidet also cuts tuning time substantially. Compared with AutoTVM and Ansor, it reduces tuning time by 20x and 11x, respectively.
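One way to see why a hardware-centric schedule space keeps tuning short is that its candidates are bounded by hardware limits rather than by the shape of the input. The sketch below is a simplified illustration, not Hidet's tuner: the candidate tile sizes, the 48 KiB shared-memory limit, the 1024-thread limit, and the assumption that each thread computes a 4x4 output patch are all placeholders chosen for the example.

```python
from itertools import product


def hardware_centric_space(smem_limit_bytes=48 * 1024, max_threads=1024, dtype_bytes=4):
    """Enumerate matmul tile sizes that fit assumed hardware limits.

    The problem size (M, N, K) is deliberately not an argument: the same
    candidate list is reused for every input shape.
    """
    space = []
    for bm, bn, bk in product((32, 64, 128), (32, 64, 128), (8, 16, 32)):
        smem_bytes = (bm * bk + bk * bn) * dtype_bytes  # one A tile plus one B tile
        threads = (bm // 4) * (bn // 4)  # assumed: each thread owns a 4x4 patch
        if smem_bytes <= smem_limit_bytes and threads <= max_threads:
            space.append((bm, bn, bk))
    return space


def grid_for(m, n, tile):
    """Number of thread blocks per dimension; partial edge tiles are rounded up."""
    bm, bn, _ = tile
    return (-(-m // bm), -(-n // bn))


space = hardware_centric_space()
tile = (128, 128, 8)
# The same tile is usable for a size that divides evenly and one that does not.
print(grid_for(1024, 1024, tile), grid_for(1000, 1000, tile))
```

Because the candidate list does not grow with the input shape and does not require tile sizes to divide the problem dimensions, the number of configurations to measure stays small and fixed in this sketch.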

As Hidet continues to develop, it is raising the bar for efficiency and performance in deep learning compilation. With its approach to task mapping and fusion optimization, Hidet is positioned to become a core tool for developers working on deep learning model serving.

Conclusion:

Hidet’s emergence marks a notable shift in deep learning compilation. Its approach to task mapping and fusion optimization not only improves performance but also streamlines the development process. As Hidet continues to evolve, it is likely to set new standards for efficiency and performance in deep learning model serving. Companies invested in deep learning technologies should monitor Hidet’s progress closely to stay competitive in this fast-moving field.