TL;DR:

- HiFi4G is a Gaussian-based method for modeling and rendering 4D human performances, aimed at VR/AR.

- It targets the occlusion gaps and texture deficiencies that plagued early nonrigid-registration systems while delivering photo-realistic rendering.

- The method combines nonrigid tracking with 3D Gaussian representation for compact and efficient data processing.

- HiFi4G outperforms existing implicit rendering techniques in optimization speed, rendering quality, and storage efficiency.

- Its explicit representation seamlessly integrates into GPU-based rendering pipelines.

- A dual-graph technique and 4D Gaussian optimization ensure an accurate representation of motion and appearance.

- Adaptive weighting mechanisms reduce artifacts in regions with small, nonrigid movements.

- HiFi4G compresses its output by roughly 25 times, to under 2 MB per frame, making it accessible on various devices, including VR headsets.

Main AI News:

In immersive technology, volumetric recording combined with lifelike rendering of 4D (spacetime) human performances is bringing down the barriers that once separated spectators from performers. The technology opens up new possibilities in virtual and augmented reality (VR/AR), including telepresence and tele-education experiences that change the way we interact with digital environments.

Early systems used nonrigid registration to recreate textured models from recorded footage, but they were plagued by occlusions and texture deficiencies, resulting in gaps and noise in the final output. Recent neural approaches, exemplified by NeRF, instead optimize a coordinate-based multi-layer perceptron (MLP) rather than relying on explicit reconstruction. This approach has enabled photo-realistic volume rendering.

Some dynamic variants of NeRF maintain a canonical feature space and use an additional implicit deformation field to reproduce features consistently in each live frame. However, this canonical design is sensitive to significant topological changes and large movements. More recent methods eliminate the deformation field through planar factorization or hash encoding, resulting in faster training and interactive rendering. Nevertheless, they raise concerns about runtime memory and storage.

Enter 3D Gaussian Splatting (3DGS), which returns to an explicit paradigm for representing static scenes and offers real-time, high-quality radiance field rendering based on GPU-friendly rasterization of 3D Gaussian primitives. Several ongoing projects have adapted 3DGS to dynamic settings. Some of them sacrifice rendering quality in order to model the nonrigid motion of dynamic Gaussians, while others lose the explicit, GPU-friendly elegance of the original 3DGS by resorting to additional implicit deformation fields to carry motion information.
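To make the idea of an explicit primitive more concrete, here is a minimal sketch of how the covariance of a single 3D Gaussian can be built from a scale vector and a rotation quaternion, following the factorization Σ = R S Sᵀ Rᵀ described in the original 3DGS work. The function names are illustrative assumptions and are not taken from any HiFi4G or 3DGS codebase.

```python
import numpy as np

def quat_to_rotmat(q):
    """Convert a (w, x, y, z) quaternion to a 3x3 rotation matrix."""
    w, x, y, z = q / np.linalg.norm(q)  # normalize so the result is a pure rotation
    return np.array([
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ])

def gaussian_covariance(scale, quat):
    """Build the covariance R S S^T R^T from per-axis scales and a rotation."""
    R = quat_to_rotmat(np.asarray(quat, dtype=float))
    S = np.diag(np.asarray(scale, dtype=float))
    M = R @ S
    return M @ M.T  # symmetric positive semi-definite by construction
```

Storing a scale and a rotation instead of a raw 3x3 matrix keeps the covariance valid no matter how an optimizer updates the parameters, which is one reason the explicit form is convenient to work with.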

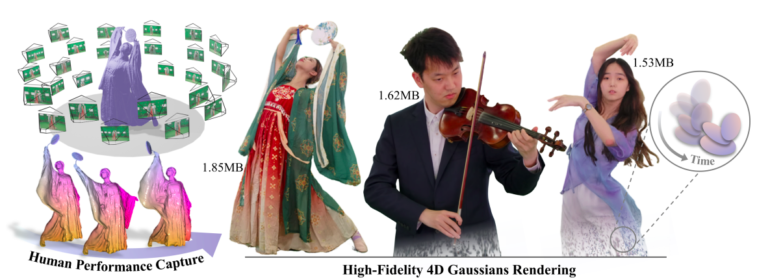

In this study, researchers from ShanghaiTech University, NeuDim, ByteDance, and DGene present HiFi4G, a fully explicit and compact Gaussian-based method for recreating high-fidelity 4D human performances from dense video. The core idea behind HiFi4G is to combine nonrigid tracking with the 3D Gaussian representation, separating motion data from appearance data. The result is a representation that is both compact and storage-friendly.

HiFi4G compares favorably with existing implicit rendering techniques in optimization speed, rendering quality, and storage efficiency. Thanks to its explicit representation, HiFi4G integrates directly into GPU-based rasterization pipelines, allowing users to view high-fidelity human performances in virtual reality.

The research team’s approach involves a dual-graph technique that pairs a fine-grained Gaussian graph with a coarse deformation graph, naturally connecting the Gaussian representation with nonrigid tracking. This explicit tracking technique divides sequences into segments, preserving rich motion data within each part. Within each segment, they use 3DGS to manage the number of Gaussians, removing incorrect ones carried over from the previous segment and introducing new ones.
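One way to picture how a coarse deformation graph can drive a much denser set of Gaussians is the sketch below. It uses a standard embedded-deformation style blend (each Gaussian follows a weighted mix of its nearest graph nodes), which is an assumption on our part and may differ from the exact formulation in the paper. All function names, the neighbor count `k`, and the kernel width `sigma` are hypothetical.

```python
import numpy as np

def bind_gaussians_to_nodes(gaussian_xyz, node_xyz, k=4, sigma=0.1):
    """For each fine Gaussian, pick its k nearest coarse nodes and blend weights."""
    # Pairwise squared distances, shape (num_gaussians, num_nodes)
    d2 = ((gaussian_xyz[:, None, :] - node_xyz[None, :, :]) ** 2).sum(axis=-1)
    idx = np.argsort(d2, axis=1)[:, :k]
    nearest = np.take_along_axis(d2, idx, axis=1)
    # Subtract the per-row minimum before exponentiating to avoid underflow to zero
    w = np.exp(-(nearest - nearest.min(axis=1, keepdims=True)) / (2.0 * sigma ** 2))
    w /= w.sum(axis=1, keepdims=True)
    return idx, w

def warp_gaussians(gaussian_xyz, node_xyz, node_R, node_t, idx, w):
    """Move Gaussian centers by blending the rigid motions of their bound nodes."""
    local = gaussian_xyz[:, None, :] - node_xyz[idx]           # (G, k, 3) offsets
    rotated = np.einsum("gkij,gkj->gki", node_R[idx], local)   # rotate each offset
    candidates = rotated + node_xyz[idx] + node_t[idx]         # per-node prediction
    return (w[..., None] * candidates).sum(axis=1)             # weighted blend
```

In this sketch the binding is computed once and reused across frames, so only the per-node rotations and translations change over time. That illustrates why separating motion from appearance can keep the per-frame data small.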

To address unnatural distortions and jittery artifacts, the research team introduces a 4D Gaussian optimization approach, incorporating temporal regularizers for consistent appearance properties and smooth motion characteristics. An adaptive weighting mechanism further refines the process, minimizing artifacts in regions with small, nonrigid movements.
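As a rough illustration of what temporal regularizers with adaptive weighting might look like, the sketch below penalizes appearance changes between consecutive frames and penalizes acceleration of Gaussian centers. The specific loss forms, the Gaussian-shaped weight, and the `motion_scale` parameter are our assumptions for illustration, not the losses defined by the HiFi4G authors.

```python
import numpy as np

def temporal_losses(attr_prev, attr_curr, pos_prev, pos_curr, pos_next,
                    motion_scale=0.01):
    """Return (appearance_loss, smoothness_loss, per-Gaussian adaptive weights)."""
    # Displacement of each Gaussian center between the previous and current frame
    disp = np.linalg.norm(pos_curr - pos_prev, axis=1)
    # Adaptive weight (assumed form): close to 1 for nearly static Gaussians,
    # decaying toward 0 as motion grows
    w = np.exp(-(disp / motion_scale) ** 2)
    # Appearance consistency: attribute changes cost more where motion is small,
    # which is where flicker is most visible
    appearance = np.mean(w * np.sum((attr_curr - attr_prev) ** 2, axis=1))
    # Motion smoothness: penalize the second finite difference (acceleration)
    accel = pos_next - 2.0 * pos_curr + pos_prev
    smoothness = np.mean(np.sum(accel ** 2, axis=1))
    return appearance, smoothness, w
```

Under these assumptions, a Gaussian moving at constant velocity incurs no smoothness penalty, while a nearly static Gaussian whose color changes between frames is penalized at full weight.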

After optimization, the research team obtains spatially and temporally compact 4D Gaussians. To make the result practical for consumers, they present a companion compression technique that applies residual correction, quantization, and entropy encoding to the Gaussian parameters. With a compression rate of approximately 25 times and a storage requirement of less than 2 MB per frame, HiFi4G makes immersive human performances accessible on a wide range of devices, including VR headsets.
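The three compression stages named above can be sketched in a few lines. This is a simplified illustration under our own assumptions: residuals are taken against a single reference frame, quantization is uniform with a hypothetical `step` size, and Python's `zlib` (DEFLATE, which includes Huffman coding) stands in for whatever entropy coder the authors actually use.

```python
import zlib
import numpy as np

def compress_frame(params, reference, step=1e-3):
    """Residual -> uniform quantization -> entropy coding (zlib as a stand-in)."""
    residual = params - reference                      # small if frames are similar
    q = np.round(residual / step)                      # uniform quantization
    q = np.clip(q, -32768, 32767).astype(np.int16)     # keep within int16 range
    return zlib.compress(q.tobytes(), level=9)

def decompress_frame(blob, reference, step=1e-3):
    """Invert compress_frame up to the quantization error (at most step / 2)."""
    q = np.frombuffer(zlib.decompress(blob), dtype=np.int16)
    q = q.reshape(reference.shape)
    return reference + q.astype(np.float32) * step
```

Because consecutive frames tend to differ only slightly, the quantized residuals cluster around zero and compress well. Taking the reported figures at face value, a ratio of about 25 times at under 2 MB per frame implies on the order of 50 MB per frame or less before compression.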

Conclusion:

HiFi4G’s emergence represents a significant leap forward in the VR/AR market. Its ability to provide high-fidelity human performances with efficient rendering and storage solutions opens up new possibilities for immersive experiences. This technology has the potential to drive adoption and innovation in the VR/AR industry, enhancing user experiences and paving the way for exciting developments in telepresence, tele-education, and beyond.