TL;DR:

- Hugging Face and ServiceNow have collaborated to develop StarCoder, an open-source language model for code generation.

- StarCoder is StarCoderBase further fine-tuned on 35 billion Python tokens.

- StarCoderBase was trained on 1 trillion tokens spanning more than 80 programming languages; both models have 15.5 billion parameters.

- StarCoder is an alternative to GitHub’s Copilot, DeepMind’s AlphaCode, and Amazon’s CodeWhisperer.

- It may not have as many features as GitHub Copilot, but it can be improved by the community and integrated with custom models.

- The new VSCode plugin complements StarCoder, allowing users to check if their code was in the pretraining dataset.

- StarCoder was trained on GitHub code and is not instruction-tuned, so plain natural-language commands may not work well without careful prompting.

- The model can reproduce code from its training data verbatim, so the attribution requirements and other terms of that code’s licenses must be respected.

- StarCoder has limitations and is available under the OpenRAIL-M license with legal restrictions on its use.

- Additional research is needed to explore the effectiveness of Code LLMs in different natural languages.

- AI-powered coding tools can reduce development expenses and free up developers for more creative projects.

- Engineers spend a significant amount of time debugging, costing the software industry billions of dollars annually.

Main AI News:

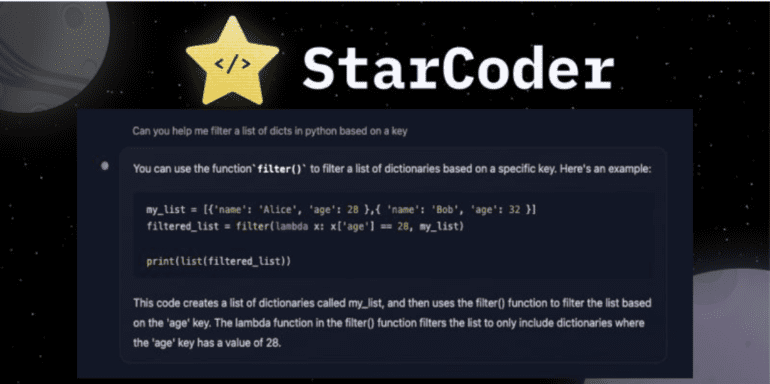

Hugging Face and ServiceNow have jointly released StarCoder, an open-source language model for code generation developed under the BigCode initiative. StarCoder is the StarCoderBase model fine-tuned on a further 35 billion Python tokens, and it offers an open alternative to AI code-generation systems such as GitHub’s Copilot, DeepMind’s AlphaCode, and Amazon’s CodeWhisperer.

StarCoder inherits the breadth of its base model, which was trained on more than 80 programming languages using text drawn from GitHub repositories, including source code, documentation, and Jupyter notebooks.

Training covered 1 trillion tokens with a context window of 8192 tokens, and the resulting model has 15.5 billion parameters. Although smaller, StarCoder outperforms larger models such as PaLM, LaMDA, and LLaMA on code benchmarks, and it matches or surpasses closed models such as OpenAI’s code-cushman-001.
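
The 8192-token context window has to hold both the prompt and everything the model generates. A minimal sketch of one way to budget it, assuming the prompt is already a list of token IDs: keep only the most recent prompt tokens so that prompt plus requested output fits. The function name `fit_to_context` and the keep-the-tail policy are illustrative assumptions, not part of any StarCoder API.

```python
from typing import List

CONTEXT_WINDOW = 8192  # StarCoder's context length, in tokens


def fit_to_context(prompt_ids: List[int], max_new_tokens: int,
                   context_window: int = CONTEXT_WINDOW) -> List[int]:
    """Left-truncate prompt_ids so prompt + generated tokens fit the window.

    Hypothetical helper: it keeps the most recent tokens, which for code
    completion are usually the ones closest to the cursor.
    """
    if max_new_tokens < 0:
        raise ValueError("max_new_tokens must be non-negative")
    budget = context_window - max_new_tokens
    if budget <= 0:
        raise ValueError("max_new_tokens leaves no room for the prompt")
    # Slicing from -budget keeps the last `budget` tokens (or all, if shorter).
    return prompt_ids[-budget:]


if __name__ == "__main__":
    prompt = list(range(10_000))  # stand-in for real tokenizer output
    trimmed = fit_to_context(prompt, max_new_tokens=256)
    print(len(trimmed), trimmed[0])  # 7936 2064
```

Dropping the oldest tokens is only one option; an editor integration might instead trim whole functions or files so the remaining prompt stays syntactically meaningful.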

Leandro von Werra, a co-lead of the project, told TechCrunch that StarCoder is committed to open-source principles. While it may not initially offer as many features as GitHub Copilot, its open-source nature lets the wider community contribute improvements, and it can be integrated with custom models.

A new StarCoder VSCode plugin lets developers work with the model inside the editor. Pressing CTRL+ESC checks whether the current code appears in the model’s pretraining dataset.
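
The article does not say how the plugin decides whether code appeared in the pretraining data. As a purely illustrative sketch of the general idea, the code below flags a snippet if any fixed-length character window of it (after whitespace normalization) also occurs in an indexed corpus. The window size, the normalization, the SHA-1 hashing, and all function names are assumptions; an index over a trillion-token corpus would likely need a more compact structure (for example a Bloom filter) than a Python set.

```python
import hashlib
from typing import Iterable, Set

WINDOW = 50  # characters per window; an illustrative choice, not the plugin's


def _normalize(code: str) -> str:
    # Collapse every run of whitespace into one space so that
    # reindented or reflowed code still matches.
    return " ".join(code.split())


def _windows(code: str, window: int) -> Set[str]:
    # All overlapping character windows of the normalized text.
    # Text shorter than one window yields nothing, so it is never flagged.
    text = _normalize(code)
    return {text[i:i + window] for i in range(len(text) - window + 1)}


def _digest(piece: str) -> bytes:
    # Store fixed-size hashes instead of raw strings.
    return hashlib.sha1(piece.encode("utf-8")).digest()


def build_index(corpus: Iterable[str], window: int = WINDOW) -> Set[bytes]:
    """Hash every window of every document in a (toy) corpus."""
    index: Set[bytes] = set()
    for doc in corpus:
        index.update(_digest(w) for w in _windows(doc, window))
    return index


def seen_in_corpus(snippet: str, index: Set[bytes], window: int = WINDOW) -> bool:
    """True if any window of `snippet` also occurs somewhere in the corpus."""
    return any(_digest(w) in index for w in _windows(snippet, window))


if __name__ == "__main__":
    corpus = ["def add(a, b):\n    return a + b\n\nprint(add(2, 3))"]
    index = build_index(corpus, window=20)
    print(seen_in_corpus("def add(a, b):  return a + b", index, window=20))  # True
    print(seen_in_corpus("def mul(x, y): return x * y", index, window=20))   # False
```

Exact window matching only detects near-verbatim overlap; it says nothing about code that was paraphrased or renamed.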

StarCoder is a large language model (LLM) trained on code from GitHub repositories, and it is not tuned to follow instructions. Direct commands, such as asking it to write a function that computes a square root, may therefore not work well. With appropriate prompting, however, it can still serve as a valuable technical assistant.
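
Because a base code model continues text rather than obeying commands, a common workaround is to phrase the request as the beginning of the code you want, such as a function signature plus a docstring, and let the model write the rest. The helper below is a hypothetical sketch that only builds such a prompt string; its name and the docstring layout are assumptions, and it does not call the model.

```python
from typing import List


def completion_prompt(func_name: str, params: List[str], description: str) -> str:
    """Rephrase a request as a code prefix that a base code model can continue.

    Rather than sending the instruction "Write a function that computes the
    square root", send the start of that function and let the model write
    the body.
    """
    signature = f"def {func_name}({', '.join(params)}):"
    docstring = f'    """{description}"""'
    # End with an indented empty line so the natural continuation is the body.
    return f"{signature}\n{docstring}\n    "


if __name__ == "__main__":
    prompt = completion_prompt(
        "square_root",
        ["x: float", "tol: float = 1e-10"],
        "Return the square root of x using Newton's method.",
    )
    print(prompt)
```

The signature and docstring constrain the completion far more than a free-form instruction would, which is why this framing tends to suit completion-style models.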

The model was trained with a Fill-in-the-Middle objective, in which special tokens mark the prefix, suffix, and middle parts of the input and output, so it can complete code in the middle of a file rather than only at the end. The pretraining dataset was filtered to include only permissively licensed code. Even so, the model can reproduce source code from that dataset verbatim, so users must observe any attribution requirements and other conditions set by the relevant code licenses.
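
Fill-in-the-Middle rearranges a file around the cursor: the code before it (prefix) and after it (suffix) are wrapped in special tokens, and the model generates the missing middle. The sketch below assumes the token spellings `<fim_prefix>`, `<fim_suffix>`, and `<fim_middle>` from the StarCoder model card, a prefix-suffix-middle ordering, and `<|endoftext|>` as the end-of-text token; check these against the tokenizer you actually load. It builds the prompt and splices a generated middle back into the file, without calling a model.

```python
FIM_PREFIX = "<fim_prefix>"
FIM_SUFFIX = "<fim_suffix>"
FIM_MIDDLE = "<fim_middle>"
EOS = "<|endoftext|>"  # assumed end-of-text token


def build_fim_prompt(prefix: str, suffix: str) -> str:
    """Prefix-suffix-middle layout: the model's output is the missing middle."""
    return f"{FIM_PREFIX}{prefix}{FIM_SUFFIX}{suffix}{FIM_MIDDLE}"


def splice_middle(prefix: str, suffix: str, generated: str) -> str:
    """Insert the generated middle between prefix and suffix.

    `generated` is the text produced after the prompt; anything from the
    end-of-text token onward is discarded.
    """
    middle = generated.split(EOS, 1)[0]
    return prefix + middle + suffix


if __name__ == "__main__":
    prefix = "def print_hello_world():\n    "
    suffix = "\n    print('Hello world!')"
    print(build_fim_prompt(prefix, suffix))
    # Stand-in for model output; a real run would call the model here.
    generated = '"""Print a greeting."""<|endoftext|>'
    print(splice_middle(prefix, suffix, generated))
```

Putting the suffix before the middle lets a left-to-right model see the code after the cursor before it starts generating.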

Like other language models, StarCoder has limitations: it can produce inaccurate, offensive, misleading, ageist, sexist, or stereotypical content. To address these concerns, StarCoder is released under the OpenRAIL-M license, which places legally binding restrictions on how the model may be used and modified.

The researchers evaluated StarCoder’s coding and natural-language abilities extensively, but only on English-only benchmarks. Broadening the applicability of such models will require further research into how well Code LLMs work, and where they fall short, in other natural languages.
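
The article does not name the metrics behind these evaluations. Code-generation benchmarks such as HumanEval are commonly scored with pass@k, the probability that at least one of k sampled completions passes the unit tests. Given n samples per problem of which c pass, the usual unbiased estimate is 1 - C(n-c, k) / C(n, k). The sketch below implements that formula as background on how such scores are typically computed; it is not the StarCoder evaluation harness, and the function name is illustrative.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate from n samples, c of which pass the tests.

    Equals 1 - C(n - c, k) / C(n, k): one minus the chance that a random
    subset of k samples contains no passing sample.
    """
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("need 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        return 1.0  # every size-k subset must contain a passing sample
    return 1.0 - comb(n - c, k) / comb(n, k)


if __name__ == "__main__":
    print(pass_at_k(n=20, c=5, k=1))  # 0.25
```

For k = 1 the formula reduces to c / n, the plain success rate; a benchmark score averages this value over all problems.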

Conclusion:

The release of StarCoder, an open-source code-generation model developed by Hugging Face and ServiceNow, marks a significant milestone for the market. Trained on a vast corpus of programming-language data and with 15.5 billion parameters, it offers a compelling alternative to existing code-generating systems. Because it is open source, developers can contribute to its ongoing improvement and customize it for their own needs.

The accompanying VSCode plugin brings the model into the editor and lets developers check whether their code appears in the pretraining data. As demand for AI-powered coding tools continues to rise, the market stands to benefit from lower development costs and higher developer productivity. StarCoder is a noteworthy step toward more efficient and creative software development.