- Hugging Face researchers introduce Idefics2, an 8B-parameter vision-language model for multimodal AI.

- Idefics2 integrates advanced OCR and native resolution techniques, setting new benchmarks in image-text fusion.

- The model’s architecture enhances visual data integrity and facilitates a nuanced understanding of multimodal inputs.

- Pre-trained on diverse datasets and fine-tuned on ‘The Cauldron,’ Idefics2 excels in various tasks from basic to complex.

- Versions include Idefics2-8B-Base, Idefics2-8B, and the forthcoming Idefics2-8B-Chatty, each tailored for specific scenarios.

Main AI News:

As digital interactions diversify, analytical tools need to handle many kinds of data at once. In practice, this means combining data types, particularly images and text, in models that can understand and respond to multimodal inputs. These capabilities underpin applications ranging from automated content generation to more sophisticated interactive systems.

Prior research has produced notable models such as LLaVA-NeXT and MM1, both known for strong multimodal performance. The LLaVA-NeXT family, notably its 34B version, and the MM1-Chat variants have set benchmarks in tasks such as visual question answering and image-text integration. Gemini models like Gemini 1.0 Pro have pushed further on complex AI challenges. DeepSeek-VL specializes in visual question answering, whereas Claude 3 Haiku excels at generating narrative content from visual cues, illustrating the range of methods for integrating visual and textual data within AI frameworks.

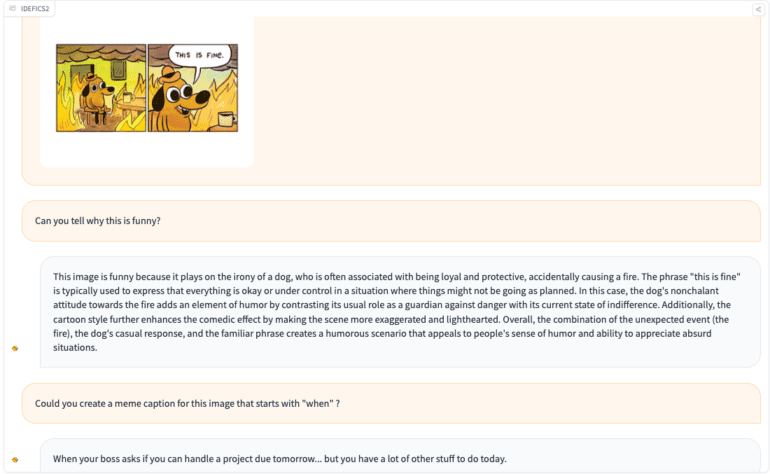

Idefics2, introduced by Hugging Face researchers, is an 8B-parameter vision-language model that aims to refine how text and image processing are fused within a unified framework. Prior approaches often resized images to fixed dimensions, potentially compromising visual fidelity. Idefics2 instead employs a strategy derived from NaViT that processes images at their native resolution. Visual features are then integrated into the language backbone through learned Perceiver pooling and an MLP modality projection, which sets Idefics2 apart and supports a deeper comprehension of multimodal inputs.
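To make the contrast between fixed-size resizing and native-resolution processing concrete, here is a minimal sketch that counts the patches a vision encoder would see in each case. The function names, the patch size of 14, the 980-pixel cap, and the 224-pixel square are illustrative assumptions, not values stated in this article.

```python
import math

def native_patch_grid(width, height, patch_size=14, max_side=980):
    """Return (new_width, new_height, num_patches), keeping the aspect ratio.

    patch_size and max_side are assumed values for illustration only.
    """
    # Downscale only when the longest side exceeds the cap; never upscale.
    scale = min(1.0, max_side / max(width, height))
    new_w = max(patch_size, round(width * scale))
    new_h = max(patch_size, round(height * scale))
    # A partially covered patch is assumed to be padded, hence ceil.
    cols = math.ceil(new_w / patch_size)
    rows = math.ceil(new_h / patch_size)
    return new_w, new_h, cols * rows

def fixed_square_patches(side=224, patch_size=14):
    """Patch count when every image is squashed to a side x side square."""
    cols = side // patch_size
    return cols * cols

if __name__ == "__main__":
    # A wide document scan keeps its 2:1 shape instead of being squashed.
    print(native_patch_grid(1960, 980))
    print(fixed_square_patches())
```

The patch count now varies with image size and shape, which is why a pooling step that yields a fixed number of visual tokens is useful before the language model.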

The model was pre-trained on a blend of publicly available resources: interleaved web documents, image-caption pairs from the Public Multimodal Dataset and LAION-COCO, and specialized OCR data from PDFA, IDL, and Rendered-text. It was then fine-tuned on “The Cauldron,” a curated compilation of 50 vision-language datasets. This phase used LoRA (low-rank adaptation) for parameter-efficient tuning, along with tailored fine-tuning strategies for the newly initialized parameters in the modality connector. The resulting versions range from the foundational base model to the forthcoming Idefics2-8B-Chatty, which targets conversational use. Each is suited to specific scenarios, from fundamental multimodal tasks to intricate, prolonged interactions.
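As a rough illustration of why LoRA is parameter-efficient, the sketch below writes out the standard low-rank update, W + (alpha / r) · B·A, in plain Python. The layer shape, rank, and `alpha` values are made-up examples; the article does not give the settings used for Idefics2.

```python
def lora_param_counts(d_out, d_in, rank):
    """Trainable parameters: full weight matrix vs. the two LoRA factors."""
    full = d_out * d_in
    lora = rank * (d_out + d_in)
    return full, lora

def matmul(a, b):
    """Plain-Python matrix product of a (m x n) and b (n x p)."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def lora_effective_weight(W, A, B, alpha):
    """Return W + (alpha / rank) * B @ A.

    W: d_out x d_in frozen weight, A: rank x d_in, B: d_out x rank.
    """
    rank = len(A)
    scale = alpha / rank
    delta = matmul(B, A)
    return [[w + scale * d for w, d in zip(w_row, d_row)]
            for w_row, d_row in zip(W, delta)]

if __name__ == "__main__":
    # Hypothetical 4096 x 4096 projection with rank 8.
    print(lora_param_counts(4096, 4096, 8))
    W = [[1, 0], [0, 1]]
    A = [[1, 2]]      # rank 1, d_in 2
    B = [[1], [0]]    # d_out 2, rank 1
    print(lora_effective_weight(W, A, B, alpha=2))
```

Only `A` and `B` are trained while `W` stays frozen, so the number of updated parameters grows with the rank rather than with the full matrix size.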

Versions of Idefics2

Idefics2-8B-Base:

Serving as the cornerstone of the Idefics2 series, this variant boasts 8 billion parameters and is adept at handling general multimodal tasks. Pre-trained on a diverse dataset comprising web documents, image-caption pairs, and OCR data, the base model exhibits robustness across a spectrum of vision-language tasks.

Idefics2-8B:

Building upon the base model, Idefics2-8B incorporates fine-tuning on ‘The Cauldron,’ augmenting its performance in complex instruction-following tasks. This version excels in understanding and processing multimodal inputs with enhanced efficiency, bolstering its versatility.

Idefics2-8B-Chatty (Upcoming):

Anticipated as a leap forward, the Idefics2-8B-Chatty is tailored for extensive conversations and contextual comprehension. Fine-tuned for dialogue applications, it is poised to excel in scenarios necessitating prolonged interactions, such as customer service bots or interactive storytelling platforms.
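For readers wondering what a prolonged multimodal interaction looks like as data, the following sketch builds a multi-turn conversation in the role/content message layout commonly used with Hugging Face chat templates. That layout is an assumption here, since the article does not describe the model's input format, and the helper names `user_turn`, `assistant_turn`, and `count_images` are hypothetical.

```python
def user_turn(text, num_images=0):
    """Build one user message: image placeholders first, then the text."""
    content = [{"type": "image"} for _ in range(num_images)]
    content.append({"type": "text", "text": text})
    return {"role": "user", "content": content}

def assistant_turn(text):
    """Build one assistant message holding plain text."""
    return {"role": "assistant", "content": [{"type": "text", "text": text}]}

def count_images(messages):
    """Count image placeholders so the caller can pass matching image inputs."""
    return sum(
        1
        for message in messages
        for part in message["content"]
        if part["type"] == "image"
    )

# A short dialogue: one image in the first turn, then a follow-up question.
conversation = [
    user_turn("What is shown in this receipt?", num_images=1),
    assistant_turn("A grocery receipt with three items."),
    user_turn("What is the total amount?"),
]

if __name__ == "__main__":
    print(len(conversation), "turns,", count_images(conversation), "image(s)")
```

Keeping earlier turns in the list is what lets a dialogue-tuned model answer the follow-up question in context.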

Advancements over Idefics1:

- Utilization of the NaViT strategy elevates image processing to native resolutions, bolstering visual data integrity.

- Enhanced OCR capabilities, facilitated by specialized data integration, elevate text transcription accuracy.

- Simplified architecture featuring a vision encoder and Perceiver pooling significantly enhances performance compared to Idefics1.
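The Perceiver pooling mentioned in the list above can be sketched as cross-attention in which a small, fixed set of latent queries attends over however many patch features the vision encoder emits. This is a deliberately simplified single-head version with no learned projections, so it shows the idea rather than the actual Idefics2 module; `perceiver_pool` and its arguments are illustrative names.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def perceiver_pool(patch_features, latents):
    """Pool N patch vectors into K vectors, one per latent query.

    patch_features: list of N vectors of length d (keys and values).
    latents: list of K query vectors of length d (learned in a real model).
    """
    d = len(latents[0])
    pooled = []
    for query in latents:
        # Scaled dot-product score between this query and every patch.
        scores = [sum(q * k for q, k in zip(query, patch)) / math.sqrt(d)
                  for patch in patch_features]
        weights = softmax(scores)
        # Attention-weighted average of the patch vectors.
        pooled.append([sum(w * patch[j] for w, patch in zip(weights, patch_features))
                       for j in range(d)])
    return pooled

if __name__ == "__main__":
    latents = [[1.0, 0.0], [0.0, 1.0]]          # K = 2 queries
    few = [[0.5, 0.1], [0.2, 0.9], [0.3, 0.3]]  # N = 3 patches
    many = few * 50                             # N = 150 patches
    print(len(perceiver_pool(few, latents)), len(perceiver_pool(many, latents)))
```

The output length depends only on the number of latents, so images of any resolution reach the language model as the same number of visual tokens.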

During testing, Idefics2 exhibited exceptional performance across various benchmarks. Notably, it achieved an 81.2% accuracy in Visual Question Answering (VQA), surpassing its predecessor, Idefics1, by a significant margin. Furthermore, Idefics2 showcased a remarkable 20% enhancement in character recognition accuracy for document-based OCR tasks compared to earlier iterations. The refined OCR capabilities notably reduced the error rate from 5.6% to 3.2%, affirming its efficacy in real-world applications demanding precise text extraction and interpretation.
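Percentage figures like these are easy to misread, so the short calculation below separates the absolute drop (in percentage points) from the relative drop, taking the reported error rates of 5.6% and 3.2% at face value. The function name `error_reduction` is illustrative.

```python
def error_reduction(old_error_pct, new_error_pct):
    """Return (absolute drop in percentage points, relative drop as a fraction)."""
    absolute = old_error_pct - new_error_pct
    relative = absolute / old_error_pct
    return absolute, relative

abs_drop, rel_drop = error_reduction(5.6, 3.2)

if __name__ == "__main__":
    print(f"absolute drop: {abs_drop:.1f} percentage points")
    print(f"relative drop: {rel_drop:.1%}")
```

On these numbers the error rate falls by 2.4 percentage points, which is roughly a 43% relative reduction in errors.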

Conclusion:

The introduction of Idefics2 marks a significant leap forward in the realm of multimodal AI, offering unparalleled capabilities in integrating text and image processing. With enhanced OCR accuracy and native resolution techniques, Idefics2 sets a new standard for precision and efficiency in interpreting diverse data inputs. This innovation paves the way for transformative applications across industries, driving advancements in automation, customer service, and interactive storytelling. Businesses leveraging Idefics2 stand to gain a competitive edge by harnessing its sophisticated capabilities for enhanced data comprehension and engagement.