TL;DR:

- Quantum computing is moving from theory toward practical applications, while generative AI advances toward hyper-realistic human images.

- The HyperHuman framework targets a known weakness of text-to-image models: generating coherent, high-fidelity human images.

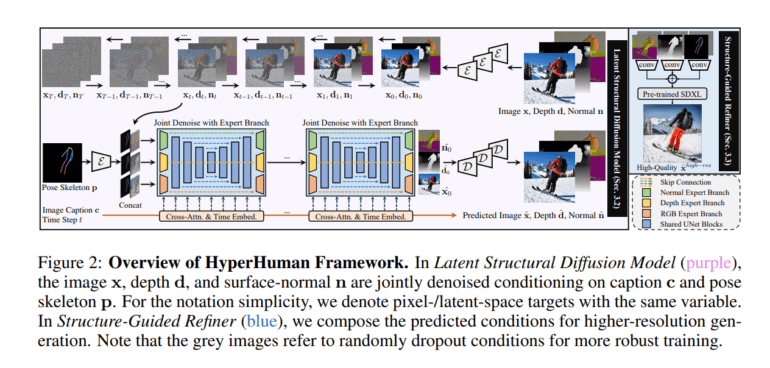

- It combines HumanVerse, a dataset of 340 million annotated human-centric images, with a Latent Structural Diffusion Model and a Structure-Guided Refiner.

- HyperHuman excels in image quality, pose accuracy, and text-image alignment, ranking second in CLIP scores.

- This technology promises transformative applications in image animation, virtual try-ons, and more.

Main AI News:

Quantum computing, long discussed for its theoretical advantages in asymptotic scaling, is stepping out of the theoretical realm and into practical applications on finite-sized problems. Alongside it, generative AI is making a leap of its own: the HyperHuman framework generates hyper-realistic human images.

In recent years, collaborative research efforts have paved the way for quantum computing’s real-world applications. These efforts have highlighted specific problem domains where quantum computers outshine their classical counterparts, unveiling the immense potential of this emerging technology.

The world of image generation has also witnessed significant advancements, with diffusion-based text-to-image (T2I) models taking center stage due to their scalability and training stability. However, even leading models like Stable Diffusion face challenges when it comes to creating high-fidelity human images.

Earlier approaches to controllable human image generation have struggled to produce coherent, realistic results. Enter the HyperHuman framework, which captures the correlations between a person's appearance and the underlying latent structure. It is trained on HumanVerse, a human-centric dataset of 340 million annotated images.

At the heart of HyperHuman lies the Latent Structural Diffusion Model, which does not only generate RGB images: it denoises depth and surface-normal maps together with the synthesized image, so that appearance and structure are learned jointly. To further enhance the quality and detail of synthesized images, the framework employs a Structure-Guided Refiner, which helps hyper-realistic human images emerge across a wide range of scenarios.
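To make the idea of denoising several modalities together more concrete, here is a minimal toy sketch of a joint noise-prediction loss. It is not the authors' implementation: the function names, the flat-list "latents", the shared noise level, and the `predict_noise` callable are all assumptions chosen for illustration, and a real system would operate on image latents with a neural network.

```python
import math
import random


def add_noise(x0, noise, alpha_bar):
    """Standard diffusion forward step: x_t = sqrt(a) * x0 + sqrt(1 - a) * noise."""
    a, b = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    return [a * x + b * n for x, n in zip(x0, noise)]


def mse(pred, target):
    """Mean squared error between two equal-length lists."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def joint_denoising_loss(targets, predict_noise, alpha_bar, rng):
    """Sum of per-modality noise-prediction losses at one shared noise level.

    targets: dict mapping a modality name (e.g. "rgb", "depth", "normal")
             to a flat list of floats (toy stand-ins for latents).
    predict_noise: hypothetical model; takes the dict of noisy inputs and
                   returns a dict of predicted noise with the same keys.
    alpha_bar: cumulative signal level in [0, 1] (assumed shared by all modalities).
    """
    noise = {k: [rng.gauss(0.0, 1.0) for _ in v] for k, v in targets.items()}
    noisy = {k: add_noise(v, noise[k], alpha_bar) for k, v in targets.items()}
    pred = predict_noise(noisy)
    return sum(mse(pred[k], noise[k]) for k in targets)


if __name__ == "__main__":
    toy = {"rgb": [0.2, 0.5, 0.9], "depth": [0.1, 0.4, 0.7], "normal": [0.0, 1.0, 0.0]}
    # A predictor that always outputs zeros stands in for an untrained model.
    zero_model = lambda noisy: {k: [0.0] * len(v) for k, v in noisy.items()}
    print(joint_denoising_loss(toy, zero_model, alpha_bar=0.5, rng=random.Random(0)))
```

Because a single predictor sees all three noisy inputs at once, the gradient from the depth and surface-normal terms also shapes the features used for the RGB output. That is one way to read the claim that the model learns appearance and structure together.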

Applications abound for this cutting-edge technology. Generating hyper-realistic human images from user inputs, including text and pose, has far-reaching implications, from image animation to virtual try-ons. Early methods using Variational Autoencoders (VAEs) or Generative Adversarial Networks (GANs) were hindered by issues of training stability and capacity. HyperHuman, however, overcomes these challenges and ushers in a new era of coherent human anatomy and natural poses in generative AI.

The significance of HyperHuman is underscored by rigorous assessments. The framework is evaluated with several metrics: FID (Fréchet Inception Distance), KID (Kernel Inception Distance), and FID_CLIP (FID computed on CLIP features) for image quality and diversity; CLIP similarity for text-image alignment; and pose accuracy metrics. HyperHuman not only excels in image quality and pose accuracy but also secures second place in CLIP scores despite using a smaller model. In essence, the framework delivers balanced image quality and text alignment across commonly used classifier-free guidance (CFG) scales.
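Two of the quantities mentioned above are simple enough to sketch directly. CLIP similarity is commonly computed as the cosine similarity between an image embedding and a text embedding, and the CFG scale controls how far the conditional noise prediction is pushed away from the unconditional one. The snippet below is an illustrative sketch only: the function names and the toy vectors are assumptions, and real embeddings would come from a CLIP encoder rather than hand-written lists.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two embedding vectors (1.0 means same direction)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def cfg_combine(eps_uncond, eps_cond, scale):
    """Classifier-free guidance: eps = eps_uncond + scale * (eps_cond - eps_uncond)."""
    return [u + scale * (c - u) for u, c in zip(eps_uncond, eps_cond)]


if __name__ == "__main__":
    # Toy stand-ins for an image embedding and a text embedding.
    image_emb = [0.6, 0.8, 0.0]
    text_emb = [0.8, 0.6, 0.0]
    print("CLIP-style similarity:", cosine_similarity(image_emb, text_emb))

    # Toy noise predictions without and with the text prompt.
    eps_uncond = [0.0, 2.0]
    eps_cond = [1.0, 4.0]
    for scale in (0.0, 1.0, 2.0):
        print("CFG scale", scale, "->", cfg_combine(eps_uncond, eps_cond, scale))
```

A scale of 0 ignores the prompt, 1 reproduces the conditional prediction, and larger values extrapolate beyond it. This typically trades image quality and diversity against text alignment, which is why results are reported across several CFG scales.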

Conclusion:

HyperHuman represents a significant leap in hyper-realistic image generation, built on diffusion models and a large human-centric dataset. Its ability to create high-quality human images from text and pose inputs has wide-ranging applications, making it a potential game-changer in industries like fashion, entertainment, and advertising. As the underlying models continue to advance, HyperHuman’s capabilities will likely reshape the market for AI-driven image generation technologies. Businesses should keep a close eye on these developments to stay competitive in the evolving landscape.