- IBM Research and Georgia Tech developed Transformer Explainer, a web-based tool to understand AI language models.

- The tool runs GPT-2 in-browser, allowing users to see how transformer models predict text.

- It is designed for non-experts and appeals to tech enthusiasts for its detailed yet accessible insights.

- Transformer Explainer features an adjustable “temperature” setting that makes word predictions more creative or more predictable.

- The tool is built with Svelte, D3, ONNX Runtime, and Hugging Face’s Transformers library.

- The tool is set to be presented at VIS 2024 alongside a similar tool for image-generating models.

- The team is exploring user-specific model integration and future developments in transformer models.

Main AI News:

IBM Research and Georgia Tech have unveiled an open-source, web-based tool called Transformer Explainer. It is designed to shed light on the neural network architecture powering today’s AI revolution. While the text generated by large language models might seem magical, this tool shows that it comes from a systematic process: the model computes a probability distribution over possible next words.

Developed with guidance from Benjamin Hoover, an AI engineer at IBM and a PhD candidate at Georgia Tech, Transformer Explainer provides a hands-on introduction to the inner workings of transformer-based language models. These models learn to mimic human text by training on vast amounts of data and are essential to modern AI advancements.

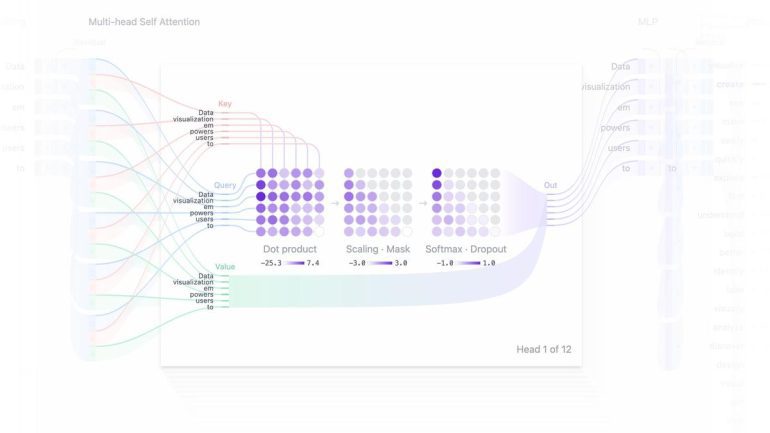

Transformer Explainer runs a live GPT-2 model directly in the user’s web browser, allowing users to input phrases and watch how the model predicts the next word. It converts words into tokens, processes them through transformer blocks, and generates a ranked list of predictions. Users can explore at different levels, from high-level overviews to detailed insights like attention scores, which help the model determine word importance.
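As a rough illustration of the attention scores mentioned above, the sketch below computes causal scaled dot-product attention weights for three tokens. The query and key vectors are made-up two-dimensional values rather than GPT-2's learned weights, and the function names are hypothetical; the real model uses many attention heads and much larger vectors.

```python
import math


def softmax(values):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(values)  # subtract the max for numerical stability
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(queries, keys):
    """Causal scaled dot-product attention weights.

    Row i holds how strongly token i attends to each token.
    Tokens after position i get weight 0, because a GPT-style
    model may only look at the current and earlier tokens.
    """
    dim = len(keys[0])
    rows = []
    for i, query in enumerate(queries):
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in keys[: i + 1]
        ]
        padding = [0.0] * (len(keys) - i - 1)
        rows.append(softmax(scores) + padding)
    return rows


# Toy inputs (assumed values, for illustration only).
tokens = ["Data", "visualization", "empowers"]
queries = [[1.0, 0.0], [0.5, 0.5], [0.0, 1.0]]
keys = [[1.0, 0.2], [0.3, 0.9], [0.1, 1.0]]

weights = attention_weights(queries, keys)
for token, row in zip(tokens, weights):
    print(token, [round(w, 3) for w in row])
```

Each printed row sums to 1, so it can be read as the share of attention a token gives to itself and to the tokens before it.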

Although designed for non-experts, the tool has gained popularity among tech enthusiasts. Hoover’s previous work includes exBERT, a transformer visualization tool that earned recognition at NeurIPS. His team also developed RXNMapper, demonstrating transformers’ ability to understand chemical language.

During the pandemic, Hoover returned to academia at Georgia Tech, where he became a technical consultant for Transformer Explainer under the mentorship of Professor Polo Chau. Three of Chau’s students conceived the tool five months ago, aiming to create an accessible yet detailed exploration of transformer models. A standout feature is the sliding “temperature” scale, which lets users adjust how predictable or how creative the model’s next-word predictions are.
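The effect of temperature can be sketched in a few lines. In the common formulation, the model's raw scores (logits) are divided by the temperature before a softmax turns them into probabilities: values below 1 sharpen the distribution toward the top candidate, while values above 1 flatten it so less likely words are sampled more often. The vocabulary and logits below are invented for illustration, and the function names are hypothetical rather than taken from the tool's code.

```python
import math


def softmax_with_temperature(logits, temperature=1.0):
    """Convert logits to probabilities after scaling by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def ranked_predictions(vocab, logits, temperature=1.0):
    """Return (token, probability) pairs, most likely first."""
    probs = softmax_with_temperature(logits, temperature)
    return sorted(zip(vocab, probs), key=lambda pair: pair[1], reverse=True)


# Toy candidates and scores (assumed values, not real GPT-2 output).
vocab = ["mat", "sofa", "moon", "piano"]
logits = [3.0, 2.0, 0.5, -1.0]

for temperature in (0.5, 1.0, 2.0):
    ranked = ranked_predictions(vocab, logits, temperature)
    print(temperature, [(tok, round(p, 3)) for tok, p in ranked])
```

The ranking of candidates stays the same at every temperature; only the gap between their probabilities changes, which is what makes sampled text more predictable or more varied.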

Built using Svelte, D3, ONNX Runtime, and Hugging Face’s Transformers library, Transformer Explainer is optimized to run GPT-2 in-browser, ensuring accessibility on standard CPUs.

Set to be showcased at VIS 2024, IEEE’s annual data visualization conference, Transformer Explainer will appear alongside Diffusion Explainer, a similar tool for image-generating models. The team, including Aeree Cho, Grace Kim, Alex Karpekov, Alec Helbing, Jay Wang, Seongmin Lee, and Hoover, is also exploring the integration of user-specific models.

As part of his PhD work, Hoover is collaborating with IBM’s Dmitry Krotov on a new transformer variation inspired by physical memory models. While this may seem complex, expect a new visualization tool to simplify it for everyone.

Conclusion:

IBM and Georgia Tech’s introduction of Transformer Explainer represents a significant step in making complex AI technologies more accessible to a broader audience. By demystifying the inner workings of transformer-based language models, this tool not only enhances understanding but also has the potential to accelerate the adoption of AI in various sectors. As businesses increasingly leverage AI for competitive advantage, tools like Transformer Explainer will be crucial in bridging the gap between AI developers and end-users. The growing trend towards transparency in AI operations could drive market demand for similar educational and explanatory tools, positioning companies that invest in these resources as leaders in AI literacy and innovation.