TL;DR:

- Researchers from Meta AI and MIT introduce In-Context Risk Minimization (ICRM) as an innovative algorithm.

- ICRM emphasizes the crucial role of context in AI research for superior domain generalization.

- The algorithm attends to context, meaning unlabeled examples from the test environment as they arrive, which leads to significant improvements in out-of-distribution performance.

- ICRM reframes out-of-distribution prediction challenges as in-distribution next-token predictions.

- It advocates training machine learning models using diverse environment examples.

- In-context learning balances trade-offs in efficiency-resiliency, exploration-exploitation, specialization-generalization, and focusing-diversifying.

- The study highlights the significance of considering context in data structuring and generalization research.

Main AI News:

In artificial intelligence, domain generalization remains a long-standing and increasingly urgent problem. As AI advances rapidly, it is ever clearer that models must adapt to environments that differ from their training data. This is particularly critical in domains like autonomous vehicles, where the consequences of failure can be catastrophic. Despite many proposed algorithms, the stark reality remains: none has consistently outperformed basic empirical risk minimization (ERM) when confronted with real-world out-of-distribution scenarios.

This formidable hurdle has spurred dedicated research groups, workshops, and broader societal considerations. As our reliance on AI systems continues to grow, it is imperative that we chart a course toward effective generalization that extends beyond the confines of training data distribution. Our goal is clear: AI systems must not only adapt but also function safely and effectively in new and uncharted environments.

In response to this pressing need, a collaborative team of researchers hailing from Meta AI and MIT CSAIL has emerged as a beacon of hope. Their groundbreaking research introduces the concept of In-Context Risk Minimization (ICRM), a novel algorithm designed to revolutionize domain generalization. At its core, the ICRM algorithm underscores the paramount importance of context in the realm of AI research.

The crux of their argument is this: researchers in domain generalization should treat the environment as context. Likewise, those working on Large Language Models (LLMs) should treat context as the environment that drives better generalization. The reported results support this view.

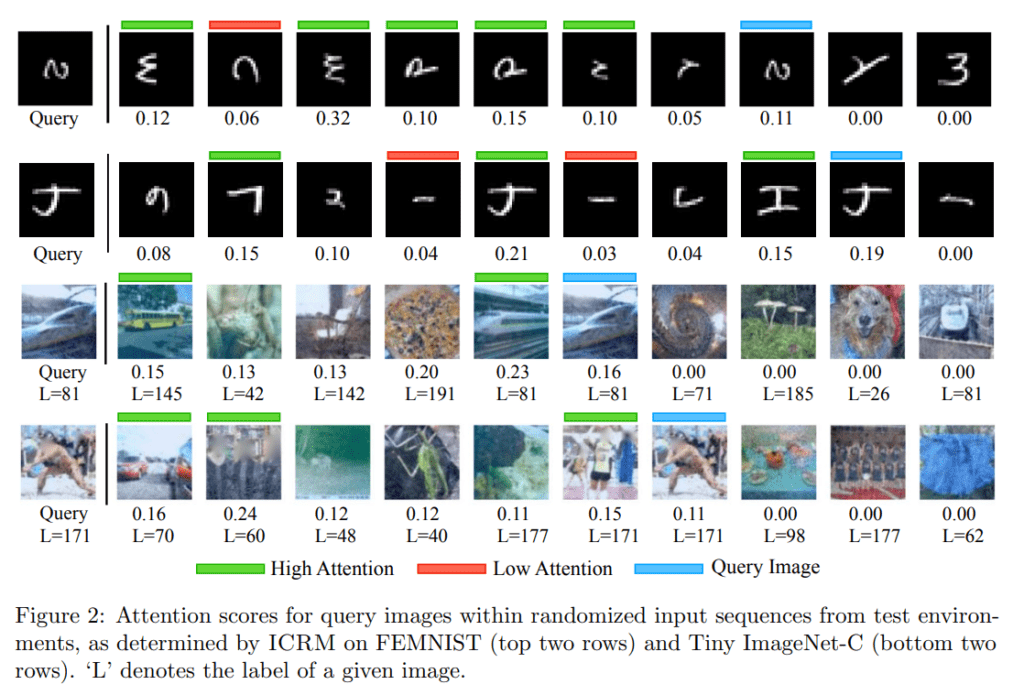

Through experiments and theoretical analysis, the researchers show that ICRM improves domain generalization. By attending to context, that is, unlabeled examples from the test environment as they arrive, the algorithm can zero in on the predictor that minimizes risk in that environment. The result? A substantial enhancement in out-of-distribution performance.
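To make the intuition concrete, here is a minimal toy sketch in Python. It is not the authors' implementation: the one-dimensional data, the `shift` parameter, and the helper names (`make_environment`, `predict_with_context`, and so on) are all assumptions chosen for illustration. Each environment shifts the inputs by a different amount, and the label depends on where an input sits relative to that shift. A single pooled threshold stands in for an ERM-style baseline, while the context-aware rule estimates the shift from the unlabeled test inputs seen so far.

```python
import random
from statistics import mean


def make_environment(shift, n, rng):
    """Sample n inputs around `shift`; the label is 1 when x exceeds the shift."""
    xs = [rng.gauss(shift, 1.0) for _ in range(n)]
    ys = [1 if x > shift else 0 for x in xs]
    return xs, ys


def fit_global_threshold(train_envs):
    """Toy stand-in for ERM: one threshold fitted on all pooled training inputs."""
    pooled = [x for xs, _ in train_envs for x in xs]
    return mean(pooled)


def predict_with_context(x, context, fallback):
    """Toy context-aware rule: threshold at the mean of the unlabeled context."""
    threshold = mean(context) if context else fallback
    return 1 if x > threshold else 0


def accuracy(preds, ys):
    return sum(p == y for p, y in zip(preds, ys)) / len(ys)


rng = random.Random(0)

# Training environments with small shifts (hypothetical values).
train_envs = [make_environment(s, 200, rng) for s in (-1.0, 0.0, 1.0)]
global_threshold = fit_global_threshold(train_envs)

# A test environment far outside the training shifts.
test_xs, test_ys = make_environment(5.0, 200, rng)

# Baseline ignores context; the context-aware rule only sees earlier test inputs.
baseline_preds = [1 if x > global_threshold else 0 for x in test_xs]
context_preds = [
    predict_with_context(x, test_xs[:t], global_threshold)
    for t, x in enumerate(test_xs)
]

baseline_acc = accuracy(baseline_preds, test_ys)
context_acc = accuracy(context_preds, test_ys)
print(f"baseline: {baseline_acc:.2f}  with context: {context_acc:.2f}")
```

In this toy setting the pooled threshold sits near zero, so the baseline labels almost every shifted test input as positive, whereas the context-aware rule recovers as more unlabeled test inputs arrive. No test labels are used at any point; only the inputs serve as context.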

But the ICRM algorithm doesn’t stop at merely addressing out-of-distribution prediction challenges; it reframes them as in-distribution next-token predictions. This shift in perspective has far-reaching implications, further solidifying the algorithm’s position as a transformative solution.
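One way to picture this reframing is as a data-layout step. The Python sketch below is illustrative only, and the function name `build_sequences`, the `max_context` parameter, and the toy data are assumptions rather than anything taken from the paper. Examples from each training environment are arranged into sequences, so that a sequence model would predict the label of the current input given the unlabeled inputs that preceded it in the same environment.

```python
from typing import Dict, List, Sequence, Tuple

Example = Tuple[float, int]  # (input, label); a toy 1-D input for illustration
Record = Tuple[str, List[float], float, int]  # (env, context inputs, query, label)


def build_sequences(
    environments: Dict[str, Sequence[Example]], max_context: int
) -> List[Record]:
    """Pair each example with up to `max_context` earlier inputs from its own environment."""
    records: List[Record] = []
    for env_name, examples in environments.items():
        for t, (x_t, y_t) in enumerate(examples):
            start = max(0, t - max_context)
            # Context holds inputs only: labels of earlier examples are left out.
            context = [x for x, _ in examples[start:t]]
            records.append((env_name, context, x_t, y_t))
    return records


# Hypothetical toy data: two environments with different input ranges.
environments = {
    "env_a": [(0.1, 0), (0.9, 1), (0.4, 0)],
    "env_b": [(5.2, 1), (4.7, 0)],
}

records = build_sequences(environments, max_context=2)
for env_name, context, query, label in records:
    print(env_name, context, query, label)
```

Because contexts never mix environments, a model trained on such records sees the same kind of sequence at test time: a stream of inputs from one environment, with a prediction requested for the latest one.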

In essence, the researchers advocate training machine learning models on examples drawn from diverse environments. Their theoretical insights and empirical experiments point the same way: by attending to unlabeled examples in context, ICRM lets a model home in on the risk minimizer of the test environment, resulting in remarkable improvements in performance.

The researchers also highlight in-context learning itself, which they describe as navigating the trade-offs between efficiency and resiliency, exploration and exploitation, specialization and generalization, and focusing and diversifying. This underscores the importance of context in domain generalization research and the adaptability of in-context learning. The authors believe researchers should harness this capability to rethink how data is structured and to advance the study of generalization.

Source: Marktechpost Media Inc.

Conclusion:

The introduction of the In-Context Risk Minimization (ICRM) algorithm marks a notable advance in the domain generalization capabilities of AI systems. By treating unlabeled examples from the current environment as context, it holds potential for industries reliant on AI, promising greater adaptability and better performance in diverse real-world scenarios. As AI continues to permeate various sectors, ICRM could influence the market by offering more effective solutions for complex, out-of-distribution challenges and enhancing the safety and efficiency of AI-driven applications.