TL;DR:

- Diffusion Models (DMs) are making strides in image synthesis.

- Researchers aim to extend T2I models to T2V for various applications.

- Balancing video quality, training cost, and model complexity is a challenge.

- LaVie, with 3 billion parameters, uses cascaded video latent diffusion models.

- It builds upon a pre-trained T2I model to create realistic videos.

- LaVie incorporates temporal self-attention and joint image-video fine-tuning.

- The WebVid10M dataset falls short, so LaVie leverages Vimeo25M.

- Training on Vimeo25M enhances LaVie’s performance significantly.

- LaVie is a step toward high-quality T2V generation and plans to improve further.

Main AI News:

In the realm of image synthesis, Diffusion Models (DMs) have been making remarkable advancements in recent years. These strides have ignited a newfound focus on the generation of photorealistic images from textual descriptions (T2I). As T2I models continue to impress, researchers are increasingly eager to extend these capabilities into the realm of video synthesis driven by textual inputs (T2V). The potential applications of T2V models in domains like filmmaking, video games, and artistic creation are promising.

However, achieving the delicate equilibrium between video quality, training costs, and model compositional complexity remains a formidable challenge. This endeavor demands meticulous attention to model architecture, training methodologies, and the curation of high-quality text-video datasets.

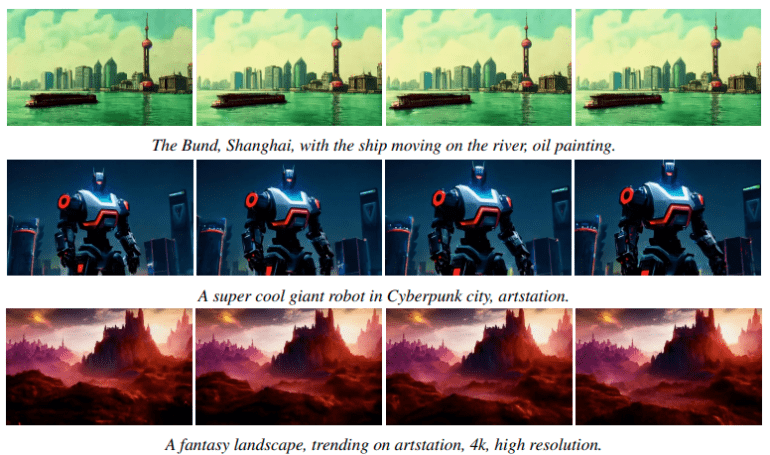

In response to these multifaceted challenges, the pioneering LaVie framework has emerged. Equipped with a colossal 3 billion parameters, LaVie operates on the principles of cascaded video latent diffusion models. This innovative framework serves as a cornerstone in the realm of text-to-video models, building upon the foundation laid by a pre-trained T2I model, specifically Stable Diffusion, as elucidated by Rombach et al. in 2022. Its primary objective is to craft visually lifelike and temporally coherent videos while preserving the creative prowess of the pre-trained T2I model.

LaVie is underpinned by two pivotal design principles. Firstly, it harnesses the power of simple temporal self-attention, augmented by RoPE, to adeptly capture the inherent temporal correlations in video data. Remarkably, complex architectural alterations yield only marginal improvements in the generated results. Secondly, LaVie employs joint image-video fine-tuning, a critical facet for producing high-quality and imaginative outputs. Directly fine-tuning on video datasets can jeopardize the model’s ability to amalgamate concepts and result in catastrophic memory loss. Joint image-video fine-tuning facilitates substantial knowledge transfer from images to videos, encompassing a broad spectrum of scenes, styles, and characters.

Moreover, the publicly accessible text-video dataset, WebVid10M, has been found wanting in terms of supporting the T2V task, primarily due to its low resolution and focus on watermark-centered videos. In response to this limitation, LaVie leverages the newly introduced text-video dataset named Vimeo25M, comprising a staggering 25 million high-resolution videos, all exceeding 720p, complemented by detailed textual descriptions.

Experimental evidence unequivocally demonstrates that training on Vimeo25M yields a substantial boost to LaVie’s performance. This enhancement translates into superior results across dimensions such as quality, diversity, and aesthetic appeal. Researchers envision LaVie as a pioneering stride towards realizing high-quality T2V generation. Future research endeavors are set to further expand LaVie’s capabilities, enabling the synthesis of extended videos featuring intricate transitions and movie-level quality, all guided by script descriptions.

Conclusion:

LaVie’s innovative approach to video generation with cascaded latent diffusion models, coupled with its use of high-quality datasets, marks a significant leap forward in the market. It paves the way for improved video synthesis for various industries, such as filmmaking, video games, and creative content creation. The ability to generate high-quality videos from text descriptions has the potential to revolutionize these domains, offering new possibilities and efficiencies.