TL;DR:

- Language models struggle to keep up with evolving language due to temporal misalignment.

- Conventional update methods, such as continued pretraining on new data, are resource-intensive and risk making models forget what they learned earlier.

- Researchers at the University of Washington and the Allen Institute for AI introduce ‘time vectors’ as a new solution.

- Time vectors are directions in a model's weight space, obtained by subtracting a model's pretrained weights from its weights after finetuning on text from a single time period.

- Interpolating between time vectors lets language models adjust to linguistic changes in other periods without additional training.

- Time vectors enhance adaptability and accuracy across various periods, tasks, and domains.

Main AI News:

In the ever-evolving field of computational linguistics, researchers at the University of Washington and the Allen Institute for AI have unveiled a new approach to a persistent challenge: temporal misalignment, the gap between the period a language model's training data comes from and the constantly changing language it encounters afterward.

Language models, built on recent advances in machine learning and artificial intelligence, aim to capture the nuances of human language. However, language keeps evolving under cultural, social, and technological shifts, and this constant change steadily erodes the models' effectiveness.

The Predicament

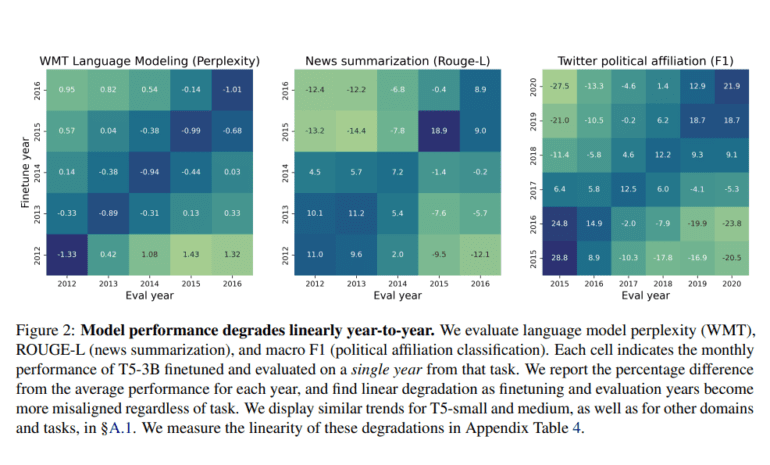

Outdated Language Models One pressing issue confronting language model developers is the discrepancy between the data used to train these models and the rapidly changing landscape of language. As time progresses, the lexicon and linguistic patterns across various domains undergo significant transformations. Consequently, language models trained on historical data gradually lose their efficacy.

The Challenge: Acquiring and Integrating New Data

The conventional remedy is to keep updating language models with fresh data as it becomes available, and dynamic evaluation and continued pretraining have been the go-to strategies for keeping models relevant. Both have drawbacks, however: models risk forgetting previously acquired knowledge, and integrating new data is resource-intensive.

A Game-Changing Solution: Time Vectors

In response to these challenges, researchers at the University of Washington and the Allen Institute for AI have introduced an approach called ‘time vectors,’ which offers a new way to adapt language models to the evolving linguistic landscape.

A time vector is created by finetuning a language model on text from a single time period, such as one year or month, and then subtracting the original pretrained weights from the finetuned weights. The result is a direction in the model's weight space that improves performance on text from that period. What sets the method apart is that time vectors can be interpolated. Time vectors for adjacent periods lie close together in weight space, so blending them yields models that handle linguistic changes in intervening and future periods better. Remarkably, this adaptation requires no additional training, a significant step forward for the field.
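To make the arithmetic concrete, here is a minimal sketch in Python. It assumes model weights are stored as a mapping from parameter names to lists of floats; real checkpoints would hold tensors. The sketch computes a time vector as τ_t = θ_t − θ_pre (finetuned minus pretrained weights), blends two time vectors linearly with a mixing weight `alpha`, and adds the blend back onto the pretrained weights. The function names, the toy weights, the years, and the equal 0.5 blend are all hypothetical illustrations. The researchers' exact interpolation procedure, including how the mixing weight is chosen, may differ.

```python
from typing import Dict, List

# Hypothetical weight format: parameter name -> flat list of floats.
# Real models would use tensors (e.g. a state dict); lists keep this self-contained.
Weights = Dict[str, List[float]]


def time_vector(finetuned: Weights, pretrained: Weights) -> Weights:
    """Time vector tau_t = theta_t - theta_pre, computed parameter by parameter."""
    return {
        name: [f - p for f, p in zip(finetuned[name], pretrained[name])]
        for name in pretrained
    }


def interpolate(tau_a: Weights, tau_b: Weights, alpha: float) -> Weights:
    """Assumed linear blend: alpha * tau_a + (1 - alpha) * tau_b."""
    return {
        name: [alpha * a + (1 - alpha) * b for a, b in zip(tau_a[name], tau_b[name])]
        for name in tau_a
    }


def apply_vector(pretrained: Weights, tau: Weights) -> Weights:
    """Add a (possibly interpolated) time vector back onto the pretrained weights."""
    return {
        name: [p + t for p, t in zip(pretrained[name], tau[name])]
        for name in pretrained
    }


if __name__ == "__main__":
    # Toy, made-up checkpoints: a pretrained model and two hypothetical finetunes.
    theta_pre = {"layer.weight": [0.0, 1.0, 2.0]}
    theta_2012 = {"layer.weight": [0.2, 1.0, 2.4]}  # hypothetical: finetuned on 2012 text
    theta_2016 = {"layer.weight": [0.6, 0.8, 2.0]}  # hypothetical: finetuned on 2016 text

    tau_2012 = time_vector(theta_2012, theta_pre)
    tau_2016 = time_vector(theta_2016, theta_pre)

    # Equal-weight blend as a stand-in model for an in-between year; no training involved.
    theta_2014 = apply_vector(theta_pre, interpolate(tau_2012, tau_2016, alpha=0.5))
    print(theta_2014)
```

Because the blend is plain arithmetic on weights that already exist, producing a model for an in-between period costs a few vector operations rather than a new round of finetuning. Setting `alpha` to 1.0 or 0.0 recovers either original finetuned model.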

Efficiency Meets Effectiveness

Time vectors give language models an efficient way to keep pace with the ever-changing nature of language. The approach has shown promising results, improving the adaptability and accuracy of language models across time periods, tasks, and domains, and the findings hold across different model sizes and time scales. Together, these results suggest that time is encoded in the weight space of finetuned language models, a significant insight for the field of language modeling.

Conclusion:

The introduction of ‘time vectors’ represents a significant advance in language modeling. It addresses the persistent challenge of keeping language models up to date with the ever-evolving nature of language. By improving adaptability and accuracy across domains and time periods, this advancement has the potential to reshape the market for language models, making them more effective and efficient tools for businesses across many industries.